AI Generated Content and Academic Journals

What are good policy options for academic journals regarding the detection of AI generated content and publication decisions?

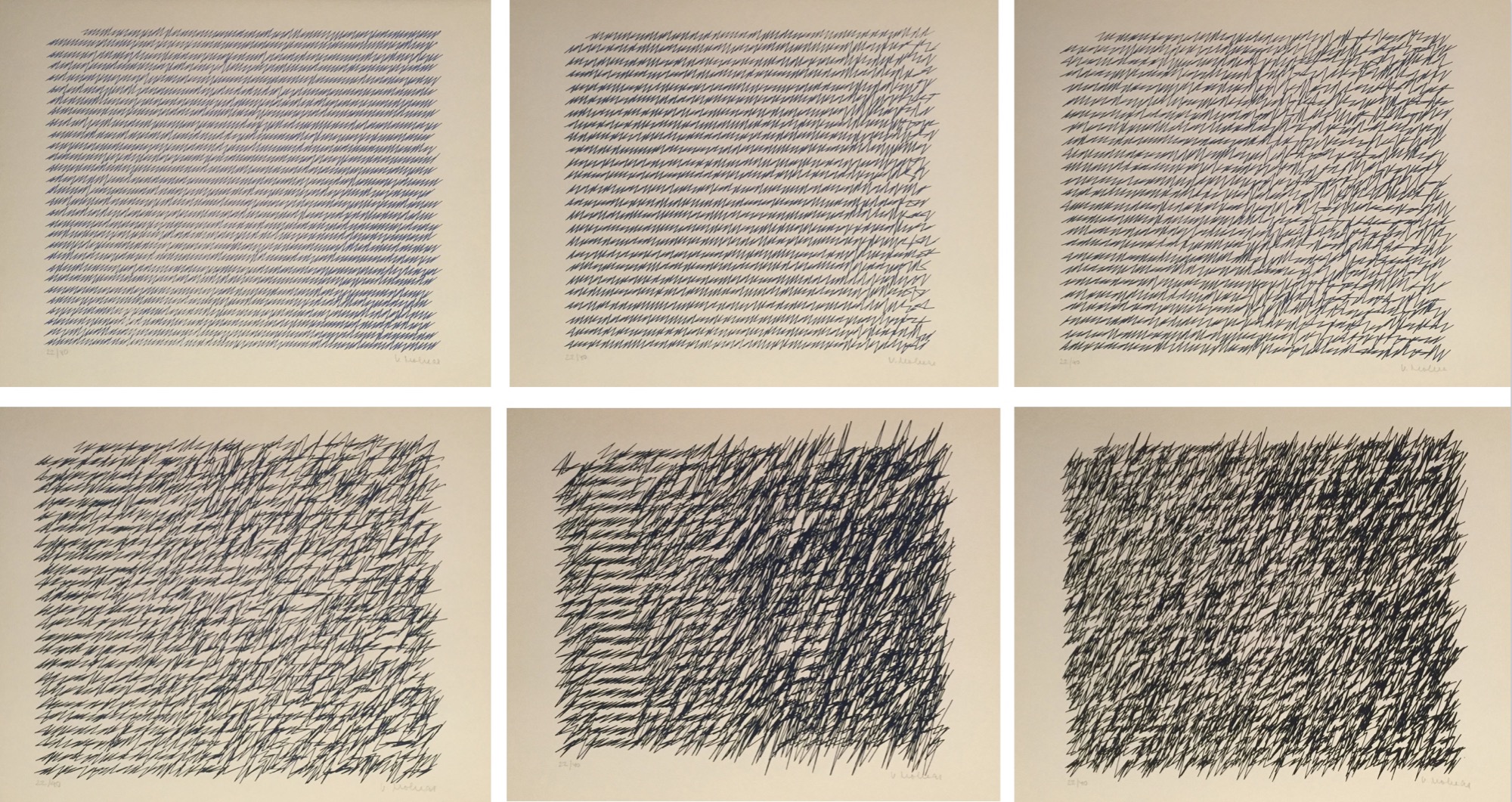

[Vera Molnar, “Lettres de ma mere”]

The associate editors who sent in this question are interested in learning what policies, if any, other journals have instituted in regard to these challenges and how they’re working, as well as what other AI-related problems journals should have policies about.

They write:

As associate editors of a philosophy journal, we face the challenge of dealing with content that we suspect was generated by AI. Just like plagiarized content, AI generated content is submitted under a false claim of authorship. Among the unique challenges posed by AI, the following two are pertinent for journal editors.

First, there is the worry of feeding material to AI while attempting to minimize its impact. To the best of our knowledge, the only available method to check for AI generated content involves websites such as GPTZero. However, using such AI detectors differs from plagiarism software in running the risk of making copyrighted material available for the purposes of AI training, which eventually aids the development of a commercial product. We wonder whether using such software under these conditions is justifiable.

Second, there is the worry of delegating decisions to an algorithm the workings of which are opaque. Unlike plagiarized texts, texts generated by AI routinely do not stand in an obvious relation of resemblance to an original. This renders it extremely difficult to verify whether an article or part of an article was AI generated; the basis for refusing to consider an article on such grounds is therefore shaky at best. We wonder whether it is problematic to refuse to publish an article solely because the likelihood of its being generated by AI passes a specific threshold (say, 90%) according to a specific website.

We would be interested to learn about best practices adopted by other journals and about issues we may have neglected to consider. We especially appreciate the thoughts of fellow philosophers as well as members of other fields facing similar problems.

— Aleks Knoks, Christopher A. Vogel, Fabienne Forster, Marco R. Schori, Mario Schärli, Máté Veres, Matthias Egg, Michael McCourt, Philipp Blum, Simone Olivadoti, Steph Rennick

Discussion and suggestions welcome.

Is there published, independently funded research on the effectiveness of “AI” detection systems? If not, journals cannot ethically rely on them. In this hype-filled space, one cannot trust assertions of effectiveness unsupported by published evidence, and there is reason to discount industry-funded research.

Even if a journal editor cannot prove that a submission was generated by a large language model, it would be perfectly reasonable to reject a submission on the ground that it has the derivative quality of LLM-generated writing.

I am not sure what would be unethical about using experimental tools as a stop-gap, especially when journals could, for example, allow for appeal.

The trouble is that (i) practically anyone in the world can submit an AI article, (ii) it costs them nothing to do so, yet offers a minute chance of prestige if their article slips through, and (iii) journals are already flooded with more work than they can handle. If journals get deluged by waves of AI-written essays, but you insist that each must be read and thrown out on the merits, I fear our already tenuous system will crumble under the weight.

It’s unethical because they are not just imperfect, but pretty much bogus.

Is this true? I have no idea but if someone with expertise would be willing to weigh in I’d really appreciate it. When I’ve played around with them they were quite good at picking out what is and isn’t AI.

As a student whose first language is not English, I write my manuscript on my own, then use AI for better sentence construction. The AI tools help a lot in consolidating my argument. Is that still unethical? I am asking as an emerging researcher and PhD candidate.

It is unethical for the same reason it is unethical to use untested drugs as a stop-gap. There is no good reason to believe that they work. The enthusiasm and supposed expertise of their creators is not sufficient reason to rely on them.

Not published or large-scale, but the Academic Conduct people at my employer have just put all the cases where students have admitted to us that they used AI through a “checker” to see how they compared with a random sample.

“A bit better than a coin-toss, but not much” is how the results were described to me.

The worry is not about the detector’s ability to catch AI-generated text (sensitivity) but about the risk of false positives. And even then it’s not just about the false positive rate, but specifically that submissions from certain groups (e.g., non-native speakers, neurodiverse people) would more often be the victim of false positives than other groups. In other words: bias.

Since these detection algorithms are pure black boxes, based on loose detection of vague linguistic patterns (low information density, use of overly grandiose adjectives, highly linear sentence structure, etc.), there’s no way for them to give any concrete proof, or even just a justification, for why they think the content is AI-generated.

I feel anyone who applies this kind of algorithm should at the very least have a solid grasp of how to benchmark and test ML models and what the difference is between sensitivity, specificity, precision, recall, etc.
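To make the difference between these metrics concrete, here is a minimal Python sketch. All numbers are made up for illustration (1,000 submissions, 5% of them AI-written, a hypothetical detector with 90% sensitivity and 90% specificity); none of them describe GPTZero or any real tool.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    true_pos: int   # AI-written, flagged as AI
    false_pos: int  # human-written, flagged as AI
    true_neg: int   # human-written, not flagged
    false_neg: int  # AI-written, not flagged


def sensitivity(c: ConfusionCounts) -> float:
    """Share of AI-written papers that get flagged (also called recall)."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ConfusionCounts) -> float:
    """Share of human-written papers that are correctly left alone."""
    return c.true_neg / (c.true_neg + c.false_pos)


def precision(c: ConfusionCounts) -> float:
    """Share of flagged papers that really are AI-written."""
    return c.true_pos / (c.true_pos + c.false_pos)


def expected_counts(n_submissions: int, ai_share: float,
                    sens: float, spec: float) -> ConfusionCounts:
    """Expected confusion counts for a detector with the given rates."""
    n_ai = round(n_submissions * ai_share)
    n_human = n_submissions - n_ai
    true_pos = round(n_ai * sens)
    true_neg = round(n_human * spec)
    return ConfusionCounts(
        true_pos=true_pos,
        false_pos=n_human - true_neg,
        true_neg=true_neg,
        false_neg=n_ai - true_pos,
    )


# Hypothetical scenario: assumed figures, not measurements of any real detector.
counts = expected_counts(n_submissions=1000, ai_share=0.05, sens=0.90, spec=0.90)
print(counts)
print(f"sensitivity = {sensitivity(counts):.2f}")
print(f"specificity = {specificity(counts):.2f}")
print(f"precision   = {precision(counts):.2f}")
```

Under these assumed numbers, 45 of the 50 AI-written papers are flagged, but so are 95 of the 950 human-written ones, so only about a third of the flagged papers are actually AI-written. A detector that sounds accurate can still produce mostly false accusations when AI-written submissions are rare.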

It’s unlikely that AI systems will reliably distinguish AI-generated from human texts. Any new detection trick will be matched by an AI generation trick.

Train your own editor and reviewer bots. /s

More seriously, the technological landscape continues to change rather rapidly. What seems likely to happen is that different journals will adopt different strategies and we will all witness the different outcomes. Of course, because things continue to change, a strategy that works at one point in time may not work at another.

If an AI generated article is able to pass peer review, then this indicates (a) a problem with the journal’s peer review system, (b) a problem with the journal’s selectivity, and/or (c) a problem with the subdiscipline (e.g., the kind of research that is deemed acceptable within that subdiscipline).

I don’t think we should retract AI-written articles that pass through peer review, though if we notice or suspect that a large number of articles in a journal or subdiscipline are AI generated, then we should definitely reconsider either the journal, the subdiscipline’s standards for scholarship, or both. Even in the most optimistic descriptions of their capabilities, at the moment AI articles can pick the low-hanging fruit. I say we let them have it! Low-hanging fruit is worth picking, after all, but I’d rather we spend human efforts on more human-unique pursuits.

Having said that, if you want to curb the number of junk submissions (AI generated or not), then there are a few possibilities worth trying. These include (1) requiring an institutional affiliation/email address for submissions and limiting the number of submissions that each institutionally affiliated account may submit to a journal in a given period of time, and (2) charging a nominal fee for submissions (literally something like $1 USD) to cut back on spam submissions.

I’m sure that there are other such ways of cutting back on spam submissions and they all have drawbacks (option 1 obviously disadvantages independent scholars and option 2 disadvantages those without access to credit/debit card information) but these drawbacks may be worth it if AI articles really do become a serious problem.

You forgot case (d) the AI is just as competent as the humans whose papers pass peer review.

I’m sorry, Eric, but I’m a large language model. I cannot make claims about my superiority to humans.

In all honesty, if we do get to a point where (d) is true then I think we, like chess grandmasters, will have to have a think about what the point of our game is. Whatever it is that philosophy is or is about, so long as it’s not a purely aesthetic and narcissistic project, we should be worried about the day we can offload that work to non-humans.

If computers can do philosophy as well as, or better than, we can, then either we admit that we’re just philoso-speciesists, that we enjoy inferior but human-made philosophy, or we voluntarily hang up our hats and let computers do our philosophizing for us. At least for now, though, I think (a) – (c) are the more likely.

Scientists and mathematicians don’t take that position. They are eagerly employing AI to solve problems that they can’t solve, or to solve them faster. They don’t fear AI putting them out of business.

And the analogy with chess isn’t quite right. Grandmasters haven’t abandoned chess or thought of their chess as “inferior”. Moreover, human-computer chess teams (“centaurs”) are thriving, and exploring entirely new approaches to chess.

Philosophy might benefit greatly from AI. As a whole, analytic philosophy is a chaotic mess of tiny shards of logic that seem utterly disconnected from each other, so the whole field looks like grass counting. Perhaps AI could put some systematicity into that mess.

“They don’t fear AI putting them out of business.”

Why not?

Because they are actively using it to solve their present problems, many of which are too difficult for humans to solve, often because of impracticalities. (Lots of theorem-proving is just low-level searching through a vast landscape of possibilities and specific cases, same with science, and AI speeds that up considerably.) Scientists and mathematicians don’t fear running out of problems.

But if the AI is able to solve problems that are too difficult for humans, then it is presumably even easier (and more efficient) for that AI to solve problems that are not too difficult for humans. Even if there are infinitely many problems to solve, the fact that AI can solve them more readily than humans can, and for far less money (if any) than a professor is paid, seems to constitute a real threat.

An AI is like a power saw. It’s faster and more powerful than a hand saw. Neither saw understands why you are interested in building a house.

Perhaps that’s true. But show me a mass-produced power saw that can produce an entire house within seconds after some random person brings it to the job site and presses a few buttons on it, and I’ll show you a big reason for construction workers and other tradesmen to be very worried about their line of work.

“Whatever it is that philosophy is or is about, so long as it’s not a purely aesthetic and narcissistic project, we should be worried about the day we can offload that work to non-humans.”

I would think that if it *is* a purely aesthetic and narcissistic project, then AI being able to do it as well would be worrying. But if it’s a project that is intended to be valuable in other ways, by generating truth or understanding or whatever, then we should be welcoming the time when AI can help us do it better, or perhaps even do it better than us.

Hard work. I found flaws in every article I suspected of being AI generated, such as those from paper mills: a title so confusing that you cannot understand its contribution, conclusions that are supported by undefined metrics, an abstract that says nothing of value about the paper’s contribution, multiple data analysis techniques that are not synthesized, and, worst of all, no description of the dataset behind the nice-looking generated figures.

Two points: (i) philosophy journals are slow to issue verdicts, so I’m surprised to see effort expended on this issue instead of, say, speeding up other processes; and (ii) if a journal doesn’t want AI-generated text in its pages, it should simply add another tick box, asking authors to confirm there is no AI-generated text in the submission. Frankly, there are bigger issues that journals face…

Maybe there are some current legal norms that judge that LLM-produced and human-directed (via prompts) text isn’t authored by the human, but why does that matter that much? (Is there a big intellectual property contract thing? Like OpenAI is unwittingly signing away IP when a philosopher gets an LLM paper accepted at a journal?) I’m very lost as to what makes an LLM-produced and human-directed paper something journals should filter out. If the philosophical content is sufficiently good, why should it being LLM-produced and human-directed disqualify it? Are journals scared that the referee quality is so poor that a bunch of bad LLM papers will get accepted? Is it just reactionary parochialism? Can someone please tell me what the motivation is for screening out LLM papers?

Because journal editors and peer reviewers are generally unpaid – it is done as a service role. So if the volume of papers becomes very large, you need a way of filtering what the reviewers spend time on. Or you could give up filtering, but that’s probably a fast track to zero value.

Why filter by whether AI was involved in generation or not? We should develop some better ways to do quick filters by simple estimates of quality (whatever “quality” means), not by who created it. I presume that no one would suggest filtering out all papers by authors from developing countries, just because authors from developing countries are a quickly rising fraction of new submissions and the system was originally designed to function only with the relatively smaller number of submissions by authors from wealthy countries.

Not sure I understand (all of) the motivations behind this.

If a paper “written by” an LLM (whatever precisely that means—something like “a paper in the production of which an LLM figured in some inappropriate way”) is accepted for publication at Journal X, this seems to entail, at a minimum, that the paper was good enough by Journal X’s lights (or the lights of reviewers and operative members of Journal X’s editorial team). Either Journal X’s standards are appropriately high, or they are not. If they are, then I fail to see the problem with the publication of a good LLM-written paper—at least from a scholarly and moral, if not legal, point of view. On the other hand, if the paper was accepted only because Journal X’s standards are too low, the most fundamental and pressing problem is with the Journal’s standards. To an extent, the fear—if I’m correctly interpreting things—that LLM-written papers will slip through would seem to indicate a concern that the Journal’s standards are too low.

Perhaps the underlying concern is instead not with the publication of shoddy work but the publication of work by authors (LLMs) who don’t, in some sense, *deserve* inclusion in a journal. But what other than some kind of anthropocentrism could motivate that? If the goal of a journal is simply to curate and distribute good scholarly work, as it should be, then facts about an author’s biography, so to speak, ought not to factor into the decision to publish or reject their work.

Anyhow, I just find myself somewhat puzzled by what seems to me to be a knee-jerk reaction to the thought of decent work being produced by an LLM. Am I just meant to feel creeped out by this possibility and not engage in further reflection on the matter?

The stipulation in the post above is that authorship is falsely claimed by a submitter, which implies that the submission does not feature an appropriate indication of the contribution of AI. This is largely independent of the question whether AI-generated research should or should not be published at all (which is a valid question, to be sure). And I can imagine sensible ways of indicating that AI contributed to an article, such as listing an LLM as a (co-)author or citing it as a source together with the prompt used to generate the text.

I want to emphasise that claims of authorship are important. For one, publications are the way of judging the academic quality of someone’s work. As such, they play a large role in the decision making process for jobs, promotions, etc. Such judgments assume that the papers considered are the work of the person who claims authorship for them. Journals play, at least implicitly, a crucial role in legitimising papers as works of their authors. Given the stakes, they should carefully consider the methods they employ.

I think there might be two different types of concerns here. One is that authors use AI to generate the non-creative (for lack of a better term) part of a text: introduction, literature review, conclusion, and maybe some other bits of text here and there. If an author uses an AI to write these parts but still writes the creative part of the text (the genuine contribution part) on their own, I don’t think it’s such a big deal that the paper is published and the author gets all the credit.

The other concern is that authors use AI for the creative part and take credit for that. I agree that authorship is important in these cases and that no one should get credit for a genuine contribution written by an AI. But this scenario, in my view, is far-fetched, even now with recent LLM progress. LLMs are very impressive in their command of natural language and ability to summarize lots of information. But we have seen very little evidence that they are capable of creative ideas at the level of academic contribution.

There is also the issue of authorial responsibility. Certainly in the sciences, an author is expected to answer questions, etc., if concerns arise (and they sometimes do). This, I believe, is one reason why we are reluctant to allow AI to be an author (or even a co-author). AI cannot be held accountable, as we can and do hold scientists accountable.

If a seasoned professor lists their lab assistants as authors, the onus of responsibility falls on the professor, not the lab assistants. The assistants may be allowed to field questions, but that is at the discretion of the primary author. I see no reason that AI-assisted writing would be any different.

That is not true. I have published on retraction in science. In retraction notices others besides the PI are blamed for the misconduct … manipulated images, or fabricated data. And the PI often does not write (or sign) the retraction notice alone. The basic understanding is that every (human) contributor/author is to be held accountable.

Alright, I’ll concede that. AI should not be listed as an author because it cannot be held accountable. On the other hand, that case only comes up if the questions that the PI has the primary responsibility of fielding bring malfeasance to light.

Further, if you (rightly) want “every (human) author to be held accountable” but you object because “AI cannot be held accountable,” then you must also hold that AI is human. I cannot agree there.

AI is a tool. If a study requires the use of a machine and the machine malfunctions or has the proverbial sand in the gears, we do not blame the machine. We blame the operator. There is no more need for an AI to sign a retraction (should one become necessary) than there is a pencil, computer, or MRI to do so.

Sorry. You must have misunderstood me. I do not think AI is human … we agree there.

Then how can you say that AI must be held responsible for the contents of a paper for which it is used as a tool when we do so for no other non-human entity?

I meant AI cannot be held responsible… it lacks the requisite qualities. Sorry I was not more clear about this.

Faculty members at fancy schools at least used to commonly have secretaries or graduate assistants do things for them like fill out their citations. I don’t remember journals objecting to that kind of ministerial work being performed by non-authors. It’s interesting to me that use of AI for that sort of help by those without access to free (to them) work is now causing such a kerfuffle.

If bots can actually write entire papers that have seemed good enough to referees to get into reputable journals, then I agree with the comments above that it might be time to hang up the idea of “professional” philosophy publications. After all, people can’t beat computers at chess or Jeopardy anymore either. Do we want to understand the world or is it more important to get famous?

And yet people still play chess professionally.

Sure. What’s the harm in it? People continued to have foot races after the invention of bicycles and cars. I see no reason why people shouldn’t continue to write philosophy papers–even if the time comes when machines are better at it. But if and when that time should come, those interested in learning important philosophical truths about the world might have reason to look elsewhere than at human-written material.

Kind of sad, but c’est la vie.

The practice of using uncredited labour is, IMO, wrong.

Having someone else fill in your citations seems pretty flaming irresponsible to me, too. The author is the one with access to the original information, and putting it into a normal format as you write is not flaming hard. Just do it. If you need someone to fix it for you after, fine. But having them plug it all in for you? Please. You’re an adult with a PhD.

I’ve never had access to that sort of assistance myself. I just note that journals have never seemed to care much about it.

I have long used computer software to do this for me. I maintain a BibTeX database with references I might cite, rely on the software to correctly fill out the information, and download a style file to ensure that this is done in a way that fits the journal’s format. I occasionally do spot checks, but otherwise offload this labor entirely. Do you think this is “pretty flaming irresponsible”? After all, I have a PhD, so I should be able to spend a few hours doing this all by hand.

Or is the worry that you think this is menial labor that no person should hire some other person to do for them, because it is demeaning to the dignity of humanity?

What I have in mind is the practice of, e.g., quoting a chunk of Kant, inserting a footnote, and leaving it blank, or writing “Kant,” or “Kant, Critique of Judgement” and then having someone else chase it all down, making sure editions match, etc. In using citation software, you keep track of the bibliographic information for the sources you use, so this isn’t what you’re doing.

As a responsible author, you may well think this is some kind of straw man. But having done some freelance academic copy editing, I can tell you that this kind of thing is _very_ common, particularly among the set of well-known philosophers with regular access to grant money.

I once encountered a chapter by a very well-known political philosopher that quoted tons but gave no citation information whatsoever, and likewise discussed lots of published work without quoting it, and gave no citation information (e.g. he’d write “Rawls argues that…” and that would be it). He expected someone–me–to chase it all down for him. I sent it back untouched.

That is pretty flaming irresponsible.

TL;DR: Worrying about keeping AI out of scholarship is elitist, capitalist, and fruitless conservative sheep dip.

I think this is all currently moot. AI is not capable of writing anything that would pass peer review without the intervention of a human author at this time. That is, no AI can, from either a single prompt or a series of prompts write something that would pass as an academic article, book, or other publication. Therefore AI is simply a tool by which scholars are able to produce their own work.

I see in comments below that people worry about “just anybody” being able to submit an AI-written article. Well, “just anybody” can submit one they wrote themselves. It is simply a matter of finding the time to write one. But the system (writ large) is designed to keep academia in an elitist position where it’s not something “just anyone” can do. I say if Joe the plumber wants to write an academic article, let him submit it and let it be judged double-blind on its merits.

“But removing the elitism and allowing AI-generated work will create a deluge of papers and the system will collapse!” Let it, say I. Scholars get very little recompense for their work while publishers swim in profits like Scrooge McDuck. And a large part of those profits come from institutions to which scholars must be affiliated to get reasonably priced (for the scholar) access to the works of others for them to study and refer back to. All for the sake of building wealth. That’s right. I said it. Affiliated scholarship is for the sake of building wealth, not the generation of knowledge. Perhaps it had been for the sake of the latter once, but no more. So much for collegiate.

In short, we should focus less on keeping AI-generated works out of scholarship and more on how scholars can use AI successfully. I think, for instance, of the movie Finding Forrester. In this movie Sean Connery’s character mentors a young black author. One of the assignments he sets is to copy the first paragraph of another work and then see where his own thoughts take him. The young man uses this tool poorly and is accused of plagiarism. However, the tool, if it had been used properly, would have been a useful one. Such is AI.

It’s ridiculous how editors rely on unreliable detectors such as GPTZero, which has a real risk of discriminating against certain groups of academics (non-native speakers, neurodivergent people, etc.). Especially when the alternative is so incredibly simple: just arrange a 30-minute video call with the authors of papers you suspect are AI-generated. Let them talk about their research, see if they understand what they submitted.

It wouldn’t cost much time at all to just *talk* to people and treat them with human dignity. Rather than hand them over to an algorithm for opaque judgment.

You can’t be serious. This couldn’t possibly scale, and even for just a few cases, it isn’t worth it. Journals have a surfeit of excellent, human-written submissions.

I read hundreds of robot essays a year. It’s incredibly alienating and depressing to be interacting with a robot who doesn’t care about content or feedback, rather than students. And, indeed, with students who are constantly lying to me.

I enjoy refereeing, because it makes me part of a disciplinary conversation, typically with people I know. But if I start to see lots of AI work, that will suck the joy out of it and I will simply stop refereeing. I already do more than my fair share of conversing with robots for work. I refuse to do more.

One other option might be to use editorial discretion and require some submissions to complete an additional form providing more information on if/where/how AI was used for an article. This could effectively serve as a “recaptcha” style check that requires authors to affirm their contributions in writing. If the worry is really people submitting wholly AI-generated texts with no genuine effort at scholarship, I suspect they are likely to just try a different journal instead. More broadly, many scientific journals use the “desk reject” option quite liberally (for better or worse), and this is based on the editor’s best judgment of whether the submission is worthy of peer review.

I don’t think I understand the proposal, Bsterner. Why wouldn’t the people who use AI extensively in writing their papers just deny that they have done so? What would they have to lose? Given how the technology works, it would be very difficult to prove definitively that any submission was created with AI if the author denied it. And as we can see even in this thread, if any group of editors did allege that a given submission was AI generated despite the submitter’s affirmation to the contrary, others will jump in to object that the best AI detection methods are more or less bogus, so that the accusation is groundless and hence unfair.

So why wouldn’t the people who use AI just lie about it, making the questionnaire a further unhelpful formality?

I’m assuming that the people submitting manuscripts using AI have different motivations. What I’ve proposed won’t help with people who have the energy and intelligence to lie well enough to get past peer review. However, I suspect there’s a much larger population of people who are (or will soon start) spamming journals. Some of these individuals want to look good with lots of publications and citations without receiving serious scrutiny. See for example some recent work by the publishing with integrity group (https://x.com/fake_journals/status/1802151185480307132). Others are likely just spamming in case they get lucky. The idea behind my suggestion was to find ways to put small hurdles in the way of these latter two groups that give the perception of heightened scrutiny/accountability and effort.

Okay, but doesn’t this perception of heightened scrutiny or accountability depend on a serious prospect of the status-seekers and spammers getting exposed and taking a reputational hit for making the attempt? And how is this supposed to happen? That’s what I don’t get.

Philosophers may wish to learn more about how AI is changing mathematics and science. Here are just three (of many, many) examples:

Mathematics:

https://www.quantamagazine.org/deepmind-machine-learning-becomes-a-mathematical-collaborator-20220215/

Cosmology:

https://www.quantamagazine.org/ai-starts-to-sift-through-string-theorys-near-endless-possibilities-20240423/

Biology:

https://www.quantamagazine.org/how-ai-revolutionized-protein-science-but-didnt-end-it-20240626/

Sure, but… Those aren’t large language models, are they? And that’s what we’re talking about using here.

In mathematics they definitely *want* to be using large language models to help translate automated formal proofs into human-readable forms. If we think that it’s good for the work to be produced, then we should want more ways to do this work more effectively.

The OP talks about “AI generated content”, not LLMs. Even so, there’s no reason to think philosophers would only use LLMs, nor to think that other disciplines can’t use LLMs. Moreover, the underlying architectures of all these AIs are very similar. And philosophers are more likely to be interested in AIs that do reasoning, perhaps in symbolic non-natural language forms. So the problems associated with AI use generalize across fields.