New “Meta-Ranking” of Philosophy Journals

A new article in Synthese presents two new rankings of philosophy journals—a survey ranking and a composite of several existing rankings—and discusses their strengths and weaknesses.

Emmanuelle Moureaux, “Forest of Numbers”

The paper, “Ranking philosophy journals: a meta-ranking and a new survey ranking,” is by Boudewijn de Bruin (Groningen, Gothenburg).

While philosophers seem to care a lot about rankings, de Bruin says, we could stand to pay more attention to how these rankings are determined:

We think a lot about our own field—more, perhaps, than people working in other academic disciplines. This may be unsurprising, because philosophy, unlike many other fields, has an immense array of tools that facilitate such thinking. But when it comes to journal rankings, we are far less self-reflective than most other scholars. In many fields, journal rankings are published in the best journals, and are continuously evaluated, criticized, revised, and regularly updated. Philosophy, by contrast, has no ranking rigorously developed on the basis of up-to-date bibliometric and scientometric conventions.

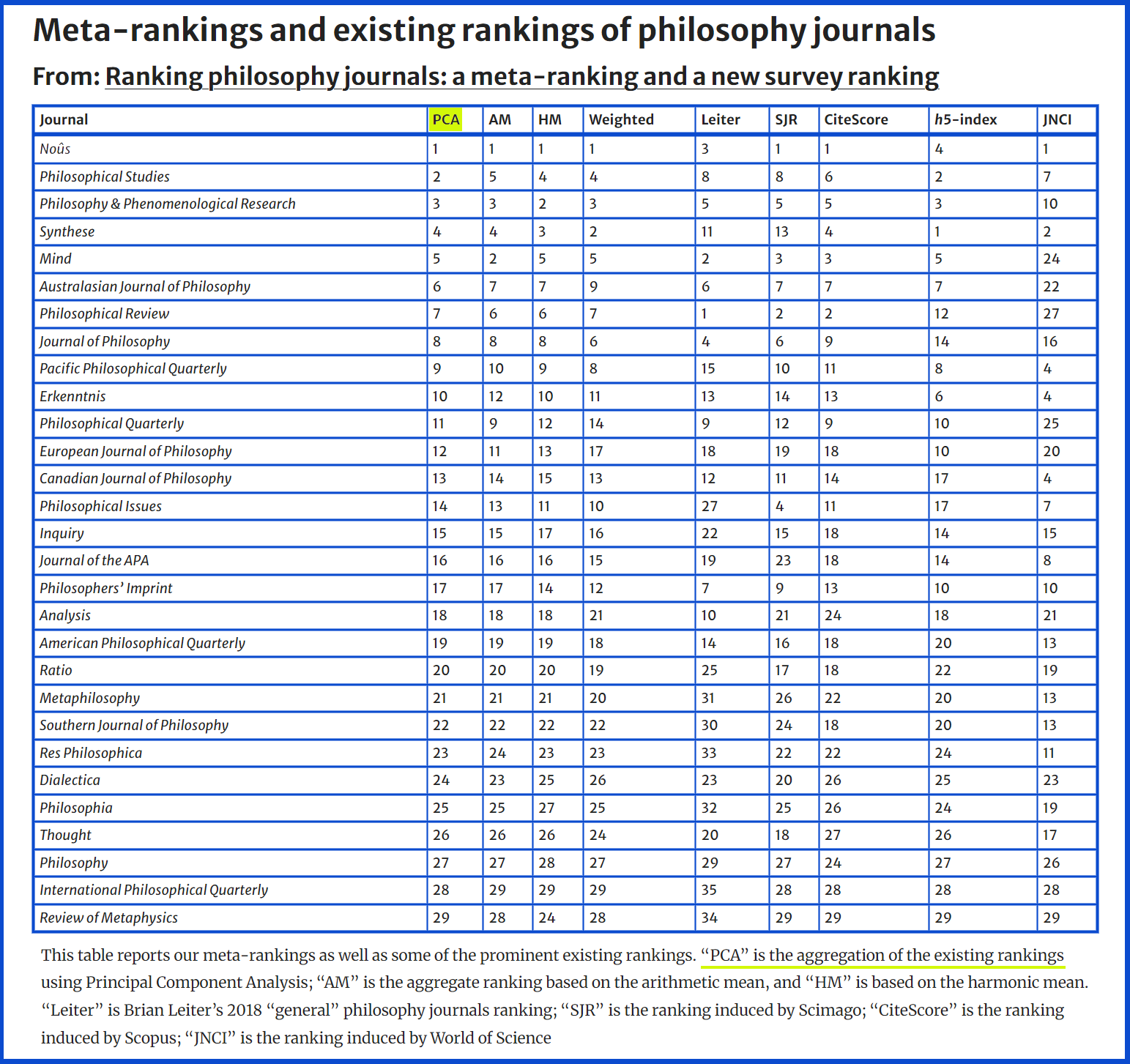

To address this, de Bruin compiled a meta-ranking of philosophy journals that combines survey data collected by Brian Leiter (“general” philosophy journals, 2018) with data from Scopus and Scimago (2019), Google Scholar (collected using Publish or Perish, data collection in 2021), Google Scholar (2019), and Web of Science (2019).

Here they are:

[from Boudewijn de Bruin, “Ranking philosophy journals: a meta-ranking and a new survey ranking”]

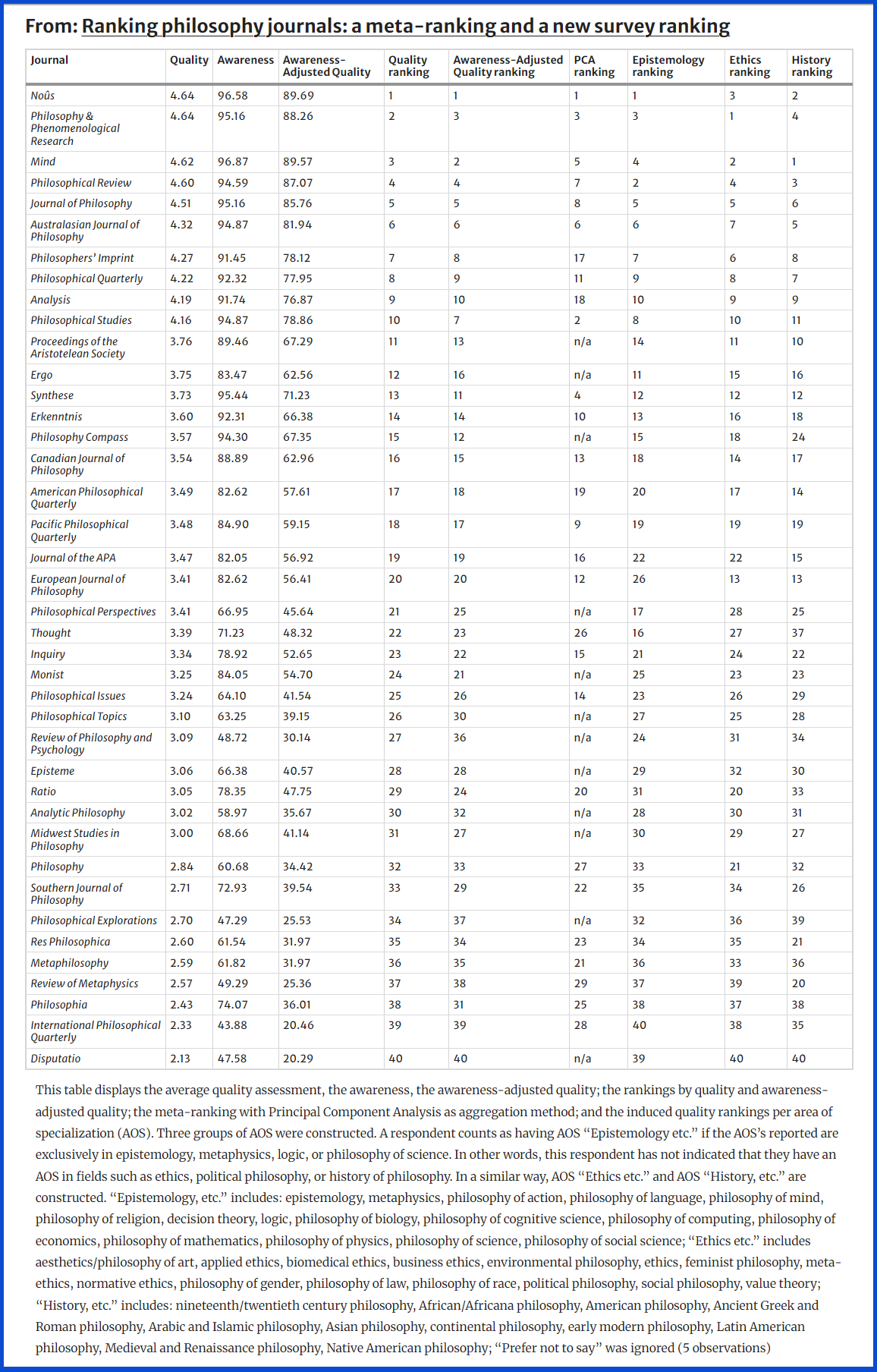

The survey started with a brief explanation. Participants were informed that they were asked to rate journals on a scale from 1 (“low quality”) to 5 (“high quality”), and that we were interested in their “personal assessment of the journal’s quality,” and not in their assessment of “the journal’s reputation in the philosophy community.” Furthermore, it was stated that if a participant is “insufficiently familiar with the journal to assess its quality,” they should select the sixth option, “Unfamiliar with journal.” It was also made clear that the survey was fully anonymous, and that data will be retrieved, stored, processed, and analyzed in conformance with all applicable rules and regulations, and that participants could voluntarily cease cooperation at any stage. Then respondents were asked to assess the quality of all journals. The journals appeared in random order, which is the received strategy to control for decreasing interest among participants and for fatigue bias. Subsequently, participants were asked to provide information about their affiliation with journals (editorial board member, reviewer/referee, author), and a number of demographic questions were asked (gender, age, ethnicity/race, country of residence, area of specialization, number of refereed publications, etc.). An open question with space for comments concluded the survey.
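To make the scoring concrete, here is a minimal sketch of how ratings of this kind might be averaged into a ranking. It assumes that “Unfamiliar with journal” answers are simply left out of a journal's average; the paper may handle them differently. The journal names, the sample responses, and the function names `average_quality` and `rank_by_quality` are all hypothetical.

```python
from statistics import mean

# Assumption: None stands in for the sixth option, "Unfamiliar with journal".
UNFAMILIAR = None


def average_quality(responses):
    """Return {journal: mean rating}, skipping 'unfamiliar' answers."""
    ratings = {}
    for response in responses:
        for journal, score in response.items():
            if score is UNFAMILIAR:
                continue  # unfamiliar respondents do not affect the average
            ratings.setdefault(journal, []).append(score)
    return {journal: mean(scores) for journal, scores in ratings.items()}


def rank_by_quality(responses):
    """Return journal names ordered from highest to lowest mean rating."""
    averages = average_quality(responses)
    return sorted(averages, key=lambda journal: averages[journal], reverse=True)


# Hypothetical responses on the 1 ("low quality") to 5 ("high quality") scale.
responses = [
    {"Journal A": 5, "Journal B": 3, "Journal C": None},
    {"Journal A": 4, "Journal B": None, "Journal C": 2},
    {"Journal A": 5, "Journal B": 4, "Journal C": 3},
]

print(rank_by_quality(responses))
```

Under this assumption, a journal rated by few respondents can still rank highly, which is one reason to look at the averages themselves and not only at rank position.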

Here are the results of de Bruin’s own survey:

[from Boudewijn de Bruin, “Ranking philosophy journals: a meta-ranking and a new survey ranking”]

“If we consider not the mere position in the ranking, but the absolute values of the average quality that respondents assign to the individual journals, we see that there is not a lot of variation between the perceived quality of the top 10 journals.”

“The journals that respondents had to rank do not represent the whole spectrum of philosophy journals.”

“There is no indication that the perception of journal quality depends on gender, ethnicity, or country of origin.”

And about the two kinds of ranking:

“Our meta-ranking, rather than our survey-based ranking, may prove most relevant to the philosophical community…. [A] meta-ranking is much less prone to be influenced by bias.”

“Philosophy is highly diverse when it comes to methods and traditions, but methods and traditions are unevenly distributed across journals.”

“Further meta-rankings should be developed for a much wider range of journals and subfields of philosophy, and surveys should cover a much larger variety of philosophers from subdisciplines (and be more diverse on other relevant dimensions just as well).”

de Bruin also discusses limitations and criticisms of the rankings, as well as guidance and cautions regarding the use of rankings generally. The full paper is here.

(Thanks to several readers for letting me know about de Bruin’s article.)

Rankings are, by and large, a neoliberal con.

I love how the “progressive” position these days across the board is: “standards of quality are bad.” Definitely a winning strategy, comrade!

Rankings and standards of quality aren’t the same thing, hth

It is a shame ignorant comments like this are celebrated. Anyone who makes such an obvious strawman, and anyone who gives it a thumbs-up, does not meet the minimum standard of quality of critical thinking to be taken seriously to speak of standards of quality. No one mentioned anything about being ‘progressive’. No one mentioned anything about ‘winning strategy’ or comradery. The position you mention exists only in your imagination. If you cared about preserving standards of quality, you’d reflect on the low standard of quality of your own thinking and try to do better, but you and I both know you won’t because this has nothing to do with protecting standards of quality. It is about maintaining a status quo that allows people like you to live the delusion that they are intelligent critical thinkers despite not having the skills learned in the early days of an intro-level informal logic class.

Sorry, I speak for the silent majority of critical thinking dropouts in our profession.

I mean, he did call rankings a “neoliberal con.”

“You’ve been with all the professors / And they all like your looks / With great lawyers you’ve discussed lepers and crooks / You’ve been through all of F. Scott Fitzgerald’s books / You’re very well read, it’s well known / You know what is happening, but you don’t know what it is / Do you, Mister Jones?” –seems to apply here

Uh-oh. Someone is going to receive an email of appreciation from an aspiring student in a third-world country this week. The email will tell us that the third-world child was lost before they saw the ranking. But then the ranking came, and they learned how to navigate the first world (which has been the first world continuously since the rankings began).

As someone from a third world country, I don’t appreciate the way you downplay the validity of our experience.

“Our meta-ranking, rather than our survey-based ranking, may prove most relevant to the philosophical community…. [A] meta-ranking is much less prone to be influenced by bias.”

Relevant for what purpose, though?

Admittedly, it is not quite clear to me what journal rankings are for at all. But I do know what I and many others in my position use them for, exclusively: where do I send my stuff to most impress hiring and tenure committees? Tenured folk may have similar concerns about peer evaluation, I take it.

To answer such questions, a methodology that is essentially vibes-based (such as Leiter’s or de Bruin’s own survey) seems to be most apt. I can’t imagine any other kind of data that could convince any such committee to value Synthese over PhilReview.

The current, biased rankings are relevant to the community, because many in the community want to know what the biases of the community are.

So perhaps de Bruin has a different purpose in mind? Or perhaps his point is a normative one: we *ought* to be paying attention to such meta-rankings rather than the vibes-based survey rankings. Rather than tracking biases, we ought to reduce biases.

If so, this goal would be complicated by the fact that Leiter’s survey reinforces existing biases as much as it tracks them. The only way out may be a profession-wide agreement to drop vibes-based rankings. The danger of this move would be that biases remain but information about them disappears, so that knowledge of the vibes again becomes accessible only to those sufficiently embedded in the higher echelons of the profession.

My takeaway was that the vibes-based surveys are more useful for e.g. judging where you should send your papers if you want to impress hiring/promotion committees. But rankings like the metaranking are a useful indication of how much a journal is doing for the profession. Thought of that way I think the ranking we get from the metaranking makes a lot of sense. Like, Phil Review may publish the highest quality articles. But if it suddenly just closed down this wouldn’t be as impactful for the profession as if e.g. Nous, Phil Studies, or Synthese suddenly stopped publishing things.

That’s a good way to put it, but I’m not sure these kinds of metrics are going to capture service to the discipline.

A huge part of what Phil Review does is publish book reviews. Mind is somewhat similar. And I think they are useful – maybe that’s wrong, but it seems to me that they are. But they never get citations. A book review with 10 Web of Science citations would basically be a unicorn. I think that’s how you can get what look like absurd results like a measure of journals that puts

Book symposia, like PPR runs a lot of, do a little better, but still get very few citations.

So if most of your publications are book reviews or book symposia, you’ll do badly on citation-based metrics, so badly that Mind and Phil Review somehow do worse on some metric than Dialectica. But such a journal could still be very helpful.

I remember answering the questionnaire and thinking, good, this is not about my perception of reputation but about my actual experiences with these journals as a reader, author or reviewer. But I’m afraid many still gave answers despite very little direct familiarity with a given journal.

Is that a magic eye image?

I think we should cut to the chase and start ranking people in the departments, both within their departments (particular ranking) and overall within the profession. Once done, we can then run a really good fantasy league based on annual output of articles weighed against the meta-ranking of journals in which they appear (say Phil Review is 10 points, Philosophia 2, Derrida Today -7, and so on). The fantasy league winner gets a private dinner with the highest-ranked philosopher at the Pacific APA, their autographed photograph, and an annual subscription to the Journal of Controversial Ideas.

Weirdly, in economics there are some individual rankings.

https://ideas.repec.org/top/top.person.all.html

One can also run Google Scholar for philosophers based on citations, though I guess it wouldn’t be as good. But I was thinking more of a meta-ranking combining reputational ranking by peers with placement of advisees and a climate/diversity survey by current students, so the ranking would be like chameleon eyes: forward-, backward-, and sideways-looking.