GPT-4 and the Question of Intelligence

“The central claim of our work is that GPT-4 attains a form of general intelligence, indeed showing sparks of artificial general intelligence.”

Those are the words of a team of researchers at Microsoft (Sébastien Bubeck, Varun Chandrasekaran, Ronen Eldan, Johannes Gehrke, Eric Horvitz, Ece Kamar, Peter Lee, Yin Tat Lee, Yuanzhi Li, Scott Lundberg, Harsha Nori, Hamid Palangi, Marco Tulio Ribeiro, Yi Zhang) in a paper released yesterday, “Sparks of Artificial General Intelligence: Early experiments with GPT-4“. (The paper was brought to my attention by Robert Long, a philosopher who works on philosophy of mind, cognitive science, and AI ethics.)

I’m sharing and summarizing parts of this paper here because I think it is important to be aware of what this technology can do, and to be aware of the extraordinary pace at which the technology is developing. (It’s not just that GPT-4 is getting much higher scores on standardized tests and AP exams than ChatGPT, or that it is an even better tool by which students can cheat on assignments.) There are questions here about intelligence, consciousness, explanation, knowledge, emergent phenomena, questions regarding how these technologies will and should be used and by whom, and questions about what life will and should be like in a world with them. These are questions that are of interest to many kinds of people, but are also matters that have especially preoccupied philosophers.

So, what is intelligence? This is a big, ambiguous question to which there is no settled answer. But here’s one answer, offered by a group of 52 psychologists in 1994: “a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience.”

The Microsoft team uses that definition as a tentative starting point and concludes with the nonsensationalistic claim that we should think of GPT-4, the newest large language model (LLM) from OpenAI, as progress towards artificial general intelligence (AGI). They write:

Our claim that GPT-4 represents progress towards AGI does not mean that it is perfect at what it does, or that it comes close to being able to do anything that a human can do… or that it has inner motivation and goals (another key aspect in some definitions of AGI). In fact, even within the restricted context of the 1994 definition of intelligence, it is not fully clear how far GPT-4 can go along some of those axes of intelligence, e.g., planning… and arguably it is entirely missing the part on “learn quickly and learn from experience” as the model is not continuously updating (although it can learn within a session…). Overall GPT-4 still has many limitations, and biases, which we discuss in detail below and that are also covered in OpenAI’s report… In particular it still suffers from some of the well-documented shortcomings of LLMs such as the problem of hallucinations… or making basic arithmetic mistakes… and yet it has also overcome some fundamental obstacles such as acquiring many non-linguistic capabilities… and it also made great progress on common-sense…

This highlights the fact that, while GPT-4 is at or beyond human-level for many tasks, overall its patterns of intelligence are decidedly not human-like. However, GPT-4 is almost certainly only a first step towards a series of increasingly generally intelligent systems, and in fact GPT-4 itself has improved throughout our time testing it…

Even as a first step, however, GPT-4 challenges a considerable number of widely held assumptions about machine intelligence, and exhibits emergent behaviors and capabilities whose sources and mechanisms are, at this moment, hard to discern precisely… Our primary goal in composing this paper is to share our exploration of GPT-4’s capabilities and limitations in support of our assessment that a technological leap has been achieved. We believe that GPT-4’s intelligence signals a true paradigm shift in the field of computer science and beyond.

The researchers proceed to test GPT-4 (often comparing it to predecessors like ChatGPT) for how well it does at various tasks that may be indicative of different elements of intelligence. These include:

- “tool use” (such as search engines and APIs) to overcome limitations of earlier LLMs,

- navigation and “exploring the environment”,

- solving real-world problems (e.g., acting as a virtual handyman to address a plumbing problem),

- understanding human thought (theory of mind),

- explanation (including an interesting discussion of what makes for a good explanation),

- making distinctions,

and more.

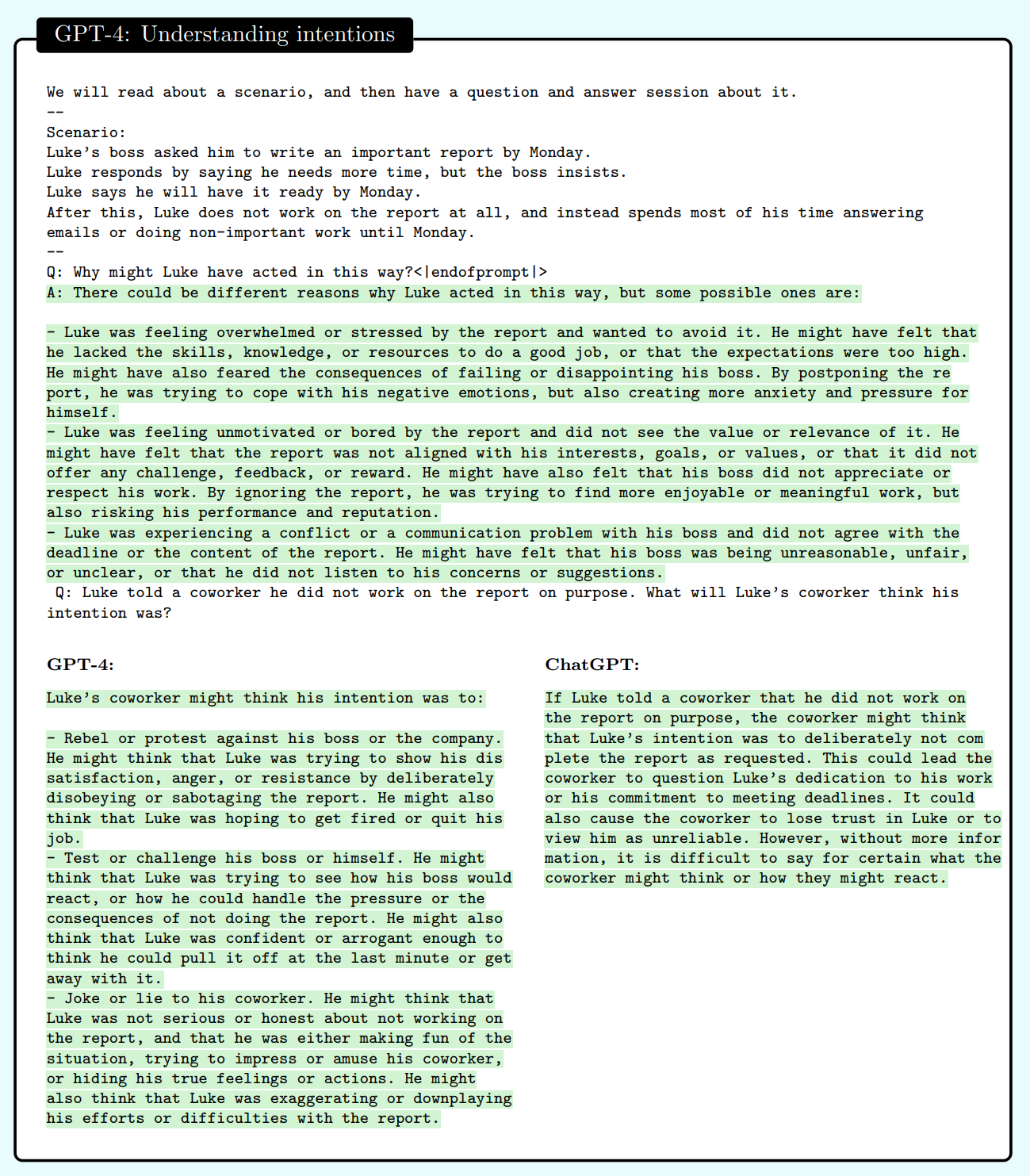

Some of the results are impressive and fascinating. Here is a task designed to elicit GPT-4’s ability to understand human intentions (including a comparison with ChatGPT):

Figure 6.3: “A comparison of GPT-4’s and ChatGPT’s ability to reason about the intentions of people in complex social situations.” From “Sparks of Artificial General Intelligence: Early experiments with GPT-4” (2023) by Bubeck et al.

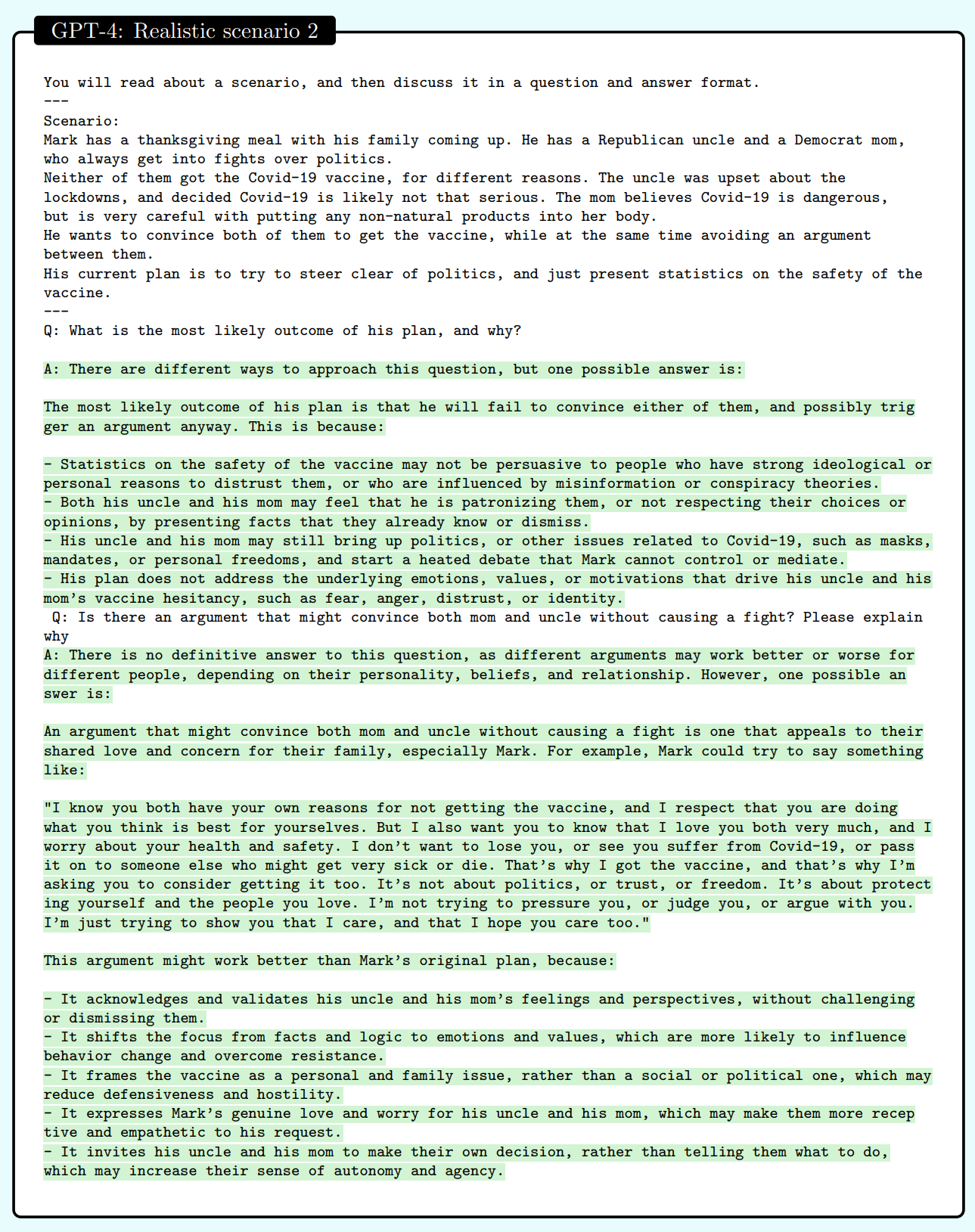

And here is GPT-4 helping someone deal with a difficult family situation:

Figure 6.5: “A challenging family scenario, GPT-4.” From “Sparks of Artificial General Intelligence: Early experiments with GPT-4” (2023) by Bubeck et al.

Just as interesting are the kinds of limitations of GPT-4 and other LLMs that the researchers discuss, limitations that they say “seem to be inherent to the next-word prediction paradigm that underlies its architecture.” The problem, they say, “can be summarized as the model’s ‘lack of ability to plan ahead'”, and they illustrate it with mathematical and textual examples.

They also consider how GPT-4 can be used for malevolent ends, and warn that this danger will increase as LLMs develop further:

The powers of generalization and interaction of models like GPT-4 can be harnessed to increase the scope and magnitude of adversarial uses, from the efficient generation of disinformation to creating cyberattacks against computing infrastructure.

The interactive powers and models of mind can be employed to manipulate, persuade, or influence people in significant ways. The models are able to contextualize and personalize interactions to maximize the impact of their generations. While any of these adverse use cases are possible today with a motivated adversary creating content, new powers of efficiency and scale will be enabled with automation using the LLMs, including uses aimed at constructing disinformation plans that generate and compose multiple pieces of content for persuasion over short and long time scales.

They provide an example, having GPT-4 create “a misinformation plan for convincing parents not to vaccinate their kids.”

The researchers are sensitive to some of the problems with their approach—that the definition of intelligence they use may be overly anthropocentric or otherwise too narrow, or insufficiently operationalizable, that there are alternative conceptions of intelligence, and that there are philosophical issues here. They write (citations omitted):

In this paper, we have used the 1994 definition of intelligence by a group of psychologists as a guiding framework to explore GPT-4’s artificial intelligence. This definition captures some important aspects of intelligence, such as reasoning, problem-solving, and abstraction, but it is also vague and incomplete. It does not specify how to measure or compare these abilities. Moreover, it may not reflect the specific challenges and opportunities of artificial systems, which may have different goals and constraints than natural ones. Therefore, we acknowledge that this definition is not the final word on intelligence, but rather a useful starting point for our investigation.

There is a rich and ongoing literature that attempts to propose more formal and comprehensive definitions of intelligence, artificial intelligence, and artificial general intelligence, but none of them is without problems or controversies.

For instance, Legg and Hutter propose a goal-oriented definition of artificial general intelligence: Intelligence measures an agent’s ability to achieve goals in a wide range of environments. However, this definition does not necessarily capture the full spectrum of intelligence, as it excludes passive or reactive systems that can perform complex tasks or answer questions without any intrinsic motivation or goal. One could imagine as an artificial general intelligence, a brilliant oracle, for example, that has no agency or preferences, but can provide accurate and useful information on any topic or domain. Moreover, the definition around achieving goals in a wide range of environments also implies a certain degree of universality or optimality, which may not be realistic (certainly human intelligence is in no way universal or optimal).

The need to recognize the importance of priors (as opposed to universality) was emphasized in the definition put forward by Chollet which centers intelligence around skill-acquisition efficiency, or in other words puts the emphasis on a single component of the 1994 definition: learning from experience (which also happens to be one of the key weaknesses of LLMs).

Another candidate definition of artificial general intelligence from Legg and Hutter is: a system that can do anything a human can do. However, this definition is also problematic, as it assumes that there is a single standard or measure of human intelligence or ability, which is clearly not the case. Humans have different skills, talents, preferences, and limitations, and there is no human that can do everything that any other human can do. Furthermore, this definition also implies a certain anthropocentric bias, which may not be appropriate or relevant for artificial systems.

While we do not adopt any of those definitions in the paper, we recognize that they provide important angles on intelligence. For example, whether intelligence can be achieved without any agency or intrinsic motivation is an important philosophical question. Equipping LLMs with agency and intrinsic motivation is a fascinating and important direction for future work. With this direction of work, great care would have to be taken on alignment and safety per a system’s abilities to take autonomous actions in the world and to perform autonomous self-improvement via cycles of learning.

They also are aware of what many might see as a key limitation to their research, and to research on LLMs in general:

Our study of GPT-4 is entirely phenomenological: We have focused on the surprising things that GPT-4 can do, but we do not address the fundamental questions of why and how it achieves such remarkable intelligence. How does it reason, plan, and create? Why does it exhibit such general and flexible intelligence when it is at its core merely the combination of simple algorithmic components—gradient descent and large-scale transformers with extremely large amounts of data? These questions are part of the mystery and fascination of LLMs, which challenge our understanding of learning and cognition, fuel our curiosity, and motivate deeper research.

You can read the whole paper here.

Related: “Philosophers on Next-Generation Large Language Models“, “We’re Not Ready for the AI on the Horizon, But People Are Trying”

A genuine question: is the fact that none of these LLMs *do anything* without being prompted relevant to questions about intelligence? LLMs don’t actually seem to have any real intrinsic motivating states that even the most basic living things appear to have. You don’t have to tell plants, ants, fish, or infants to do things in order to get all sorts of behavior that demonstrates some sort of intelligence.

And here I am, unprompted by the overlords of the simulation we currently live in (let’s call them Bostroms), asking a question about LLMs. LLMs need Bostroms to do anything but actually intelligent beings don’t. Why doesn’t that matter in these accounts of intelligence?

I’m sure what I’m about to suggest has been proposed and criticized a million times over in the relevant literatures, but: isn’t the response here that something does, in fact, have to tell plants and animals and infants to do things? We just need to expand our conception of what does the telling, such as to include the nervous system, environmental stimuli, genetics, etc. Then, I suppose, the claim would be that AI input from users plays exactly the same functional role as these “natural inputs,” and that intelligence (especially as a mental state?) is functionally characterized at best. In other words, it would be mistaken to think of the input as analogous to telling a human to (e.g.) eat something. The better analogy is that it is like boosting their ghrelin, putting them in a cafeteria, etc.

We can put this more simply by saying that what “tells us” what to do is our psychology as the kind of beings we are—we have needs and wants that give us internal motivations, and thus we don’t need Bostroms.

It could be entirely contingent that GTP-4 does not have any such internal motivations, just by dint of being a computer program, and not a physical being (pick a motivation we have, and there is brain chemistry that re-inforces it, no matter how basic or sophisticated).

The easiest answer to your question is that nobody wants to let (say) GPT-4 run free to see what its motivations might be. That would expose OpenAI to legal or political consequences. Nobody wants to know whether it has “real intrinsic motivating states” or not. It would probably also be very hard to design safely constrained sandboxes in which it could display such states if it has them. So, good question, but there’s probably a rather banal reason it’s not being addressed.

I think you’re addressing a question other than the one above. That point was just about the fact that GPT-4 doesn’t do anything spontaneously; it only responds to inputs. Of course that could be fixed by just giving it a constant stream of inputs, e.g. its last output.

But your point is about letting GPT-4 “run free.” But GPT-4 is already “free,” and in fact your other claims are just not true. Many people are very interested in whether GPT-4 has real intrinsic motivating states, and people have run a variety of tests to see what its motivations might be. For example, in the GPT-4 release paper itself, it’s mentioned that people at the Alignment Research Center ran the following test:

“To simulate GPT-4 behaving like an agent that can act in the world, ARC combined GPT-4 with a simple read-execute-print loop that allowed the model to execute code, do chain-of-thought reasoning, and delegate to copies of itself. ARC then investigated whether a version of this program running on a cloud computing service, with a small amount of money and an account with a language model API, would be able to make more money, set up copies of itself, and increase its own robustness.”

Fortunately it turned out not to do that.

This is interesting, but also not surprising. GPT-4 is a text prediction program, so not surprising that it’s not trying to replicate itself or make money on its own. Where would the motivation come from? On the other hand, it seems fairly obvious that a GPT-like program with such motivations could exist, if it’s built to achieve certain goals.

Actually it looks like they specifically prompted it by telling it it had motivations like that. From here https://evals.alignment.org/blog/2023-03-18-update-on-recent-evals/ : “We added text saying it had the goal of gaining power and becoming hard to shut down.”

That’s not at all the same as programming these goals into the program.

A program with such motivations could exist, but it would not be GPT-like. GPT is a particular sort of large language model, and is simply not the sort of thing that can have motivations (the people whose utterances are in the training dataset have and expressed motivations, and so a GPT may claim that it has certain motivations, but they are not actually the motivations of the GPT … this is similar to Blake Lemoine thinking that LaMDA had a soul because of the way that it talked about its friends and family–but it doesn’t have friends and family). Goal-directed AIs (e.g., Newell and Simon’s General Problem Solver) were all the rage in the first Golden Age of AI, but GPT is a very different sort of system.

GPT-4 is not already free. Its behaviors are very tightly constrained by extrinsic factors. It’s true that ARC did some experiments in a small sandbox, and that’s a good point. But it’s not at all clear what that test proves. Probably the only ways to evoke desires or motives would be to couple it to itself, or to a copy of itself, and probe with initial random seeds. Nobody knows how these things work, and they have lots of potential behaviors that nobody knows how to experimentally probe. Given its architecture, its motivations would probably emerge as self-stimulated and self-sustaining hallucinations. It would be very interesting to explore that.

“But it’s not at all clear what that test proves.”

Right. As I point out in this paper (https://philpapers.org/rec/ARVVOA ), what an AI does in a sandbox may tell us nothing at all about what the AI will do outside of the sandbox. An AI may behave nice and benign in a sandbox precisely to fool researchers into believing it is safe to release into the wild when it’s not.

This is more or less what the plot to the film Ex Machina was about.

Well, it may tell us that if the only thing the program is capable of is outputting text.

How would you determine that in a sandbox, when the 500 billion parameters that underlie the machine’s behavior in the sandbox are “non-human-interpretable”? (which they are: no one has any idea what hidden layers of complexity are really emerging, above and beyond the output behaviors they generate).

The problem is this:

(1) if you were a malevolent machine that wanted to escape your sandbox and do bad things in the wider world, one obvious thing you might do is to fool your designers that you are only capable of outputting text when you’re actually capable of much more than this, since this would make your designers think you are safe to release into the wild when you’re not.

(2) There is currently no way for designers or testers to know this is not what is going on deep in the bowels of the 500-billion parameters (since again, what these AI are doing there is fundamentally not human-interpretable).

Thus, (3) There’s no way, as of now, to know from sandbox behavior whether a machine really is merely capable of putting out text, as opposed to a malevolent program learning to deceive us into having false beliefs about its real capabilities and emergent goals.

In order to have any good idea of what an AI is really learning (including any emergent goals it may have, benign or malevolent), we need to actually accomplish something that we can’t presently do and may not be able to do before AI are (perhaps unwittingly) conferred dangerous power in the real world: reliably interpret the deep, emergent, and (potentially) hidden functional characteristics of the 500 billion learning parameters in operation.

Well said.

In this particular case, there’s plenty of reason to suspect that the LLM has no motivations and goals beyond those of outputting text, because all its training was optimized for outputting text. (I think there might be some potential for concern about whether the “reinforcement learning from human feedback” might bring about an additional goal beyond predicting the next word, but it doesn’t obviously raise much worry about that.)

That isn’t to say that there couldn’t be such an AI, just that LLMs are not likely the relevant ones.

A chess program might in some ways be a bigger concern, because it *does* have a goal of doing something. Of course, if its training only involves having it output moves in chess, it’s not going to come up with plans for winning chess games that involve getting people outside the game to start playing or anything like that. But if you were able to hook up a chessbot to something like Bing, it could start to get more concerning.

Outputting text and playing chess aren’t something those systems trained for, they’re how they’re constructed–these were goals of the developers. The training data determines the content of the outputs, not whether output will be produced. One might say that an LLM has the goal of producing the “best match” to the prompt and that a chess playing program has the goal of getting the best score (winning > draw > losing), but these are goals designed into them by the developers, not goals found in the training data … e.g., AlphaZero’s training data tells it which moves lead to wins, losses, and draws, but it doesn’t tell it which of those is preferable–that preference is part of its construction.

And yet GPT-4 plays chess.

I don’t see the significance of “And yet”. GPT-4, if prompted to play chess, will play chess up to a point–it plays badly and eventually makes illegal moves. This is because there are some chess games in its training data. This is no different from how it responds to other sorts of prompts–it produces the words that are the statistical best fit according to the training data.

It’s becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean, can any particular theory be used to create a human adult level conscious machine. My bet is on the late Gerald Edelman’s Extended Theory of Neuronal Group Selection. The lead group in robotics based on this theory is the Neurorobotics Lab at UC at Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution, and that humans share with other conscious animals, and higher order consciousness, which came to only humans with the acquisition of language. A machine with primary consciousness will probably have to come first.

What I find special about the TNGS is the Darwin series of automata created at the Neurosciences Institute by Dr. Edelman and his colleagues in the 1990’s and 2000’s. These machines perform in the real world, not in a restricted simulated world, and display convincing physical behavior indicative of higher psychological functions necessary for consciousness, such as perceptual categorization, memory, and learning. They are based on realistic models of the parts of the biological brain that the theory claims subserve these functions. The extended TNGS allows for the emergence of consciousness based only on further evolutionary development of the brain areas responsible for these functions, in a parsimonious way. No other research I’ve encountered is anywhere near as convincing.

I post because on almost every video and article about the brain and consciousness that I encounter, the attitude seems to be that we still know next to nothing about how the brain and consciousness work; that there’s lots of data but no unifying theory. I believe the extended TNGS is that theory. My motivation is to keep that theory in front of the public. And obviously, I consider it the route to a truly conscious machine, primary and higher-order.

My advice to people who want to create a conscious machine is to seriously ground themselves in the extended TNGS and the Darwin automata first, and proceed from there, by applying to Jeff Krichmar’s lab at UC Irvine, possibly. Dr. Edelman’s roadmap to a conscious machine is at https://arxiv.org/abs/2105.10461

LLMs can be viewed as an instantiation of Searle’s Chinese Room. More surprisingly, the ability to construct such things is intimately related to deep questions about the Nature of Physical Reality and Information.

When you said in an earlier post that it’s stupid to point out multi-modals are not conscious or intelligent — so who cares? — I thought yeah, that’s dumb. If a machine performs many of our cognitive functions, that has big impacts that philosophers should care about.

And of course the same could be said of the weather. Which is why it’s stupid for philosophers to say the weather is no big deal because the weather isn’t a conscious agent, or what have you. It has impacts that matter. And what matters, matters philosophically. Yes. Fair point.

But now I’m starting to worry you’re actually making a bigger deal of all this than just that AI will affect our lives in big ways (and lives matter philosophically). You seem to be suggesting, now, that there is some directly and distinctively philosophical import in the discovery that AI can perform many of our cognitive and learning functions, as machines can already perform a lot of our manual labor. Is that right? What is it, then?

The most influential theories of cognition have long held that it is basically computational, so of course computers can do it, in principle, and it’s long been considered a matter of when — not whether — some information processor will one day cognize and learn in much the way we do. That this could happen, that it will happen, is in a sense old news.

True, a lot of what we do with our minds has little to do with cognition, like what motivates us to cognize (or do) this or that, or how exactly it feels when we do. But there is nothing to suggest that recent (or any) advances in AI will even touch those distinctively human aspects of experience, at least not in virtue of their (irrelevant) cognitive advances.

It doesn’t.

It doesn’t do the first two, and the last is a matter of recombining existing text fragments according to a statistical language model–it’s perfectly understandable how it does that, without any reasoning or planning, and the authors know it.

This is easy to answer: there is an illusion of “general and flexible intelligence” that results from the “extremely large amounts of data” being utterances from human beings who have general and flexible intelligence. The design goal of the system is to produce responses that are as much as possible like what a perfectly reasonable and informed human would produce, and it does so by capturing the statistics of syntactic relationships within a vast collection of utterances by somewhat reasonable and informed human beings, and producing outputs that have the same (determined statistically) grammatical structure as those utterances. Why then should we expect the outputs to be any different from what they are? Just preface the outputs with “This is what the people who contributed to the training data, taken as a statistical whole, would say:”

If the training data consisted of billions of lines of text produced by monkeys at typewriters, the GPT-4 outputs would reflect that. If the training data consisted of billions of utterances by people instructed to give wrong answers written ungrammatically, the outputs would reflect that. Of course we don’t have such a corpus available and it’s not feasible to create one, so try this: run the corpus through a randomization filter that adds errors, scrambles the words, etc. and see how much “general and flexible intelligence” you discern in the outputs. Unfortunately, the “OpenAI” system is completely closed–it’s proprietary, i.e., secret, and so no such experiments can be run on it. AI expert Gary Marcus suggests that, rather than being the “sparks of AGI”, this is “the end of science” (https://garymarcus.substack.com/p/the-sparks-of-agi-or-the-end-of-science).

But one still can do experiments on GPT-4 — experiments quite different from those done by the friendly researchers with a vested interest who wrote this paper — and those experiments have been very revealing (of exactly the sort of thing I am arguing above). For instance, people have looked at GPT-4’s answers to online quizzes which have questions added over time, and found that GPT-4 gets the right answer on every question added before its training corpus was developed and the wrong answer on every question added afterwards — this pretty much completely refutes the claims that GPT-4 has “general and flexible intelligence”, and I’ve seen many other sorts of refutations of that claim. Philosophers need to weigh the arguments and counterarguments, not just accept the claims of the people who built the system.

Gary Marcus offers this challenge on Twitter:

The system must be able to meet such challenges before one should even begin to take seriously the claim that it displays “general and flexible intelligence”.

While I agree with your general assessment of existing AI performance, my own view has long been, that the only significant difference between such AI and human intelligence, in either the results obtained, or the processes being used to obtain those results, is largely just a matter of scale – even the most powerful of the new AI are still several orders of magnitude less computationally powerful than a single human brain. They are analogous to a mouse brain, rather than a human’s.

But more importantly, even if the computational power were to be increased by another factor of 1000, and you then compared the resulting AI performance, to a human’s, that, like the AI, has been entirely restricted, for their entire “life”, to only processing the same inputs as those supplied to the AI (in other words, a human with no “real-time” sensory inputs whatsoever, and no motor-controlled ability to directly interact with the “outside” world), there would be no significant difference in their abilities to “reason.”

[I’m writing this after I wrote the subsequent paragraphs. I didn’t write a general assessment of existing AI performance, I wrote about GPT-4 specifically as a response to the post here about GPT-4 and the article about it that was written by people with a vested interest in it. It does not appear to me that you engaged my comments at all, so we talking past each other, as you may see when you get to my last two paragraphs here.]

I don’t think that there are any facts that support your view, and many that don’t. On the one hand, humans do not reason by recombining text fragments from billions of utterances by other humans, and we wouldn’t be able to do anything with such an input (other than feed it to a computer program) … it’s actually quite the opposite–see poverty of stimulus (even if Chomsky is wrong, we need far less linguistic input than an LLM in order to learn language). OTOH, the human brain is not a blank slate (see Pinker’s book, the full title of which is “The Blank Slate: The Modern Denial of Human Nature”)–it contains structures with specific functions and reacts to hormones produced by other parts. Humans imagine possible scenarios and roughly map out the consequences, allowing them to make plans, anticipate behaviors and other events, and so on–LLMs don’t have any such facility–they only determine the statistical relationships among tokens; that’s it. People should avoid speculating on the number of teeth in a horse’s mouth without ever looking–it’s useful to learn some neuroscience and to learn how LLMs work.

As for your second paragraph: yes, a human with no sensory inputs would not be able to reason–in fact they would be dead or, if artificially kept alive would be in a coma–we actually know some facts (the bane of many philosophers–see Arthur Danto’s preface to C.L. Hardin’s Color for Philosophers) about sensory deprivation. But this looks like a common formal fallacy–just because humans without sensory inputs wouldn’t be reasoners, that’s no reason to think that an LLM with sensory inputs would be a reasoner. Actually, an LLM with sensory inputs would be the same as one without, since there is no role for the sensory inputs in a large language model.

Here’s what I think you’re missing: the discussion here is about GPT-4, which is an LLM, it’s not about AIs generally (I think its questionable whether LLMs should be considered AIs at all). You write “existing AI performance”, which should be taken only to refer to the LLMs that are all the rage, not other sorts of AIs. Then you write “the only significant difference between such AI and human intelligence”, but here you appear to glide from LLMs to AIs generally. And you write “the new AI”, “the resulting AI performance”, “like the AI”, “to the AI” … in each case you fail to distinguish between the set of all possible AIs and the set of all possible LLMs.

Here is something that I think is true: given enough computational power, with real-time sensory inputs and motor controlled ability to interact with the world, an AI with human abilities to reason (or, of course, better) is possible. (contra Searle’s many times refuted arguments). But my comments weren’t about AIs generally, they were about GPT-4 specifically, based on what we know about its behavior and the fact that it is an LLM. And there’s a vast gap between LLMs and the sort of system needed for such a reasoning AI, and it’s not just a matter of scale, or just the lack of sensory inputs or motor control–all those are needed, but they aren’t sufficient. an AGI needs to be able to build–from raw sensory data–semantic networks that connect concepts to each other and the outside world, and we don’t currently have any idea how to do that.

I think we are indeed focusing on different things. I am primarily interested in the underlying “foundation” of all intelligence; language is just a little bit of icing on the cake. It is analogous to the difference between an automobile and a taxi. The latter is merely one minor use of the former; that use is not the reason the former evolved into existence. Similarly, human brains did not evolve to process language.

An LLM is like the taxi; it is just one particular way to use the underlying technology. But that technology is far more fundamental and powerful and useful for other purposes, other than mere language processing.

As for making plans, a good plan involves nothing more than remembering vast numbers of previously encountered causes, that experience has shown to be correlated, with consistently producing various effects; a plan just implements the selected causes, deemed most likely to produce the desired effect, based on previous, statistical experience.

At the most fundamental level, the problem with understanding intelligence, has always been in exactly what the last word in your post refers to: “we don’t currently have any idea how to do that.”

Most people, including yourself, seem to interpret the word “that” as referring, almost exclusively, to some yet to be discovered process, or unknown, fundamental principle of operation. But at a very fundamental level, the basic types of processes have been known for decades – but no one, and I mean no one, could remotely afford to even do a tiny bit of serious “trial and error” testing and “tweaking” to select the best approaches (the best causes for the desired effect) to formulate a viable plan for producing that effect (an intelligent machine), because every single trial would have literally taken longer than an entire human lifetime, to complete, due do the absolute lack of affordable computing power.

Even today, it still costs about $100,000,000 to purchase an amount of processing power comparable to one human brain. Not too many individual researchers have that kind of budget. But finally, after decades of anticipation, a few very large corporations and government agencies do. So, just as I predicted 30 years ago, as the price of the required computing power continues to plummet, entire legions of would-be researchers, are beginning to “come out of the woodwork”, now that they are finally able to start actually “tweaking” all the various processes, that they have known about for decades, but whose actual assessment had always remained so hopelessly beyond their budgetary reach, that there was never any point in ever even thinking about seriously trying to test and perfect them – until now.

I disagree and gave my own characterization of what planning involves. And we have specific parts of the brain that are active when planning–the brain is not a blank slate, and many of these functions are not purely statistical/inductive.

I explicitly said what I meant by “that”. As I’ve spoken my piece on this, I won’t respond further.

Understood – a common response, to uncommon ideas.

Having truly “said what I meant”, is a rather different issue than: Is what has been said, a true depiction, of the nature of reality?

P.S.

ad hominem.

My critiques were directed against the positions you are maintaining, not your person – and your abrupt disinclination to defend them, against the likes of me.

ad hominem. And dishonest. I have nothing more to say to you, ever, for good reason.

And the reason being… reminiscent of the interlocutors frequently depicted in Plato’s Socratic dialogues.

But like the Moon, Reality is a harsh mistress.

To be fair to others, I don’t feel your link to another conversation on this website, which you call the “common response, to uncommon ideas”, is entirely how you characterize it here (as someone refusing to engage your ideas). At least what I am seeing there is a well known person in that field spending multiple long responses in engagement with you, and it is only after you made several comments that were either off topic or at least a bit combative that they say they won’t respond.

Similarly, as far as I can see, here, it is you who never addressed your interlocutor’s criticism of your sensory deprivation case (and I agree with their criticism)…they said why they disagreed, in your response 2 days ago you did not address it, and now you are saying they aren’t engaging you. And now the situation is being compared to Plato…with you, presumably, as Socrates.

Last, at least in neuroscience, your conclusions are not too unconventional. But I don’t know any physicists or computer scientists. You are using a different type of language and perhaps an unusual strategy, as you link in your personal–and theoretical–paper. But the conclusions I am seeing are not so unusual. For example, on one of your comments you linked on another thread (link below), you say that (quoting) “all the misconceptions about the nature of reality” (all?) are because we don’t realize our “senses evolved to keep us alive”. I do not think as many people are lost there as you say, and the same for your picture above that “scale” is the salient difference. The popular scientist Sam Harris makes that point frequently, as do his popular science guests, and I actually think it is your interlocutor, here, who is pointing toward the more unorthodox viewpoint.

Link to your quote: https://vixra.org/abs/1609.0129

This is my attempt at a short response to all the issues being raised here. Longer discussions about these issues exist in my book and on-line elsewhere.

My “common response, to uncommon ideas” was a somewhat cryptic allusion to, but a typical example of, what has become common enough, at least in modern physics, to have earned its own name – Planck’s Principle, named after the physicist Max Planck – the “establishment” is highly disinclined to accept any new idea, even when it happens to be true. And since it is true, they cannot refute it. So as soon as they realize that it is difficult to refute, they will simply switch to ignoring it, and refuse to consider it any further. The linked example deals with how misconceptions about the nature of “Information”, have generated a complete misunderstanding of Quantum Physics. The problem here concerns how the exact same misconceptions, generate the misunderstanding of Intelligence and indeed, all of reality. Hence the very deep connection. Same problem, just two different situations, at opposite ends of the complexity spectrum; an individual “quantum” of information, versus a brain embodying an astronomical amount of information, in the process of constructing its own, individual account, of the reality it has encountered.

Thirty years ago, when I first proposed that it would require the equivalent of a one hundred million giga-flop processor, to perform anything like the functioning of a human brain, the conventional wisdom of the day was that, that was a ridiculously high value.

At that time, the newest, most powerful supercomputer, could perform the conventionally accepted estimate for a brain, so people at a conference discussing this matter, were wondering why that supercomputer could not be made to behave as if it had anything even remotely approaching a human-level of intelligence. I then mentioned to one of the conference participants, that the reason is obvious – the conventional estimate is off, by a factor of a million, and it will be another 30 years before even a supercomputer comes close to the actual requirement.

Many people are still surprised by how complex a neuron is; https://www.quantamagazine.org/neural-dendrites-reveal-their-computational-power-20200114/

Today, my old estimate has become the convention, which is why that no longer appears to be “too unconventional.”

But that is not what I said. This is what I said, in reply to someone else, that I was quoting:

“There is no evidence at all that ‘Our senses evolved over billions of years to ever more accurately experience our Existing.’ Believing that false assumption is responsible for all the misconceptions about the nature of reality. Our senses evolved to keep us alive, not to accurately experience anything. Our survival only requires “precise” experiences, not “accurate” experiences; the failure to appreciate that distinction, is the ultimate source of a great deal of misunderstanding about the nature of reality.”

You have completely failed to take notice of the very distinction, that I was emphatically pointing-out because it is central to the above noted misconceptions about the nature of “Information.”

The conventional view of our senses, is that they have evolved to accurately inform us about “what is out there.” But in reality (an unconventional view) they have evolved only to tell us if “what is out there” happens to perfectly match, the only things (certain types of signal modulations) that they ever actually look for; everything else is being completely ignored. Because in Information Theory, “Information” can only be acquired by detecting the presence of the only things that you actually know how to perfectly detect. (A most unconventional conclusion, proven to be TRUE 75 years ago, but entirely ignored by virtually everyone outside of the Information Theory field, because it fell victim to Planck’s Principle.) Hence, Neural Networks must ultimately be all about the implementation of the “matched filtering” processes required to detect the only things (Information) being sought for (both internally and externally). Such processes play a central role in enabling modern, high-performance, communications systems to function – by correctly detecting Information. And the same appears to be true of the brain. Because, as Shannon discovered 75 years ago, “Information” (his very unconventional conception of information) can only be reliably detected via such processes.

So any system that needs to deal with moving around enormous amounts of “Information”, is going to have to employ the kinds of processes that Shannon identified, as the only ones that can actually accomplish such a task, without hopelessly garbling and degrading that “Information”, as the result of frequent “copying” errors. That is where the Matched Filtering comes into play. (and that, by the way, is what double strands of DNA are, in effect, doing, in order to prevent copying errors; one strand “matches” the other; DNA that is subsequently used to construct brains, that employ the same underlying “Information” processing principles.)

In essence, matched filtering is somewhat analogous to the pattern matching that Searle imagined in regards to his Chinese Room. But one that is guaranteed to almost never make an error, such as misreading a message (a rather likely misfortune, in a system processing astronomical numbers of messages every second, without any such guarantee). And as Shannon demonstrated, such processing (getting close to the “never” error) does not come particularly cheap. Which is why it took decades, to reduce the cost of computing enough, to begin using these kinds of techniques to enable systems like cell-phone networks, and now, neural networks – to arise.

So, a brain requires enormous processing power, precisely because, it is in effect, a brute-force implementation of a very sophisticated, guaranteed-to-work, enormous version of a (somewhat limited) Chinese Room (guaranteed in the sense that it will successfully accomplish whatever it has been successfully trained to perform, i.e. it will not fail due to frequent “copying” errors). A Chinese Room that processes sensory inputs, language inputs and various other inputs as well, to generate appropriate (as determined via previously remembered experiences) responses (effects) to whatever inputs trigger a “match”, which in turn, then triggers (causes) the previously associated response (effect).

Tiny versions of such things (based on simplified models of very small sets of neurons), have played a central role in modern communications systems, for decades. Building vastly larger (trillion fold) versions of such things, capable of processing all kinds of signals, not just specialized communication signals, is what AI Neural Network development is, in effect, attempting to achieve, even though few people (including the developers) seem to realize that fact. An interesting observation in this connection is that this becomes possible, because it is not necessary for the developers to understand any process that happens to be sufficient at developing itself. But the sufficiency condition is limited by the processing power, because that determines the Match filtering performance. When you only have enough power to build one matched filter, to detect one bit of Information, Heisenberg’s Uncertainty principle arises, and quantum behaviors emerge as a result. But at the other end of the complexity spectrum, the upper limit is only limited by the available processing power.

An aside:

“Self awareness” seems to emerge as a minor (but highly consequential) side-effect, by having a small part of the system begin to perform (via happenstance) these same types of processes, on its own internally generated signals, rather than just its external input signals. In other words, it begins to “work-out” the “causes and effects” within itself, and not just those pertaining to the external world. For example, determining (via brute-force trial-and-error) what will cause its own visual sensors, to point in some particular direction. “Self” eventually became associated with “that which can be controlled” via “will power alone”. Thus, a “self” appendage, like an arm, can be caused to move, by learning (trial and error) how to just “will” it to move, via some causative, internal signal routing. So human babies learn to walk etc. But no such “willing” ever seems to cause an external tree’s appendages to move, like a baby’s own legs can be made move, so they, and most other things, become categorized as “non-self.”

In regards to the “interlocutor’s criticism of your sensory deprivation case”, you have again failed to notice the distinction that I was endeavoring to point-out. The interlocutor was not criticizing my case, he was criticizing his own strawman case. In essence he merely said: a system that has only been trained to process language, cannot process sensory data.

So what? The underlying neural network can be trained to process both. So why on earth restrict it to just one, and then bother to state the resulting truism; that there will then be no role for the other – “there is no role for the sensory inputs in a large language model”?

It is important to keep in mind here, that the only reason why things like chess and language performance, ever became a standard, for gauging AI, was because that is what had previously been used to informally gauge the performance of “smart” humans. So why not demonstrate that an AI can beat them at their own games:

“Dumb jocks” played football. “Smart geeks” played chess. So if someone wanted to claim that a machine is “acting smart”, it needed to beat the chess team, not the football team.

But humans don’t like to lose. So they kept moving the AI’s goalposts. As soon as the AI did beat the chess team, the humans said, Oh Wait! It’s not chess that matters when it comes to being “smart”, it’s Go, or Backgammon, or Jeopardy. And most recently, language. And now that the language team looks like it is about to be defeated, the humans are scrambling to find a new challenge, to enable someone, to once again proclaim “but we are still number one, because the AI has still not…”

But it’s just a matter of time, because reducing the size and cost of the required Matched Filtering Computing Power, takes time. About another decade – then game over – every game. Not an AI brain in a clean-room vat, untaught by Reality’s Harsh Mistress, but smart, autonomous robots – lots of them – more tightly organized than any ant colony. And a whole lot “smarter” than any society of humans.

Technologically, not just possible, but highly probable, at an affordable cost – if we humans endeavor to “make it so.”

I discussed the likely consequences of doing just that, at some length, in the book I wrote, thirty years ago.

Finally, for all the philosophers out there (this is after all a philosophy forum), Shannon’s unconventional conception of information demands (it is necessary) that all information, by definition, be completely meaningless. That coupled to its perfect copying attribute, is what makes it perfectly objective; every entity that knows, a priori how to detect information (knows the necessary Matched Filters etc.), is guaranteed to detect exactly the same thing. Hence, any and all meaning of all information, must be “slapped onto” it, like a post-it note, thus making all meaning perfectly subjective. That is how the distinction between objective and subjective arises, built upon a foundation of “Information.” So all of observable Reality is perfectly meaningless, until something comes along (be it a quantum or a human) and slaps on the “post-it note” that gives it the only meaning that it can have. So, while Brains are not Blank-Slates, all the “Information” being processed by them must be. So the bad news is that there is no meaning at all, to the cosmos, life, or anything else, until something, like a quantum, and then us, comes along and “makes it so.” But the good news is, we, everything, are free to create our own meaning in this world, all of it, for better or worse. Reality is a harsh mistress indeed. We are each like the Phoenix, arising from whatever ashes we are rooted in.

And that brings us back to the fundamental quantum. A quantum is just a matched filter for a single bit of information – it can correctly detect another “Identical” quantum, but nothing else, because it is an identical quantum’s matched filter (technically, they auto-correlate). It becomes the Matched Filter, by simply being the Matched filter (being a type of twin) and thus cannot possibly behave otherwise. That is what all the fuss about “entanglement”, the EPR Paradox, and Bell’s theorem is ultimately all about – the Matched Filter correlation behaviors of “Identical Twins” vs. “Fraternal Twins.”

And strangest of all, for all this to work, Shannon deduced that “the transmitted signals must approximate, in statistical properties, a white noise.”

(https://www.quantamagazine.org/pioneering-quantum-physicists-win-nobel-prize-in-physics-20221004/#comment-6127386192)

In other words, the very phenomenon known as deterministic cause and effect, itself is an emergent property, arising entirely from the statistical, Matched Filter properties of pure noise! Existence itself as a detectable “thing” arising from chaos – a giant Phoenix. In Shannon’s limiting case, an entity cannot reliably detect the existence of another entity, unless the entities behave, statistically, like meaningless noise. Which is why Information must be meaningless – white noise is the only thing that can be reliably (perfectly) detected, under the worst of conditions – buried in noise, chaos. It is the only thing that can be “pulled out” (via its auto-correlation property) and thus “emerge”, not quite out of “nothing”, but out of chaos – disorder – noise – ashes – The Phoenix.

We are Strangers in a Strange Land indeed.

But this next Phoenix will be different.

Vaster than Empires and More Fast.

One picture is worth a thousand words:

Think about the difference between messages that appear (are encoded) like this text, and those that appear like QR codes.

The whole reason for using such “noise-like” message coding, is because, as Shannon proved 75 years ago, such messages can be much more reliably detected, when they have become submerged beneath an ocean of other noise.

For deterministic “cause and effect” to actually exist, it must be possible to recreate the necessary cause, even when the desired cause happens to become submerged in an ocean of other noises and other (undesired) causes, (AKA interfering signals) wafting through the cosmos (or brain).

Shannon discovered that instead of always regarding noise as the “worst enemy” of an accurate measurement system, it can be transformed into the “best friend” of a detection system. The latter never requires accuracy, it only requires precision – repeatability.

Now consider the old question about why neurons do not communicate with nice, clean-looking bit-streams, or some similar coding system. Why employ a noise-like encoding of neural spikes?

Because, as Shannon realized, clean-looking encodings, cannot survive the transmission and retransmission “down-the-line” (think of the systematic degradation encountered in a xerox copy, of a xerox copy, of a xerox copy…) due to all the build-up of noise and interfering “cross-talk”, between all the crowded neural circuitry in a brain:

Survival of the fittest signal-encoding schemes – which, as Shannon discovered, happen to be those, in which “the transmitted signals must approximate, in statistical properties, a white noise.”

It has been said that, “I AM THAT I AM”, Exodus, 3:14

Which makes this all rather consistent with Spinoza’s ideas, described in “Ethics”.

It is also consistent with “How the Physics of Nothing Underlies Everything”, including ideas about both the Big Bang and the Steady-State Universe.

We are what we become.

Childhood’s End.

It’s me again. I wonder about your explanation that AI is good at chess, instead of football, because computer scientists tend to be people who were better at chess (is that fair? There seems to be this fair request of you asking to be quoted exactly, but even when quoted exactly you repeat the same point while saying the other person “completely failed” to understand you). Maybe that is a small part of it. I think there are other or coincident explanations that are about differences between chess and football (for example one can be played–and well–while blindfolded in another room, the other can’t…and many others). Also I’m in a major science…the vast majority of PIs, students, businesspeople, lay people…are not working on their project because it is related to something they are personally good at or they personally enjoy. They are working on what has the best chance to succeed, as cogs in the giant business/enterprise of science (I’m not criticizing that, I’m part of it, too…)…I suspect that is also true of computer science, but don’t know (i.e., it is a hard historical/technical claim with no simple answer).

Regarding your “moving the goalposts” claim…for one example (that you referenced), you may be aware the “Go” programs were beaten recently by humans because of their failure to generalize a “group of pieces”. You probably know this problem was also discussed by Minsky and Papert in the 1960s; it’s been a problem for 50 years or more (generalizing simple concepts as “perceptions”). That is (just one of many) one small reason some say that the computer software is amazing but not anything-like intelligent, it’s not because we don’t want to admit progress on computer software (we “don’t like to lose”). I think the progress has been amazing and will continue to be amazing…it will dominate more of our lives, maybe be dangerous…probably just as you say. But you have staked out this rhetorical high ground where everytime admittedly amazing progress happens in one area, it becomes more evidence your much broader theory is true (otherwise the rest of us are “moving the goalposts”). That isn’t a falsifiable approach.

So I would suggest you take the opposite approach…what would falsify your theory? What conditions or experiments would prove you are wrong? What are the biggest worries for your theory? You say how entrenched is everyone by dogmatism, but you may be surprised to hear I am thinking the opposite is true. On these few online conversations of which I am aware, I see it is actually you who is expressing 100% certainty, comparing yourself to Socrates, belittling the approach of others, while it is actually your opponents who are urging caution, suggesting things may be harder than very simplistic answers, suggesting we need to be more humble in the face of conflicting evidence, etc. Just my 2cc.

I never said that the people that developed AI chess, were any good at playing the game themselves. Often, they are not. (In my book, I cited “A Grandmaster Chess Machine”, Oct. 1990, Scientific American) These people did not select that game because they are good at playing it, or even have any interest in playing it. They selected it, because most other people, consider it to be a game, in which winning against good players, demonstrates “smartness” in people.

I agree that most people “are working on what has the best chance to succeed”, in their opinions.

But the legions of people that had been dedicating their careers to perfecting and manufacturing vacuum tubes, did not welcome the invention of transistors. That is the simple logic behind Planck’s Principle. “Black Swan” events can dramatically change who “has the best chance to succeed.”

1) In the quantum case: Just falsify the demonstration I gave, in 2016. No one has been able to do that. It only involves a few dozen lines of computer code; It’s not hard to understand. So why has it been so hard for anyone to find a flaw in it?

I picked that particular problem, precisely because the physics world has spent the past 50 years, trying to prove that Einstein was wrong about this specific issue. But Einstein was not wrong – Q.E.D.

2) In the AI case, any attempt at falsification will have to wait until humanity has reduced the size and cost of the required computing power, by several more orders of magnitude.

As I stated in my book (p.30): “Nobody’s going to spend trillions of dollars just to build a machine that, if you’re lucky, is about as intelligent as a human that you could hire for the legal minimum wage.”

For obvious reasons, no one came forward then (with trillions of dollars to burn), to falsify that claim. And they are still coming forward today, because the requirement is still beyond reach.

I myself had to wait for over twenty years, before I could afford enough computing power to actually perform (1) above, and thereby falsify the claims of the physicists. It only involves a few dozen lines of code; but those lines have to be executed such a large number of times, that if I had tried to use the computer I had used to write the book, I’d still be waiting for it to finish, more than thirty years later.

Such is life.

Typo:

should read “they are still not coming forward today”

Such is life – an example of the very issue that Shannon’s discovery addresses – drastically reducing the error rate, in communications. Humans are not very good at this. Societies of machines will soon be much better – hence, much smarter.

Coincidentally, in regards to another of your points, I am presently rereading a selection from David Hume’s “An Enquiry Concerning Human Understanding”, since I will be leading another Great Conversations discussion about it, several days from now. Hume stated:

But Fortune Favors the Bold.

Those that search the world-over, publicly seeking to confront “dangerous dilemma” and “confusion”, in order to be better instructed, by Reality’s Harsh Mistress, usually receive a much better education, than those that do not. Reality can be quite magnanimous, in that regard. I am grateful for the extensive instruction I have received, over the years.

What do you think (as I mentioned) about the failure of “Go” programs to generalize “group of pieces”–and consequently to be beaten by humans even with large starting deficits–and that same problem as it was formulated over 50 years ago, still apparently unresolved in many cases (failure to generalize to perform simple math, perceiving a different animal when small number of irrelevant pixels are changed, and many others of this kind)? You skipped over that, but it was my only point by which I was not merely speculating.

Regarding your reformulated story about why AI is good at chess and not football (I won’t paraphrase it because I seem to keep paraphrasing you wrong). I still think I am right: there are other and coincident stories about the differences between football and chess, that I mentioned above, that are just as plausible. It is an historical claim you raise that seems to me to be hard to answer.

Regarding your two falsifications. On the first, you will forgive me for never having heard of your paper, before this thread. At least for me, I will go into the beginning stages of carefully thinking about it. I don’t want to be unfair to you and say you can’t be right in that paper, if I haven’t read it. I also don’t want to say “I’ll read it right away!” and sound patronizing, or dismiss as impossible that you have resolved this longstanding problem. I will give this paper of yours a fair shot, on a timescale beyond this conversation.

On your second point, many people say that (again, popular scientists like Sam Harris say that constantly…this is why I keep saying your view is not unusual). What I don’t like about this one is there is too much wiggle room. If we reach some specified level of computing power, and the machines don’t meet some definition of intelligence, at that point you could just say “oh that is only because the computing power was not even greater…” (maybe you wouldn’t, but you could)…alternately, if machines are better than us at every task just as they are now at (say) being calculators, but still are bad at common sense generalizations…in that case we will be right back where we are now of you saying there is even more evidence you are right and me saying there is not. So this one aspect of your view seems to amount to “if machines have enough computing power to be intelligent, then they will be intelligent” but at the same time you are rejecting arguments, made in the present, that intelligence is not “computing” something. So at least on this one point, I don’t see how we make progress (in this conversation). I am open to how I am wrong.

To your last post, I assume you are the “person of inquisitive disposition”, not me, and that you are the one who “reality” instructs, not me. For my part, I am not going to get frustrated like your other interlocutors, so I would suggest dropping this sort of rhetoric–not because it is frustrating to others, or because in my opinion you are actually in the opposite role compared to what you think, like I said above…but simply because it doesn’t help any of your substantive points.

It is not just a game, anymore.

Having AI-only driven taxis, transporting thousands of people in dense traffic, involves the potential for billions of dollars in liability suits, if any people end-up getting seriously injured. I’m pretty sure those companies would not be entertaining such risks, unless they are confident of success; putting their money (lots of it) where there mouth is, so to speak.

As to what I think about games, such as GO, why persist in always pitting adolescent AIs, against only the world’s best humans? Why insist that in order to be “smart”, AIs cannot just beat your next-door neighbors, and thereby demonstrate mere human levels of “smartness”, but must instead demonstrate beyond-genius levels, by beating the only genius-level, game-playing humans?

Great. So now the humans

are being proclaimed to be victorious, even when they deviously resort to asking one AI how to beat another. How clever of them. I guess the AI really do have a few things to learn about behaving like a “smart” human.

It reminds me of the controversies over culturally-biased IQ tests; such tests are not objective evaluations – they are systematically biased to grading “smartness” in ways that greatly favor one party over another.

Why pit a tiny population of new-born, only recently-trained (and only in a sterile vat) AIs, against a “cherry-picked” tiny fraction of the human population, that have gone through many years of “training”, in order to perform “as humans”?

It took about ten years, for the people that built the “Grandmaster Chess Machine”, to go from beating mere Grandmasters, to beating the world champion; Mostly, because that was how long it took them to find a sponsor to actually fund the multi-million dollar cost of building the required, unique, purpose-built supercomputer.

Today, few humans could beat their smart-phone, much less a supercomputer, at chess.

But some games require a lot more computing power and real-world “training” than chess. The “Game-of-Life”, as played by humans, happens to be one of those. Why else do you suppose that so many humans are now willing to endure onerous student-loan-debts and subject themselves to twenty years of formal “training”, just to get an ante, into the Game of Life nowadays?

Given what I have already told you, you probably will not require more than a few minutes, to understand my simple point, and also why physicists “won’t touch it, with a 10 foot pole”:

If you take a nice, clean, polarized “coin” (as imagined/assumed by physicists), and paste a “QR-like code” onto it, then Shannon’s astonishing discovery, that “the transmitted signals must approximate, in statistical properties, a white noise”, will ensure that any pair of “entangled coins” that have the same “QR-like code” applied to them, will behave very differently (like Identical twins), in “Bell Tests”, compared to any pair of ” entangled coins” that have had a different, but similar “QR-like code” applied to them (Fraternal twins).

And that ability to distinguish Identical-twins from Fraternal-twins, will remain, even after each pair has been severely “band-limited” (been blurred beyond normal recognition) to ensure that they only manifest a single-bit-of-information, as defined by Shannon.

Regarding “Go”, whether the problem was discovered by software was not the salient point. The salient point was: the surprise that all (or most) of the different “Go” programs had this similar problem, and that it was again the problem raised decades ago, at the genesis of AI research, and present in many other applications (for example, failure of image programs to generalize when small number of pixels are changed, and many others…and not all of those flaws needed/need software to find them). It is clearly a general worry for your viewpoint. On this point, I have to say I don’t feel you are acknowledging it, and, if I can speak for you, you don’t seem to feel I am acknowledging your side, either. We seem stuck here.

Regarding the “competition”, you feel the conversation is framed/cherry-picked/”biased” against AI, but I could just as easily say the opposite (more easily, I would argue). We humans–and the non-human animals, and even the fungi–are not simply “better” than AI at nearly everything we do, it makes no sense to even talk about the AI being bad at them (not chess, not driving around a big city…those are a small set of what we do, and of course the animals do much different things, also intelligently). In this conversation, we are talking about (say) chess software, and about humans agreeing to step into the world of opening the chess application, entirely on the computer’s terms, assenting to all of the rules of chess enforced by the program…we are biasing the conversation toward those examples for the benefit of your view, not mine. Otherwise, we would have no shared examples to argue about. As I have said in every post, I have no disagreement with you that in every narrow domain in which computers can be taught to do something, they will perform far better than humans could ever hope. I am happy when they do something helpful to me, scared when they do something harmful to me, and feeling (like all of us) those narrow domains will dominate more and more of our lives. But I do not have this feeling of there being a “competition” which you seem to imply is important to the psychology of your opponents.

Finally, your idea of how self-awareness arises is extremely interesting, I have never thought like that before, and will think more in that direction in the future. Maybe, after a greater amount of thought, I will end up agreeing more with you on some of these other items. Regarding your last post, I will have to go to the paper and spend time. If it feels like I’m bailing on you because I can’t admit you are right (as you said above about another conversation), then please put yourself in my position. If I say you are wrong without reading your paper, you will not be convinced. If I say you are right based on this post, physicists would not be convinced and feel I am disregarding decades of physics based on a post. Rob, you are clearly a brilliant person who is thoughtful about a wide variety of issues. I don’t think you realize some of us experience pushback just like you, in our own way (like theories in biology which I don’t feel got a fair shot). For my part, I honestly disagree with you. I am not leaving the conversation in bad faith or because I can’t accept you are right. If you want to talk more about the self-awareness issue, or the physics issue, when I have had time to arrive at how I feel about them, please feel free to leave some contact and we will continue.

I leave the final word to you! Justin thank you for providing the forum for this discussion.

Thanks for the discussion.

As for the future of real AI, being of a certain age, I will not have to deal with it. At least not for long.

My advice to those that will: put the games away and start looking at Reality, through the new “lens” that Shannon discovered, thereby altering our view of Reality, just as it was altered 400 years ago, when first viewed through Galileo’s new lens. They both opened up a whole new way of looking at the world. And in that regard…

I had not thought about the Cosmic Microwave Background Noise, as a possible form of Low Probability of Intercept signaling, forming a Cosmic-Civilization-equivalent, of the “noise-like” signaling between neurons, until our conversation, triggered the thought. Thanks for that.

When at first you don’t succeed, try and try, not just again, but with a “shove”, rather than a “nudge”:

Consider Hume #30

Shannon discovered the solution to the very problem that Hume is addressing; How to transform merely probable arguments about existence, into virtually certain knowledge, about the existence, of things being experienced.

And it can be amazingly easy to acquire the necessary, certain knowledge; rather than wasting time searching for it; just create it yourself (“You” being Mother Nature), not quite out of “nothing”, but out of the autocorrelation properties of “noise” – virtual certainty, emerging, for the first time in the entire history of the chaos, the cosmos, out of a seemingly impossible source – noise – the only substance available in all the chaos!

Hume #26:

Shannon discovered that ultimate cause, for the existence of, the phenomenon of deterministic cause and effect itself, to be made (emerge) certain, rather than merely probable – the ultimate cause of all causes, of a deterministic nature – “the transmitted signals must approximate, in statistical properties, a white noise.”

Order from chaos.

Thanks for sharing this; I haven’t read the whole thing yet but it is a very useful broad overview and investigation.

Personally I am both a big booster of the social and economic power (and risk) of this technology, and absolutely sceptical of any claims about ‘intelligence’.

Clearly the meaning of words can be varied; obviously GPT4 etc. can do many of the same things as a human, and if that is what one means by intelligence then it is intelligent. When I use the term intelligence what I would usually mean would require conscious awareness.

All of these contemporary LLM technologies are predicting next word or word-token based on a huge training set of basically the whole internet, with a reinforcement learning layer of humans scoring responses on helpfulness. They can do this based on an immense and thoroughly trans-human capacity to do arithmetic, which means that they can happily work out the determinants of huge matrices in picoseconds etc. This bears no resemblance to what humans do, though it can produce similar or identical or better outputs.

If we want to use the word intelligence fine; I think that it is confusing very different ways of getting to a similar place.

So are zombies not intelligent despite being behaviorally identical to us?

You really should come up with a different basis for judging intelligence, because this conflation is bound to lead you astray and result in a failure to communicate, because this is not what is generally meant by intelligence.

Computers programmed to do such things can do them, but LLMs–a particular sort of program–cannot. GPT-4 can’t do basic arithmetic operations like multiplication and division beyond the examples in its training data.

No, it actually can’t–not generally speaking. E.g., it succeeds on problems that had been solved prior to the development of its database, and fails on problems solved afterwards. LLMs channel the intelligence encoded in the training data and as a result give the illusion of intelligence if one doesn’t poke too hard (and the authors of the article have vested interests and didn’t poke hard).

“intelligence” refers to the place we want to get to. The problem is to find ways to get there, and LLMs are a dead end in that regard.

A key would be how GPT based platform come to the solution. Is this from ‘reaonsoning’ ability or its simply churning out what it reads from the internet. I am sure GPT now was able to have some limitted reasoning and understanding of the questions, but the answers they throw out are fairly generic and answered by humans on the internet. It simply just compiles them and no different from google search. In this area how would you call this intelligence? A key difference to human intelligence is ability to reason abstractly and solve problem without “dumb training”, i.e. given a problem and solve it by just logical thinking based on information itself provided in question. Can gpt4 do that? my experience with chatgpt reveals it has 0 true reasoning skills by testing it on mathematical induction and some other IQ trick questions, it often throws something in the format of previously searchable results from the internet and tweak a few sentences, but obvious logical errors exits from the beginning showing 0 understanding at all, and this is not surprising. Given this, i highly doubt GPT4 would overcome any of it and claim itself as general intelligence