Microsoft Jettisons AI Ethics Team

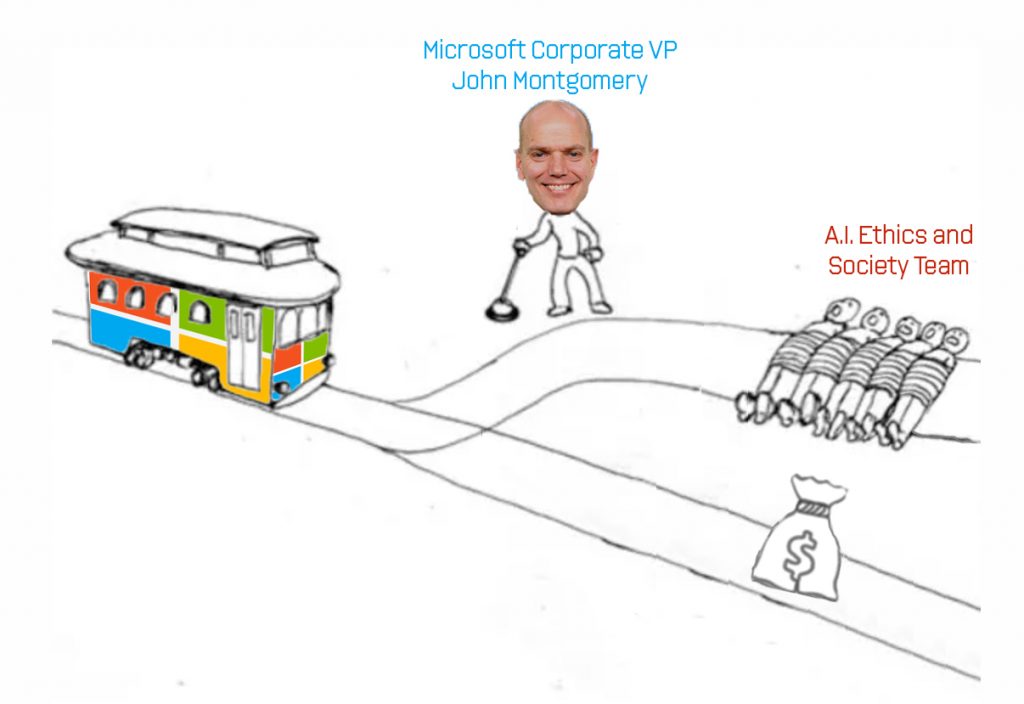

“Microsoft laid off its entire ethics and society team within the artificial intelligence organization,” according to a report from Platformer (via Gizmodo).

The decision was reportedly part of a larger layoff plan affecting 10,000 Microsoft employees.

Microsoft is a major force in the development of large language models and other AI-related technologies, and is one of the main backers of OpenAI, the company that created GPT-3, ChatGPT, and most recently, GPT-4. (Just the other day, it was revealed that Microsoft’s AI chatbot, “Sydney,” had been running GPT-4.)

Platformer reports:

The move leaves Microsoft without a dedicated team to ensure its AI principles are closely tied to product design at a time when the company is leading the charge to make AI tools available to the mainstream, current and former employees said.

Microsoft still maintains an active Office of Responsible AI, which is tasked with creating rules and principles to govern the company’s AI initiatives. The company says its overall investment in responsibility work is increasing despite the recent layoffs….

But employees said the ethics and society team played a critical role in ensuring that the company’s responsible AI principles are actually reflected in the design of the products that ship.

“People would look at the principles coming out of the office of responsible AI and say, ‘I don’t know how this applies,’” one former employee says. “Our job was to show them and to create rules in areas where there were none.”

In recent years, the team designed a role-playing game called Judgment Call that helped designers envision potential harms that could result from AI and discuss them during product development. It was part of a larger “responsible innovation toolkit” that the team posted publicly.

More recently, the team has been working to identify risks posed by Microsoft’s adoption of OpenAI’s technology throughout its suite of products.

The ethics and society team was at its largest in 2020, when it had roughly 30 employees including engineers, designers, and philosophers. In October, the team was cut to roughly seven people as part of a reorganization….

About five months later, on March 6, remaining employees were told to join a Teams call at 11:30AM PT to hear a “business critical update” from [corporate vice president of AI John] Montgomery. During the meeting, they were told that their team was being eliminated after all.

One employee says the move leaves a foundational gap on the user experience and holistic design of AI products. “The worst thing is we’ve exposed the business to risk and human beings to risk in doing this,” they explained.

The conflict underscores an ongoing tension for tech giants that build divisions dedicated to making their products more socially responsible. At their best, they help product teams anticipate potential misuses of technology and fix any problems before they ship.

But they also have the job of saying “no” or “slow down” inside organizations that often don’t want to hear it — or spelling out risks that could lead to legal headaches for the company if surfaced in legal discovery.

More details here.

(via Eric Steinhart)