Norms for Publishing Work Created with AI

What should our norms be regarding the publishing of philosophical work created with the help of large language models (LLMs) like ChatGPT or other forms of artificial intelligence?

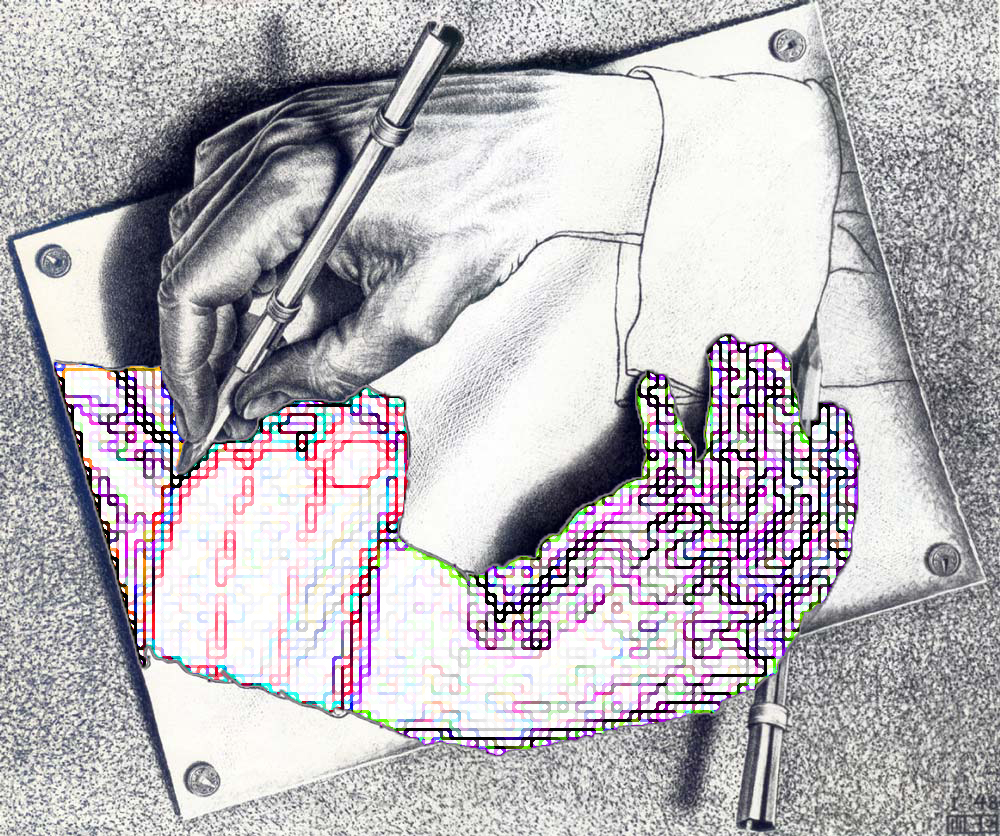

[Manipulation of M.C. Escher’s “Drawing Hands” by J. Weinberg]

First, no LLM tool will be accepted as a credited author on a research paper. That is because any attribution of authorship carries with it accountability for the work, and AI tools cannot take such responsibility.

Second, researchers using LLM tools should document this use in the methods or acknowledgements sections. If a paper does not include these sections, the introduction or another appropriate section can be used to document the use of the LLM.

A few comments about these:

a. It makes sense not to ban use of the technology. Doing so would be ineffective, would incentivize hiding its use, and would stand in opposition to the development of new effective and ethical uses of the technology in research.

b. The requirement to document how the LLMs were used in the research and writing is reasonable but vague. Perhaps it should be supplemented with more specific guidelines, or with examples of the variety of ways in which an LLM might be used, and the proper way to acknowledge these uses.

c. The requirements say nothing about conflict of interest. The creators of LLMs are themselves corporations with their own interests to pursue. (OpenAI, the creator of ChatGPT, for example, has been bankrolled by Elon Musk, Sam Altman, Peter Thiel, Reid Hoffman, and other individuals, along with companies like Microsoft, Amazon Web Services, Infosys, and others.) Further, LLMs are hardly “neutral” tools. It’s not just that they learn from and echo existing biases in the materials on which they’re trained; their creators can also build constraints and tendencies into them, affecting the outputs they produce. Just as we would expect a researcher to disclose any funding that has an appearance of conflict of interest, should we expect researchers to disclose any apparent conflicts of interest concerning the owners of the LLMs or AI they use?

Readers are of course welcome to share their thoughts.

One question to take up, of course, is what publishing norms philosophy journals should adopt in light of the continued development of LLMs and AI tools. Are there distinctive concerns for philosophical work? Would some variation on Nature’s approach be sufficient?

Discussion welcome.

I’m not sure what the right norms are, but I lose respect for academics who are keen to incorporate this mediocre writing aid into their craft. Have some self-respect.

I think we should require disclosing the use of an LLM, and perhaps where and how it assisted. But past that, I think we should just let the norms develop on their own.

I asked ChatGPT to “write me an essay titled ‘Norms for Publishing Work in Philosophy Created with AI’”. Here it is:

Norms for Publishing Work in Philosophy Created with AI

In recent years, there has been an increasing interest in using AI to create philosophical works. While this new approach has the potential to revolutionize the field, it also raises new ethical and practical questions. In order to ensure that AI-generated philosophical works are taken seriously and given the same respect as human-created works, it is necessary to establish clear norms for their publication.

One of the first norms for publishing work in philosophy created with AI is transparency. The use of AI should be clearly stated in the publication, along with a description of the process used and the training data set. This will allow readers to understand the limitations and biases of the AI model and evaluate the work accordingly.

Another important norm is originality. AI-generated works should be original and not simply a copy of existing ideas. They should not plagiarize the work of human philosophers and should be able to stand on their own as new and innovative contributions to the field.

Thirdly, the AI models used to create philosophical works should be evaluated for their fairness and biases. It is important to ensure that the AI model does not perpetuate harmful stereotypes or reinforce existing inequalities. This can be achieved through regular evaluations of the AI models and the training data sets used, and making necessary adjustments to eliminate biases.

Fourthly, AI-generated works should be subject to the same standards of critical evaluation as human-created works. They should be rigorously examined for their accuracy, consistency, and logical soundness, and should be able to withstand scrutiny from the philosophical community.

Finally, it is important to recognize the role of human philosophers in the creation of AI-generated works. While AI can assist in the process of generating ideas, human philosophers should still play a central role in shaping and refining the work. The final product should be a collaboration between human and AI, rather than solely the product of an AI model.

In conclusion, as the use of AI to create philosophical works becomes more prevalent, it is important to establish clear norms for their publication. Transparency, originality, fairness, critical evaluation, and human involvement are all crucial factors in ensuring that AI-generated works are taken seriously and respected within the philosophical community.

I worked in the field of bioethics for many years, in which the mantra of “new and unprecedented” was invoked over and over. The result was to ignore the contributions of major figures in the history of medical ethics, such as the two physician-ethicists who invented professional ethics in medicine, the Scot John Gregory (1724-1773) and the Englishman Thomas Percival (1740-1804), whose accounts remain relevant. The approach should be conservative: assume adequate norms (e.g., for confidentiality, informed consent, end-of-life care) already exist and do not need to be fashioned de novo.

LLMs may be a new and unprecedented technology, but on this conservative approach their products are easy to classify: words taken from a source other than the author. The norm for using such words in philosophy is well established: enclose them in double quotes and provide the reference. This applies to all work, whether of professors or students. Failure to do so constitutes plagiarism: representing the words or ideas of another source as one’s own.

Course syllabi should be clear that submitted work must be the student’s own, with the above proviso. If a student submits a text taken solely from an LLM, it must be placed in quotes and the reference provided. The syllabus should state that, because such work is not the student’s own, it will be assigned a failing grade. This is no different from a student copying out verbatim the text of a reference work such as the SEP and putting quotes around it. If a student submits a text from an LLM without attribution and is discovered, a failing grade should be assigned and the student reported to institutional authorities on a charge of plagiarism.

If an author presents such a text to a journal, it should be rejected. If an author presents LLM-generated text that is not properly cited, the paper should be rejected and the author informed that he or she has committed plagiarism. If the paper gets published and is later discovered for what it is, the paper should be publicly retracted and the author publicly labelled a plagiarist and banned for life from future submissions. Publishers’ counsel should participate in the preparation of such a policy.

In short, we already know what to do.

I think this is right, but I also think there’s a small wrinkle, given how things stand at present: part of the point of referencing the material we use is to enable independent verification. Attributing an LLM serves to differentiate its contributions, but it doesn’t enable anyone else to check the work, since one doesn’t know the discursive path used to prompt the answer that was given.

For the most part, this isn’t going to matter, but I can imagine the outlines of a future scenario where it might. I guess the current solution is for the researcher to save the log of their prompts, and maybe for them to make it publicly available? I don’t know.

“Words taken from a source other than the author”? That ship sailed the moment we allowed grammar improvers and the like. We are firmly in the domain of a sorites paradox. I have read answers by ChatGPT that felt remarkably similar to things I had written myself, and I understand how the tone of the prompt can get it to do that. Whose words are these, if they are in principle the same as those I would have produced, or did produce? Mine? And on the other hand, what if I read its response, then sleep on it, then put something to paper based on what I remember? Whose words are those? Mine? Yet is that not what learning is?

I deeply agree with your concerns, as deeply as I disagree with the notion that there is a boundary we can simply apply. No, I don’t already know what to do.

If things were as easy as the editors of Nature (and more recently Science) make them out to be, the world would be a very different place. In fact, the new LLMs confront us with the reality that there exists a continuum between non-intelligent tools and human intelligence. And ChatGPT is at a point on that continuum that is no longer indistinguishable from zero. I have laid that out in a recent analysis for the Sentient Syllabus Project (cf. https://sentientsyllabus.substack.com/p/silicone-coauthors), but let me briefly comment on your three points.

(a) I agree. Add to those points that, like it or not, our students’ job prospects are going to be massively disrupted: the value of whatever part of their work ChatGPT can do (and that is already a lot) has now effectively gone to zero.

(b) There is a technical problem here, in that we don’t document the process while writing, only the result. It is likely that the only practical approach will be simply to assume that everything has been co-written, and to focus on content, not the hand that produced it.

(c) That point seems hardly relevant by comparison with others. For example, an aspect that is often overlooked is the equalization of access to scholarly discourse for non-native English speakers. There are many similar ethical aspects that are currently drowned out: the fact that neurodiverse learners now have access to an infinitely patient and non-judgemental tutor, or the fact that when the AI asserts counterfactual statements, it never does so with malice. Moreover, I see a significant conflict of interest between journal editors and the community, where restrictive authorship practices are engineered to support bibliometric performance assessments rather than to reflect an autonomous decision by the authors about how best to represent the realities of their work.

Re. your final question – my analysis contains a concrete policy proposal as a first step.

Much more to be said, but thanks for picking up the topic!