Citation Rates by Academic Field: Philosophy Is Near the Bottom (guest post)

Academia’s emphasis on citation rates is “mixed news” for philosophy: it can bring attention to high-quality work, but tends to make philosophy and other humanities fields look bad in comparison with other areas, says Eric Schwitzgebel (UC Riverside), in the following guest post.

(A version of this post first appeared at The Splintered Mind.)

by Eric Schwitzgebel

Citation rates increasingly matter. Administrators look at them as evidence of scholarly impact. Researchers familiarizing themselves with a new topic notice which articles are highly cited, and they are more likely to read and cite those articles. The measures are also easy to track, making them apt targets for gamification and value capture: Researchers enjoy, perhaps a bit too much, tracking their rising h-indices.

This is mixed news for philosophy. Noticing citation rates can be good if it calls attention to high-quality work that would otherwise be ignored, written by scholars in less prestigious universities or published in less prestigious journals. And there’s value in having more objective indicators of impact than what someone with a named chair at Oxford says about you. However, the attentional advantage of high-citation articles amplifies the already toxic rich-get-richer dynamic of academia; there’s a temptation to exploit the system in ways that are counterproductive to good research (e.g., salami slicing articles, loading up co-authorships, and excessive self-citation); and it can lead to the devaluation of important research that isn’t highly cited.

Furthermore, focus on citation rates tends to make philosophy, and the humanities in general, look bad. We simply don’t cite each other as much as do scientists, engineers, and medical researchers. There are several reasons.

One reason is the centrality of books to the humanities. Citations in and of books are often not captured by citation indices. And even when citation to a book is captured, a book typically represents a huge amount of scholarly work per citation, compared to a dozen or more short articles.

Another reason is the relative paucity of co-authorship in philosophy and other humanities. In the humanities, books and articles are generally solo-authored, compared to the sciences, engineering, and medicine, where author lists are commonly three or five, and sometimes dozens, with each author earning a citation any time the article is cited.

Publication rates are probably also overall higher in the sciences, engineering, and medicine, where short articles are common. Reference lists might also be longer on average. And in those fields the cited works are rarely historical. Combined, these factors create a much larger pool of overall citations to be spread among current researchers.

Perhaps there are other factors as well. In all, even excellent and influential philosophers often end up with citation numbers that would be embarrassing for most scientists at a comparable career stage. I recently looked at a case for promotion to full professor in philosophy, where the candidate and one letter writer both touted the candidate’s Google Scholar h-index of 8, which is actually good for someone at that career stage in philosophy, but could be achieved straight out of grad school by someone in a high-citation field if their advisor is generous about co-authorship.

To quantify this, I looked at the September 2022 update of Ioannidis, Boyack, and Baas’s “Updated science-wide author databases of standardized citation indicators”. Ioannidis, Boyack, and Baas analyze the citation data of almost 200,000 researchers in the Scopus database (which consists mostly of citations of journal articles by other journal articles) from 1996 through 2021. Each researcher is attributed one primary subfield, from 159 different subfields, and each researcher is ranked according to several criteria. One subfield is “philosophy”.

Before I get to the comparison of subfields, you might be curious to see the top 100 ranked philosophers, by the composite citation measure c(ns) that Ioannidis, Boyack, and Baas seem to like best:

1. Nussbaum, Martha C.
2. Clark, Andy
3. Lewis, David
4. Gallagher, Shaun
5. Searle, John R.
6. Habermas, Jürgen
7. Pettit, Philip
8. Buchanan, Allen
9. Goldman, Alvin I.
10. Williamson, Timothy
11. Thagard, Paul
12. Lefebvre, Henri
13. Chalmers, David
14. Fine, Kit
15. Anderson, Elizabeth
16. Walton, Douglas
17. Pogge, Thomas
18. Hansson, Sven Ove
19. Schaffer, Jonathan
20. Block, Ned
21. Sober, Elliott
22. Woodward, James
23. Priest, Graham
24. Stalnaker, Robert
25. Bechtel, William
26. Pritchard, Duncan
27. Arneson, Richard
28. McMahan, Jeff
29. Zahavi, Dan
30. Carruthers, Peter
31. List, Christian
32. Mele, Alfred R.
33. Hardin, Russell
34. O’Neill, Onora
35. Broome, John
36. Griffiths, Paul E.
37. Davidson, Donald
38. Levy, Neil
39. Sosa, Ernest
40. Hacking, Ian
41. Craver, Carl F.
42. Burge, Tyler
43. Skyrms, Brian
44. Strawson, Galen
45. Prinz, Jesse
46. Fricker, Miranda
47. Honneth, Axel
48. Machery, Edouard
49. Stanley, Jason
50. Thompson, Evan
51. Schatzki, Theodore R.
52. Bohman, James
53. Norton, John D.
54. Bach, Kent
55. Recanati, François
56. Sider, Theodore
57. Lowe, E. J.
58. Hawthorne, John
59. Dreyfus, Hubert L.
60. Godfrey-Smith, Peter
61. Wright, Crispin
62. Cartwright, Nancy
63. Bunge, Mario
64. Raz, Joseph
65. Bostrom, Nick
66. Schwitzgebel, Eric
67. Nagel, Thomas
68. Okasha, Samir
69. Velleman, J. David
70. Putnam, Hilary
71. Schroeder, Mark
72. Ladyman, James
73. van Fraassen, Bas C.
74. Hutto, Daniel D.
75. Annas, Julia
76. Bird, Alexander
77. Bicchieri, Cristina
78. Audi, Robert
79. Enoch, David
80. McDowell, John
81. Noë, Alva
82. Carroll, Noël
83. Williams, Bernard
84. Pollock, John L.
85. Jackson, Frank
86. Gardiner, Stephen M.
87. Roskies, Adina
88. Sagoff, Mark
89. Kim, Jaegwon
90. Parfit, Derek
91. Jamieson, Dale
92. Makinson, David
93. Kriegel, Uriah
94. Horgan, Terry
95. Earman, John
96. Stich, Stephen P.
97. O’Neill, John
98. Popper, Karl R.
99. Bratman, Michael E.
100. Harman, Gilbert

All, or almost all, of these researchers are influential philosophers. But there are some strange features of this ranking. Some people are clearly higher than their impact warrants; others lower. So as not to pick on any philosopher who might feel slighted by my saying that they are too highly ranked, I’ll just note that on this list I am definitely over-ranked (at #66) — beating out Thomas Nagel (#67) among others. Other philosophers are missing because they are classified under a different subfield. For example Daniel C. Dennett is classified under “Artificial Intelligence and Image Processing”. Saul Kripke doesn’t make the list at all — presumably because his impact was through books not included in the Scopus database.

Readers who are familiar with mainstream Anglophone academic philosophy will, I think, find my ranking based on citation rates in the Stanford Encyclopedia more plausible, at least as a measure of impact within mainstream Anglophone philosophy. (On the SEP list, Nagel is #11 and I am #251.)

To compare subfields, I decided to capture the #1, #25, and #100 ranked researchers in each subfield, excluding subfields with fewer than 100 ranked researchers. (Ioannidis et al. don’t list all researchers, aiming to include only the top 100,000 ranked researchers overall, plus at least the top 2% in each subfield for smaller or less-cited subfields.)

A disadvantage of my approach to comparing subfields by looking at the 1st, 25th, and 100th ranked researchers is that being #100 in a relatively large subfield presumably indicates more impact than being #100 in a relatively small subfield. But the most obvious alternative method — percentile ranking by subfield — plausibly invites even worse trouble, since there are huge numbers of researchers in subfields with high rates of student co-authorship, making it too comparatively easy to get into the top 2%. (For example, decades ago my wife was published as a co-author on a chemistry article after a not-too-demanding high school internship.) We can at least in principle try to correct for subfield size by looking at comparative faculty sizes at leading research universities or attendance numbers at major disciplinary conferences.
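For readers who want to try this kind of comparison themselves, here is a minimal Python sketch of the approach just described: take the values at ranks 1, 25, and 100 in each subfield, drop subfields with fewer than 100 ranked researchers, and order the rest by the 25th-ranked value. The function names and the toy numbers are illustrative assumptions, not the actual Scopus data or the procedure used to build the charts.

```python
def rank_snapshot(citations_by_subfield, ranks=(1, 25, 100)):
    """Return {subfield: values at the requested ranks}, skipping small subfields."""
    needed = max(ranks)
    snapshot = {}
    for subfield, counts in citations_by_subfield.items():
        if len(counts) < needed:
            continue  # fewer ranked researchers than the deepest rank: exclude
        ordered = sorted(counts, reverse=True)
        snapshot[subfield] = tuple(ordered[r - 1] for r in ranks)
    return snapshot


def order_subfields(snapshot, ranks=(1, 25, 100), key_rank=25):
    """Order subfield names by the value held by the researcher at key_rank."""
    idx = ranks.index(key_rank)
    return sorted(snapshot, key=lambda name: snapshot[name][idx], reverse=True)


# Made-up citation totals, purely for illustration.
toy_data = {
    "Field A": list(range(1000, 0, -5)),  # 200 researchers: 1000, 995, ..., 5
    "Field B": list(range(300, 0, -2)),   # 150 researchers: 300, 298, ..., 2
    "Field C": [500, 400, 300],           # too few researchers, so excluded
}

snap = rank_snapshot(toy_data)
print(snap)
print(order_subfields(snap))
```

The same two functions work for h-index instead of total citations: pass in per-researcher h-indices rather than citation totals.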

The preferred Ioannidis, Boyack, and Baas c(ns) ranking is complex, and maybe better than simpler ranking systems. But for present purposes I think it’s most interesting to consider the easiest, most visible citation measures, total citations and h-index (with no exclusion of self-citation), since that’s what administrators and other researchers see most easily. H-index, if you don’t know it, is the largest number h such that h of the author’s articles have at least h citations each. (For example, if your top 20 most-cited articles are each cited at least 20 times, but your 21st most-cited article is cited less than 21 times, your h-index is 20.)
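The h-index definition can be written out in a few lines of Python. This is only a sketch of the standard definition given above; the function name `h_index` and the example citation record are made up for illustration.

```python
def h_index(citation_counts):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for position, citations in enumerate(ranked, start=1):
        if citations >= position:
            h = position  # the top `position` papers all have >= position citations
        else:
            break
    return h


# Hypothetical record: 20 papers with 40 citations each, then papers with 15, 3, and 0.
example_record = [40] * 20 + [15, 3, 0]
print(h_index(example_record))  # prints 20
```

The 21st paper has only 15 citations, so the index stops at 20 no matter how heavily the top 20 are cited.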

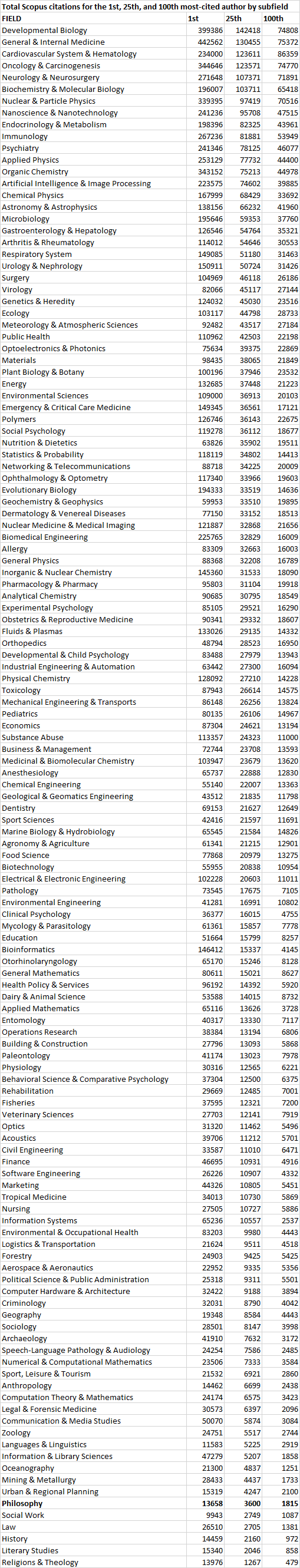

Drumroll please…. Scan far, far, down the list to find philosophy. This list is ranked in order of total citations by the 25th most-cited researcher, which I think is probably more stable than 1st or 100th.

Philosophy ranks 126th of the 131 subfields. The 25th-most-cited scholar in philosophy, Alva Noë, has 3,600 citations in the Scopus database. In the top field, developmental biology, the 25th-most-cited scholar has 142,418 citations, a ratio of almost 40:1. Even the 100th-most-cited scholar in developmental biology has more than five times as many citations as the single most cited philosopher in the database.

The other humanities also fare poorly: History at 129th and Literary Studies at 130th, for example. (I’m not sure what to make of the relatively low showing of some scientific subfields, such as Zoology. One possibility is that it is a relatively small subfield, with most biologists classified in other categories instead.)

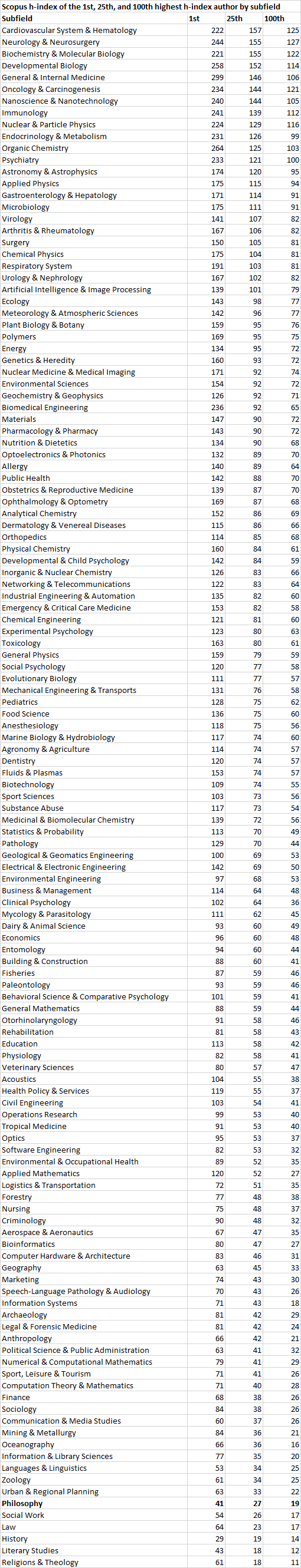

Here’s the chart for h-index:

Again, philosophy is 126th out of 131. The 25th-ranked philosopher by h-index, Alfred Mele, has an h of only 27, compared to an h of 157 for the 25th-ranked researcher in Cardiovascular System & Hematology.

(Note: If you’re accustomed to Google Scholar, Scopus h-indices tend to be lower. Alfred Mele, for example, has twice as high an h-index in Google Scholar as in Scopus: 54 vs. 27. Google Scholar h-indices are also higher for non-philosophers. The 25th ranked scholar in Cardiovascular System & Hematology doesn’t have a Google Scholar profile, but the 26th ranked does: Bruce M Psaty, h-index 156 in Scopus vs. 207 in Scholar.)

Does this mean that we should be doubling or tripling the h-indices of philosophers when comparing their impact with that of typical scientists, to account for the metrical disadvantages they have as a result of having fewer coauthors, on average longer articles, books that are poorly captured by these metrics, slower overall publication rates, etc.? Well, it’s probably not that simple. As mentioned, we would want to at least take field size into account. Also, a case might be made that some fields are just generally more impactful than others, for example due to interdisciplinary or public influence, even after correction for field size. But one thing is clear: Straightforward citation-count and h-index comparisons between the humanities and the sciences will inevitably put humanists at a stark, and probably unfair, disadvantage.

As someone who does a bit of work intersecting with another discipline, there’s an additional possible factor I’ve noticed reading those papers: other disciplines include citations for a much larger number of claims (to the degree that I often find myself thinking “wait, you thought you needed a citation for *that*?”).

I remember reading a paper about historical linguistics in Science and seeing that the first sentence was something like “People around the world speak many different languages. [1,2,3]”

The interesting thing is that philosophy seems to have more contested basic claims than other fields, so all else equal you would expect we would cite more for that reason. I wonder if part of the explanation is that philosophers often tend to be more incredulous/amazed that people would deny some of their basic views, despite the fact that they should be well aware that most everything in the field is hotly disputed. I repeatedly find referees who are shocked anyone would hold a view I reference, despite me providing citations of many people who do hold it. They seem unwilling to consider the possibility, or read the papers that provide evidence of its actuality. Weird stuff really…

This is just about comparisons with other disciplines.

Twenty-plus years ago, at my old University of Calgary, the Dean of Graduate Studies did the following. He looked at the total number of citations, over a 20-year period, for each department in the university. He then compared that with the total number of citations for all Canadian departments in that discipline, calculated the percentage of that total contributed by the Calgary department, and then ranked the university’s departments by those percentages. This in effect controlled for different citation practices in different disciplines. The result was that Philosophy, with a low absolute number of citations, ranked #3 out of 111 units in the university. Philosophy contributed 8.76% of total citations to Canadian philosophers in the period (it was 1981-2000) whereas Biochemistry (to take one example) had more than seven times as many citations but contributed just 4.13% of its total. Though being cited, in absolute terms, much less often than the biochemists, the philosophers came out higher in this ranking.

You can also do this by average citation impact, as the Dean also did. In this 20-year period the average Canadian biochemistry paper was cited 23.54 times and the average Canadian philosophy paper was cited 1.11 times. But the average Calgary biochemistry paper was cited only 21.19 times and the average Calgary philosophy paper 1.38 times, so the university’s philosophers were being cited more, relative to their discipline’s norms, than the biochemists, i.e. they were above their Canadian norm whereas the biochemists were below theirs. Here Philosophy ranked #9 in the university and Biochemistry #43.

The point is just that it’s possible to control for disciplinary citation practices and, when that’s done, Philosophy can look pretty good.
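To make the arithmetic concrete, here is a small Python sketch of the second normalization, using only the per-paper averages quoted above. The function name `relative_impact` is illustrative; the Dean's actual procedure and data source are not known beyond what is described here.

```python
# Average citations per paper, 1981-2000, as quoted above.
canadian_mean = {"Philosophy": 1.11, "Biochemistry": 23.54}
calgary_mean = {"Philosophy": 1.38, "Biochemistry": 21.19}


def relative_impact(department_mean, discipline_mean):
    """Department's citations per paper as a multiple of its discipline's norm."""
    return department_mean / discipline_mean


for field in canadian_mean:
    ratio = relative_impact(calgary_mean[field], canadian_mean[field])
    print(f"{field}: {ratio:.2f}x the Canadian average ({(ratio - 1) * 100:+.0f}%)")

# Philosophy: 1.24x the Canadian average (+24%)
# Biochemistry: 0.90x the Canadian average (-10%)
```

Dividing by the discipline's own average puts both departments on the same scale, which is why a department with far fewer raw citations can still come out ahead.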

A quibble. It’s not the discipline that looks good when these methods are used, but a particular Department . . . the Calgary department, in your example, relative to the Calgary biochemistry department.

There’s another concern here, and that is about the philosopher rankings. For some reason, Scopus counts of philosophy papers seem unreliable. Just pick a few well-known names (your own included, Tom). Look them up in Scopus and see how they rank relative to each other. Then, compare with GScholar or Web of Science. It’s very different.

Also, there are inexplicable discrepancies. Evan Thompson, one of the most cited Canadian philosophers, has 6300+ citations. So he should be in the top 25. Yet, he is 50th on the above list. I also looked up Will Kymlicka. Scopus gives him ~2800 citations which puts him comfortably in the top 100. But he’s not on the list.

I agree with Mohan’s point here, but I also agree with Tom’s point that systematic field-relative comparisons are one way to *partly* deal with the issue.

I’d add a further quibble, though. I suspect that citation counts vary between subfields within philosophy in ways that systematically disadvantage some subfields relative to others. My guess is that philosophers whose work connects with empirical psychology look relatively good by these measures compared to other philosophers (explaining, for example, my own anomalously high rank), while historians of philosophy get many fewer citations relative to their impact. This might mirror trends we see in comparing philosophy with the sciences: philosophy of psychology has relatively more, and history of philosophy relatively less, article focus vs book focus, coauthorship, citation of current scholarship, and uptake by adjacent high-citation fields.

Mohan, the “top 100” list of philosophers above isn’t ordered by total citations but rather by a complex composite score c(ns) which excludes self-citations, includes separate components for total citations and h-score, and reduces the value of citations for co-authorship (especially for non-first-authorships). Compared to other philosophers, Thompson has a very high number of citations, but his h-index adjusted for co-authorships is comparatively lower.

Mohan: I was giving a specific example to illustrate a more general point, that it’s possible to counteract, to some extent, the low citation rates in philosophy by controlling for the differing rates in different disciplines. The Calgary data were just the illustration. And Eric’s post actually starts by talking of, and worrying about, administrators using citation data as evidence of scholarly impact. I assume these are administrators at one university evaluating units at just their university, as the Calgary Dean was.

I don’t know which citation data he used, though I suspect (this was around 2001) it was the Web of Science or ISI (or whatever it was called then). And I agree that different databases can yield different results, with Scopus seeming especially unreliable the one time I looked at it. When I’ve been on our university’s committee for selecting University Professors it’s been understood, especially by the scientists, that Google Scholar will give the highest h-index for a given person, the Web of Science the next highest, and Scopus the lowest.

Eric: I entirely agree about different citation rates in different subfields of philosophy, and that would be very hard to control for. But if one issue is administrators using citation data to assess departments in their own university, as the Calgary Dean was, it may not be such a big problem for that. Isn’t the distribution among subfields at least roughly the same in most Philosophy departments, i.e. they all have historians of philosophy, philosophers of mind, etc. in roughly the same proportions? If so the differences between subfields will be washed out in assessments of whole departments.

I don’t think that last point will be true, at least in the US. There are departments like, for example, Washington University in St. Louis, that have tried to create research clusters in particular fields. In Wash U’s case, they’ve created a really impressive group in empirically oriented philosophy of mind.

Now if you do specialise in that, you’ll do very well by a citation metric. But a department that builds up a speciality in, say, history of philosophy (as Cornell did for a long time, and I guess still does) will be penalised by citation counts even if you normalise to what’s common in philosophy.

It’s true that if every department has a similar mix of what people work in, as is kind of true in some countries, this kind of normalisation will work. But the US is going to be a special case.

Maybe philosophers should learn to give more credit to others who have contributed to the same debate. At least when reviewing for journals, I find that a noticeable portion of papers that came to me have ignored relevant papers that could be easily discovered by googling keywords, like titles literally containing the keywords.

Philosophers ignoring each other also sometimes leads to poor research quality, like constantly reinventing the wheel. But of course this may also just be reviewers and journals acting irresponsibly and accepting papers that ignored obviously relevant research.

Your last paragraph is particularly salient. We simply don’t have the time (and are not adequately compensated) to do peer review that would catch omissions of this kind. I believe this is also exacerbated by the fact that we are often asked to review articles that are only tangentially related to our areas of niche expertise.

This worries me deeply. It does not inspire confidence in the originality, accuracy, or meaningfulness of our research if we can neither slow down and make the time to read broadly, nor provide genuinely comprehensive, expert feedback to our peers. I truly wish we (especially members of hiring committees and funding bodies) would reconsider the incentives that define success and structure labor in our field.

I agree on all points.

On a related note, I recently had a referee report that complained that I had cited *too many* relevant works in a few of my footnotes. I was citing those in the literature who hold the views I was referencing so that it was clear I wasn’t strawmanning (not to mention it helps future scholars see the literature and dialectic, and saves them time, etc). And of course the other referee spent a paragraph complimenting this very same practice…

Does anyone else ever read these long, tiresome meditations on citation counts and feel we’ve lost our way? (Not intended as a slight against the author of this post.)

Surely some do feel this way. But if we want to justify our funding, it’s also important to explain to our funders, often taxpayers, how impactful our research is.

I guarantee you that the average taxpayer does not know, much less care, what a citation count is. If I wanted to explain the importance of my work to a layperson, the last thing I would say is: “some other academics mentioned me.” I would describe the work.

Funding agencies know what these are, but only because they are staffed by academics and career bureaucrats who decided the convenient way to measure “impact” was a relatively arbitrary number.

Not to be a buzzkill, but when I say we’ve lost our way I mean I see this emphasis on careerist nonsense instead of just trying to produce good work. Even if you find this game a grotesque distraction from doing actual philosophy, you have no choice but to play in order to get a job.

In an attempt to convince a non-academic of the importance of your work, you might not tell them “some other academics mentioned me” but also would not want to tell them “my colleague has been cited 100 times and I have been cited 10 times, but our work is equally as impactful” (I doubt the average non-academic would find this plausible, and rightfully so). And you probably wouldn’t engender much confidence in the methods, reliability, etc of your field if you were to say “I don’t think it’s necessary or important to cite work relevant to mine”.

As for citation counts being relatively arbitrary, I am not sure what you mean. If you mean, per the post above, that they are field- and subfield-dependent in various ways, that seems true. But if you mean they don’t correlate fairly highly, internal to a field (or subfield or whatever), with impact, I’m not sure what the evidence for that would be. Indeed, the post above starts out with evidence to the contrary, though of course there are some caveats (no one is arguing citation counts are the whole story).

Finally, you seem to think that it’s clear what is “good work” and what is “actual philosophy”, but the issue is that other people think it’s clear that you are wrong about precisely the same philosophy (i.e. they think what you think is good work is complete trash). One way to move forward from this clash of judgments is to see what a broader swathe of the relevant experts think. Enter, e.g., citation counts…

Re: your first point, why wouldn’t this only show that citation counts are compelling *when compared against other citation counts*? Agreed that no one would admit to their *lower* citation counts. But the point was that laypeople wouldn’t be compelled by citation counts in the non-comparative sense. I can see that it’s better to collect rare stamps if you’re a stamp collector—but that still won’t make me care about stamps. Mostly agreed about the other replies, though.

As for OP, I think the “we’ve lost our way” stuff is better aimed at the professionalization of philosophy in general. Given that we *have* professionalized, citation counts don’t seem arbitrary. But, like, maybe industrial-grade philosophy isn’t so… necessary or valuable. Idk.

I took Grad Student to be opposed to citation counts being compelling or relevant in general, whether comparatively used or not. Further, my second example (second hypothetical statement to a non-academic) covers the non-comparative sense because it indicates that it is not compelling to have an attitude where thorough citation practices and thus citation counts are irrelevant in general.

I don’t see how Grad Student could be charitably read in the comparative sense, but oh well.

As for the second example, I don’t think it’s tracking the importance of citations. A layperson who knew little about philosophy would probably just find it arrogant. It’s not like she would find it unconvincing because she agrees that citations matter.

This just seems like a quibble to me. An engineer can justify herself to a layperson by saying “I made this gizmo, which you use everyday.” A city planner can say “I made your commute easier.” An artist can say “I painted that mural downtown.” Etc. An academic philosopher saying “I’m cited a lot by other philosophers” is just obviously lame in comparison, and that’s also obviously what the OP meant. That’s not to say that everyone else always has convincing reasons (maybe a lot of gizmos suck), or that philosophers have no alternative justifications (I think they probably do). It’s just to say that the point about using citation rates to justify one’s work to taxpayers is pretty dissimilar from these other, more clearly compelling, responses.

Well, Grad Student claimed “that the average taxpayer does not know, much less care, what a citation count is”, and if they would care in the context of hearing and evaluating a comparative claim about citation counts, then they would care about citation counts to that extent. My point is that the hypothetical statement Grad Student picked wasn’t a great one to reveal whether a non academic would care about citation counts. Not sure what is so uncharitable here.

Also, the comparative sense is precisely the sense that is often relevant for funding, given that taxpayer money is finite and choices need to be made about which fields deserve the money. Grad Student’s response, involving the hypothetical statements to non academics, was in response to read more cite more’s point about funding.

As for the rest of your comment, I think, like Grad Student, you’ve picked another hypothetical statement that minimizes the position read more cite more is espousing. Again, as part of a broader case for the importance of philosophy (that doesn’t just rely on claims about citation counts; no one is claiming this should be the full story), citation counts can play a compelling role in justifying the impact of philosophy on other disciplines, the quality of philosophy’s disciplinary scholarly norms, the productivity of philosophers relative to one another and other fields, etc.

The main mistake is agreeing with deans to play these silly games.

We need to get scientists to cite less!

Exactly. And we certainly don’t need to move to papers in which there are massive bibliographies full of papers that are barely engaged with.

Tom (and Mohan)

there is something a bit odd about the Calgary dean’s method that is worth noting. In the aggregate, the difference between a paper from the average Canadian philosophy department and a paper from the UofC philosophy department is about 0.27 citations. The difference in the case of biochemistry is 2.35 citations. These are the sorts of differences that do not really matter – they are noise in the system. I hope decisions about policy and resource allocation were not made on such small (and insignificant) differences. I think citation data can be insightful. But in this case it is not. (I speak as yet another person who once worked at Calgary)

Decisions about resources weren’t, as I recall, based on the data. The data were ignored. And perhaps the main use of them I was envisaging is defensive: some department says they deserve more resources than Philosophy because their research has more impact — look at the number of our citations! — and that can be countered by controlling for disciplinary practices. Shouldn’t we know how to do that?

And are the differences you mention so small? That 0.27 citations difference means the average Calgary philosophy paper had 24% more citations than the average Canadian one. Is +24% so small? The average Calgary biochemistry paper had 90% as many as the average Canadian one, a shortfall of 34 percentage points from Philosophy. Another department that prided itself on its research excellence, and had persuaded the administration of that, had 69% of the average citations per paper in their discipline; the Physics department had 62% of the average in theirs. Is a difference between +24% and -31% or -38% too small to matter?

I wouldn’t put much weight on a small difference of this kind, e.g. +24% vs. +22%, but I don’t see why large differences of the kind above aren’t indicative of something. Not of everything, of course, and not unquestionably indicative given what you rightly note is the arbitrariness or noise in the system. But how plausible is it that a 62-point gap is just the result of noise?

I worth my place too in the index of philosophers.

In my area of continental philosophy cites of scholars like Judith Butler are as common in literary, gender, and sexuality studies and in critical race and political theory as in philosophy (seemingly narrowly construed). Perhaps the humanities taken as a whole would make for better comparisons. After all, Butler recently served as president of the MLA.

Wendy Lochner Columbia UP