The Guardian’s Undergraduate Philosophy Rankings

The Guardian has published its 2019 “University Guide,” a set of rankings of schools aimed primarily at undergraduate students. The guide includes discipline-specific rankings, including for philosophy.

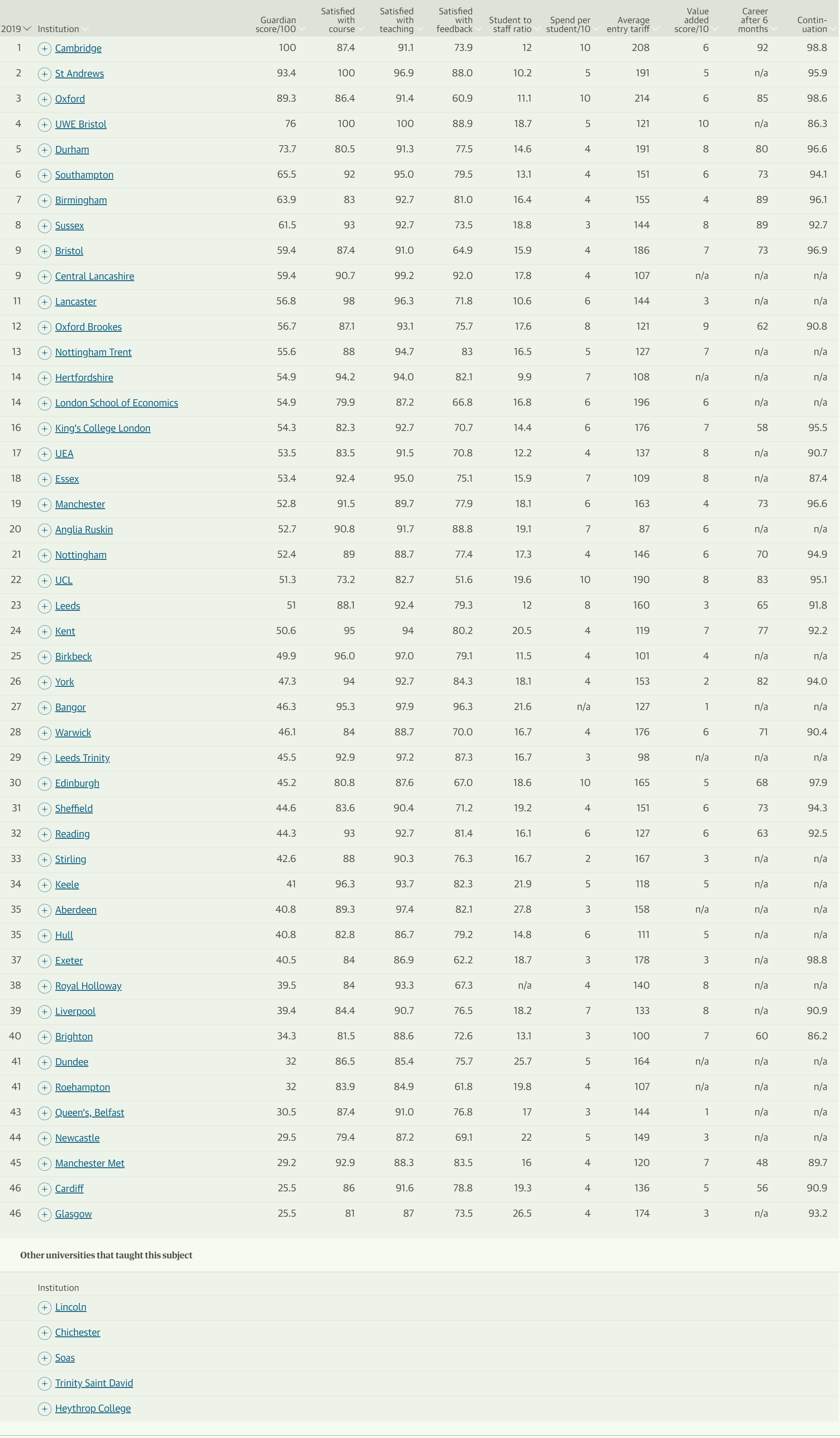

Each department’s “Guardian Score” is based on a combination of factors, including final-year student evaluations of the course of study and the teachers, the staff-to-student ratio, the money spent on each student, the academic profile of the average entrants to the programs of study, an assessment of how much students learn in the program, the extent to which graduates of the programs are employed or are pursuing graduate degrees, and the extent to which first-year students continue to study the subject in their second year.

Here are the philosophy rankings:

You can view the rankings on The Guardian’s site, here, and further information about the methodology here.

As nice as it is coming 6th, these tables are worth taking with a grain of salt. For example, I believe that “Value added” is calculated effectively by comparing the A-level grades of your entrants with the degree classification (1st, 2:1, etc.) that they come out with. One obvious way to score well on that metric is to just start handing out higher grades.

(To be clear, a university might do well on this measure genuinely because it *is* teaching well and giving out *deserved* high grades. But the measure wouldn’t discriminate between that and a department that just adopts an explicit policy of grade inflation.)

Just to be clear, these rankings are nothing like the Leiter Rankings, which are based entirely on the reputations members of departments have amongst their colleagues who are doing the ranking. The Guardian league table supposedly represents the quality of education an undergraduate would get should she enroll and complete one of these philosophy courses. How is that measured? A large component of this – 25% – consists of National Student Survey (NSS) scores, which are just normal student evaluations, but focused on the overall course instead of a specific class/module. Also important is the confusing “Value Added” category (15%). That last category is effectively this: are students doing worse than, the same as, or better than expected in the final classification (i.e., in their final GPA) *given their A-level (or equivalent) scores* (i.e., given their scores on the standardized test they took in order to apply for university). This approach benefits schools with mark inflation, and it also benefits schools that admit very, very privileged students (since these students are probably as likely to receive high marks in university as they are to have received high marks in their entry qualifications).

Finally, employment prospects – graduate employment or postgrad positions at six months after graduation – contribute 15% to the final score. It’d be interesting to hear whether people think that is high.
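To make the weighting concrete, here is a minimal sketch of a weighted composite score and how a small change in one component can flip a ranking. Only the three weights (25% NSS, 15% value added, 15% employment) come from the discussion above. Everything else is an assumption for illustration: the remaining 45% is lumped into a single hypothetical "other" component, the departments and their numbers are made up, and the Guardian's actual calculation may standardise or combine components differently.

```python
# Weights for NSS, value added, and careers are the ones quoted above.
# "other" is a hypothetical stand-in for the remaining 45%, whose
# breakdown is not given in this discussion.
WEIGHTS = {"nss": 0.25, "value_added": 0.15, "careers": 0.15, "other": 0.45}


def composite(scores):
    """Weighted sum of component scores, each assumed to be on a 0-100 scale."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


def rank(departments):
    """Return department names ordered from highest to lowest composite score."""
    return sorted(departments, key=lambda d: composite(departments[d]), reverse=True)


# Entirely made-up departments and numbers, for illustration only.
departments = {
    "Dept A": {"nss": 80.0, "value_added": 60.0, "careers": 70.0, "other": 65.0},
    "Dept B": {"nss": 78.0, "value_added": 62.0, "careers": 70.0, "other": 65.0},
}

# Same data, but with Dept B's NSS score nudged up by a single point.
bumped = {name: dict(scores) for name, scores in departments.items()}
bumped["Dept B"]["nss"] += 1.0

if __name__ == "__main__":
    print("original order:", rank(departments))
    print("after +1 NSS for Dept B:", rank(bumped))
```

With these invented numbers, a one-point shift in a survey score is enough to swap the two departments, which is the kind of fragility at issue when component scores are close together.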

For more methodology, see here: https://bit.ly/2xlIpMM

You can also download an Excel file that has some of the data.

I’m biased because of my 5 years at Leeds’ school of philosophy (religion, and history of science), which I think is ridiculously underrated in philosophy on this league table. It’s a pretty remarkable place to get an education. But then again, a lot of rather obviously excellent philosophy courses are ranked below Leeds’ (Edinburgh, e.g.), so it seems to me that something has gone terribly wrong with this league table.

It is hard for me to take seriously.

One last thought: I judge league tables to express values at odds with a serious commitment to university education. Not going to go into it at length here, but rankings, in general, strike me as reflecting a failure to seriously consider the heterogeneous and competing values of university education. The Guardian rankings, with their corporate-speak (“value added”, “career prospects”) and their heavy reliance on student surveys, which reflect less students’ actual experiences and more their expectations and how those have been managed, reduce a three-to-four-year complex university education to a number – an exchange value, one might say. It’s commodification at its liberal peak, and it’s a shame. (It sells clicks, though, and the Guardian is just one of many papers that publish such league tables.)

The Guardian’s league tables are shockingly bad for philosophy, and have been for at least the last several years. To take one example among many: no, Nottingham Trent is not a better place to study philosophy than KCL. Prospective undergrads are far better off simply sorting by average UCAS entry tariff. That’s not a great measure either, but better than this nonsense.

I hope no prospective undergraduates take this too seriously. Unfortunately, some probably will. I know this site likely isn’t read by too many prospective undergrads, but Justin, if you feel the need to point out concerns with the methodology of the PGR, then I really think you should include something similar here. These rankings could seriously mislead prospective undergrads about the state of philosophy departments in the UK. Prospective undergrads are incredibly vulnerable and susceptible to misinformation, especially since they often have no philosophy teachers to advise them – philosophy A level is still quite rare.

“Each department’s “Guardian Score” is based on a combination of factors, including … the extent to which first-year students continue to study the subject in their second year.”

It’s actually based on the extent to which first-year students remain on-course at all in the second year. In the UK, you (mostly) apply to do a specific subject, and then do that subject all the way through. The US model of applying to a university, selecting what to do when you’re there, and putting together a degree from a mixture of subjects, doesn’t happen much in the UK.

(But the overall rankings are in any case laughably unreliable, so it’s not like it matters much!)

Waaaay back in the day, when I was Head of Philosophy at Leeds, I asked a colleague to take a look into the mechanisms behind The Guardian’s rankings. He discovered that the overall ‘score’ and therefore relative ranking was extraordinarily sensitive to quite small changes in the scores recorded in the various categories – e.g. the ‘National Student Survey’ score, which is notoriously fragile. I wrote to the newspaper about my colleague’s findings but didn’t ever get a satisfactory response. “Laughably unreliable” just about sums it up!

(Ps Thanks Matt!)