Philosophers Awarded Over $500,000 To Study Autonomous Vehicles

A group of three philosophers and a civil engineer have been awarded a grant from the National Science Foundation (NSF) to “construct ethical answers to questions about autonomous vehicles, translate them into decision-making algorithms for the vehicles and then test the public health effects of those algorithms under different risk scenarios using computer modeling,” according to a press release posted at Phys.org.

The principal investigator on the project, “Ethical Algorithms in Autonomous Vehicles,” is Nicholas Evans, assistant professor of philosophy at the University of Massachusetts, Lowell. He will be working with Heidi Furey, assistant professor of philosophy at Manhattan College, Ryan Jenkins, assistant professor of philosophy at California Polytechnic State University (CalPoly), and Yuanchang Xie, assistant professor of civil and environmental engineering at the University of Massachusetts, Lowell.

The grant is for $556,650 and is expected to fund three years of work.

Here’s the abstract of the project:

This project supports a team of researchers, including experts in moral philosophy and transportation engineering, who plan to address ethical issues that arise in the development of computer algorithms for autonomous (self-driving) vehicles. The project will address concerns over the expression of ethical values in self-driving vehicles when (for example) the vehicle detects an imminent and unavoidable crash and must select among navigation options, such as colliding with a crowded bus or with a lone motorcyclist. It aims to develop algorithmic representations of ethical decision-making frameworks for autonomous vehicles, and then model these frameworks over a range of contexts for their effect on risk management in traffic networks and for their expected public health impact. The overarching aim of the project is to promote principled approaches to ethical decision-making in complex, autonomous systems, and to prepare designers and engineers to encode ethical behavior into such systems. Research mentoring is to be coordinated through the host institution that will enable undergraduate and graduate engineers to participate in the project. A summer intensive will bring together students from diverse backgrounds to work with the project team and invited scholars. A postdoctoral fellow will conduct research on the ethics of autonomous vehicles, and assist in project administration.

The project has two specific aims. It aims to develop ethical algorithms that can be converted into computer code for use in autonomous vehicles. Team members will review the literature on existing decision-making for autonomous vehicles, and then develop conceptual, algorithmic representations of ethical behavior that can be converted into computer code. They will also model changes in traffic risk profiles and expected public health impact of these algorithms; specifically, they will apply injury severity and crash frequency modeling using algorithms developed in meeting the first aim in a range of traffic contexts and market penetration scenarios. They will also develop a model for the expected public health benefits from these algorithms using a model of resource and time-based triaging as a starting point. Both aims support training of scholars and practitioners sensitive to the ethical implications of autonomous vehicles. These pedagogical aspects have been designed to promote diverse interactions between STEM students and practitioners, and they will serve to improve STEM education and educator development.

Why should we even want this technology?

It’s being shoved down our throats without any real discussion, as if we are simply to accept that one day we will have to live in a society where we’re ferried around by a machine like we’re little children at an amusement park. Sad.

There are plenty of people who already pay to be ferried around by humans, whether in taxis, buses, trains, or even ferries. Machines are much cheaper than human drivers, so lots of people would want this. Right now we are (at least, in most parts of the United States outside of a few dozen square miles at the heart of the largest cities) forced to live in a society where we ferry ourselves around in heavy machines – plenty of people would at least consider it a step up to not have to pay attention to the dreary job of road safety, even if this isn’t as much of an improvement as suddenly having walkable neighborhoods with transit access to larger cores.

I would be surprised to discover that most people wouldn’t prefer letting the car drive itself in most circumstances, and the companies that are spending millions researching this seem to think enough people will prefer it that they’ll some day be able to recoup their investment. I don’t think anyone is forcing this technology on anyone else – at least, no more than automotive technology is *generally* forced on most of us at present.

I’m not sure *exactly* what the original commenter had in mind. And it’s true that the grant is a great feather in the cap of these philosophers, and philosophy more generally. And it’s true that we want philosophers to get these feathers in a social climate where academia is threatened.

But I find it disappointing that a potential critique of capitalism is so quickly overlooked in this case. Relative to other needs, say funding for basic sanitation and vaccines in some places of the world, we really DON’T need this technology. Sure it’s a step up. Do those of us in the global North really need more of these steps up? Aren’t we extremely privileged to have cars of any sort in the first place? And isn’t that very privilege what’s driving climate change that disproportionately harms people in the poorest places, i.e., those potentially without sanitation and vaccines?

Sure, beneficence is not what capitalism’s about. Sure, under capitalism, this technology might make sense, i.e. represent some kind of benefit. But the point is not to evaluate this technology *in capitalism’s terms.*

Hi Kenny,

I would like to qualify my original comment by drawing a distinction, one on which blah blah blah. On this modified reading, blah blah blah. That, I hope, lends some credence to my original–though now modified thesis–that blah blah blah*.

There is empirical support for blah*. For instance, according to Smith, Levins, and Terry (2010), blah blah blah. Indeed, this finding was strongly corroborated by a later study conducted by Craig, Williamson, and Jenson (2014), which showed a statistically-significant correlation between blah blah and blah blah blah.

I think, then, if we restate your argument in formal premises it might look something like this:

1) Blah blah blah and blah blah blah, so probably blah blah blah.

2) If blah blah blah, then blah blah blah.

3) Blah blah or blah blah blah

4) Not blah blah

5) So blah blah blah

Ergo, probably blah blah blah

This is all to say that, after reading your post and reconsidering my original position, I now see that you are right and recognize the error of my ways. Everyone should just get in the car and not ask questions, because apparently that’s what we’re all going to be doing anyway, regardless of what anyone says. blah.

One question immediately came to mind here—when it comes to road rage, quo vadis?

Perhaps one might say that autonomous vehicles will eliminate it since vehicles will regulate traffic in a safe and rational way. I’m quite skeptical about that take. At least in the interim, when streets will be filled with an assortment of human-driven and driverless cars, no doubt plenty of aggressive drivers will become inflamed by autonomous vehicles driving in the same rational and safe way that some drivers do now, which already provokes tailgating, unsafe passing, and worse. Add in factors like so many states increasingly allowing permit-less concealed carry of firearms, and a rise in road-rage shootings targeting autonomous cars and their passengers seems likely. Even when autonomous cars become the norm, passengers will either become frustrated with their own vehicles and their “stupid rules” and take it out on them in violent ways that still might result in accidents, or, more likely, take advantage of illegal software overrides to effectively remake their autonomous vehicles into “old-school” driver-controlled cars.

Yeah, you can change the car–but you can’t change the human nature of car owners. But maybe cars can be programmed to sedate or neutralize belligerent owners. I for one would welcome such automobile overlords–given what I see on the roads now.

Is there any reason to think that philosophers are valuable for this kind of thing? I mean, except for the value of serving as a fig leaf for the corporations developing the vehicles? “But we hired moral experts to design the algorithms, so clearly we weren’t negligent, your honor!”

I have to admit I’m a bit confused as to what kind of extensive role philosophers could usefully play in such a project.

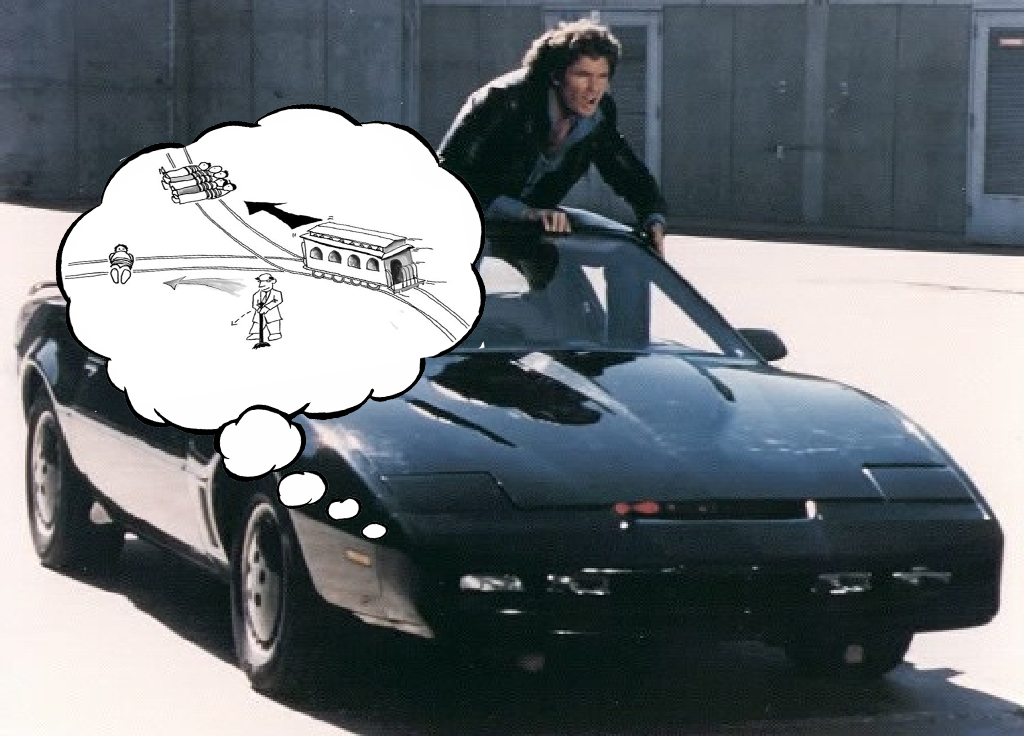

Yes, AI cars face trolley-problem-type issues, but $500,000 isn’t going to resolve the continuing philosophical debate about what the right answer is. Ultimately, you need to simply *pick* a philosophical position about the right way to make such decisions and implement it, and it’s not clear what novel philosophical research can offer over and above what is already in the literature (not that there isn’t plenty more to say generally about the trolley problem).

It’s not at all clear to me how the fact that these trolley-problem-type cases are suddenly closer to being actual rather than merely hypothetical particularly moves the issue forward, but it’s plausible I’m missing something. Of course, even if the philosophers just do bog-standard traditional moral philosophy I’m happy to see them get 500k, but I’m just confused about the supposed particular value.

—

As a pure utilitarian I believe they just need to come up with an algorithm that does a good job of generally choosing the outcome with the highest utility, and that will mostly be a job for programmers and the people who do the statistical work of computing QALYs. So maybe it’s this perspective that is causing me to miss what added value this work has over the extensive existing literature on trolley problems.
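
For readers who want to see what “choosing the outcome with the highest utility” might look like mechanically, here is a minimal sketch. It is not taken from the funded project: the `Outcome` type, the function names, the maneuver labels, and all the numbers are hypothetical, and it assumes that someone has already estimated a probability and a QALY loss for every possible result of each maneuver, which is where most of the hard work would actually lie.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One possible result of a maneuver (hypothetical structure)."""
    probability: float  # chance this result occurs if the maneuver is chosen
    qaly_loss: float    # estimated quality-adjusted life years lost


def expected_qaly_loss(outcomes):
    """Probability-weighted QALY loss for one maneuver."""
    return sum(o.probability * o.qaly_loss for o in outcomes)


def choose_maneuver(options):
    """Return the name of the maneuver with the lowest expected QALY loss.

    `options` maps a maneuver name to its list of possible outcomes.
    Minimizing expected loss is the same as maximizing expected utility
    when utility is measured purely in QALYs.
    """
    return min(options, key=lambda name: expected_qaly_loss(options[name]))


# Made-up numbers for illustration only.
options = {
    "brake_straight": [Outcome(0.5, 10.0), Outcome(0.5, 0.0)],
    "swerve_left": [Outcome(0.25, 30.0), Outcome(0.75, 0.0)],
}
best = choose_maneuver(options)
```

With these invented inputs, braking has an expected loss of 5.0 QALYs against 7.5 for swerving, so `best` is `"brake_straight"`. The selection step is trivial; the contested part is what goes into the numbers and whether QALYs are the right measure at all.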

I’ve given this a lot of thought. About 3 minutes.

After $556K has been spent, the answer will be: randomize the outcome of a non-desired event. If an algorithm selects one victim over another, or even one of many, the chosen victim will be from an oppressed group. The algorithm will need to be an open road map that allows people to choose their mode, path, and destination.

Where can I find someone to give me $500K for this post, please?
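
Mechanically, at least, the randomization proposal is simple. A minimal sketch, assuming the set of available maneuvers is already known; the function name and maneuver labels are hypothetical and not from the project.

```python
import random


def choose_randomly(maneuvers, rng=None):
    """Pick one maneuver uniformly at random, ignoring who would be harmed."""
    if rng is None:
        rng = random.Random()  # unseeded generator for real use
    return rng.choice(list(maneuvers))


maneuvers = ["brake_straight", "swerve_left", "swerve_right"]
# A seeded generator makes the choice reproducible, e.g. for auditing.
picked = choose_randomly(maneuvers, rng=random.Random(0))
```

Every maneuver is equally likely, so the code never ranks one potential victim above another. Whether that counts as fair is exactly the kind of question the code cannot answer.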