Citation Patterns Across Journals (guest post by Brian Weatherson)

“Anything can happen in a small sample, but it was enough to suggest to me a hypothesis: There is no such thing as a generalist philosophy journal.”

The following is a guest post by Brian Weatherson, Marshall M. Weinberg Professor of Philosophy at the University of Michigan. It originally appeared at his blog, Thoughts, Arguments, and Rants.

I’ve been interested for a while in the different things that get attention in different philosophy journals. Part of what got me interested in this was looking at the ways that different well known articles get cited, or not, in different journals. Below is a table showing how many times (according to Web of Science) six prominent turn-of-the-century articles were cited in various journals. The articles are:

- Elizabeth Anderson, What is the Point of Equality, Ethics, 1999

- Peter K. Machamer, Lindley Darden & Carl F. Craver, Thinking about Mechanisms, Philosophy of Science, 2000.

- David Lewis, Causation as Influence, Journal of Philosophy, 2000.

- Michael G. F. Martin, The Transparency of Experience, Mind and Language, 2002.

- James Pryor, The Skeptic and the Dogmatist, Noûs, 2000.

- Jason Stanley & Zoltán Gendler Szabó, On Quantifier Domain Restriction, Mind and Language, 2000.

Here are the 20 journals these six papers have (collectively) been most cited in, along with a count of how often they were cited in each.

| Journal | 1 (Anderson) | 2 (Machamer et al) | 3 (Lewis) | 4 (Martin) | 5 (Pryor) | 6 (Stanley and Szabó) |
|---|---|---|---|---|---|---|
| Phil Studies | 3 | 7 | 15 | 19 | 51 | 19 |
| Synthese | 0 | 50 | 8 | 4 | 23 | 9 |
| Phil of Science | 0 | 69 | 4 | 0 | 0 | 0 |
| PPR | 1 | 4 | 5 | 10 | 21 | 5 |
| Erkenntnis | 0 | 17 | 13 | 2 | 7 | 4 |
| Mind & Lang | 0 | 1 | 1 | 5 | 1 | 28 |
| Biology & Phil | 0 | 30 | 4 | 0 | 0 | 0 |
| AJP | 0 | 1 | 8 | 4 | 11 | 6 |
| BJPS | 0 | 17 | 9 | 0 | 1 | 1 |
| Nous | 1 | 1 | 7 | 3 | 6 | 7 |
| Ethics | 21 | 0 | 0 | 0 | 2 | 0 |
| Analysis | 1 | 0 | 3 | 2 | 5 | 10 |
| Phil Psych | 1 | 16 | 1 | 2 | 1 | 0 |
| J Phil | 4 | 1 | 10 | 0 | 3 | 1 |
| Mind | 2 | 0 | 0 | 7 | 5 | 5 |
| J of Pol Phil | 16 | 0 | 0 | 0 | 0 | 0 |
| Phil Review | 1 | 0 | 6 | 1 | 6 | 2 |
| CJP | 5 | 0 | 3 | 0 | 3 | 4 |
| Econ and Phil | 12 | 3 | 0 | 0 | 0 | 0 |
| EJP | 1 | 0 | 0 | 8 | 5 | 1 |

A few quick notes about this data.

- It all comes from Web of Science, and as I’ve discussed previously, there are flaws with that data. I think the flaws don’t make a huge difference to the points I’m making, but they exist.

- I’ve tried to sort the citations to Nous from the citations to Philosophical Issues and Philosophical Perspectives, which Web of Science sometimes (but only sometimes) collapses.

- These are the journals that most cited the six articles in Web of Science. If a journal that Web of Science doesn’t index cited the six of them 15 or more times, I wouldn’t know about it. And Web of Science added a lot of journals in 2008, so even some journals it now indexes won’t appear there.

The last point affects one thing that might jump out at you from that list, or at least jumped out at me. These are almost all pretty high prestige journals. And, conversely, almost all the high prestige journals are on the list. (Philosophical Quarterly is probably the most notable omission, relative to my personal ranking of journals.) You can imagine a world where the really significant articles of 15-20 years ago are discussed much more in lower ranking journals, perhaps because they are mimicking what was going on in elite journals some time before. A priori, I wouldn’t have been surprised to find we were in such a world. But this is some (weak) evidence against it.

But what I really want to stress is how uneven the pattern is here. The two most cited articles of those six, by a lot, are the Anderson and the Machamer et al. Here are the citations each has on Google Scholar and on Web of Science as of today. (These numbers are sure to change in the future.)

| Article | Google Scholar cites | Web of Science cites |
|---|---|---|
| Anderson | 2104 | 552 |
| Machamer et al | 1844 | 668 |
| Lewis | 703 | 183 |
| Martin | 490 | 163 |
| Pryor | 768 | 268 |
| Stanley and Szabó | 777 | 239 |
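As a rough check on how far ahead the top two articles are, here is a minimal sketch that divides each of their counts by the average count of the other four. The numbers are copied from the table above; the choice of the four-article mean as the baseline is my assumption for illustration, not something the post specifies.

```python
# Citation counts copied from the table above: (Google Scholar, Web of Science).
counts = {
    "Anderson": (2104, 552),
    "Machamer et al": (1844, 668),
    "Lewis": (703, 183),
    "Martin": (490, 163),
    "Pryor": (768, 268),
    "Stanley and Szabó": (777, 239),
}

top_two = ["Anderson", "Machamer et al"]
others = [name for name in counts if name not in top_two]


def ratios_to_baseline(source_index):
    """Ratio of each top-two article to the mean of the other four.

    source_index is 0 for Google Scholar and 1 for Web of Science.
    Using the mean of the other four as the baseline is an assumption.
    """
    baseline = sum(counts[name][source_index] for name in others) / len(others)
    return {name: counts[name][source_index] / baseline for name in top_two}


google_ratios = ratios_to_baseline(0)
wos_ratios = ratios_to_baseline(1)

for name in top_two:
    print(f"{name}: {google_ratios[name]:.1f}x (Google), {wos_ratios[name]:.1f}x (WoS)")
```

On these figures the ratios fall between roughly 2.6 and 3.1, depending on the article and the database.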

So in terms of their impact on the journals collectively, those two articles have each been something like three times more influential than the other four articles. And those ‘other four articles’ rank somewhere between essential and field-defining in their importance to their subfields. And both the Anderson and the Machamer et al articles are in fields that I thought were pretty central to contemporary philosophy: political philosophy and philosophy of science. So they should be cited a lot in the ‘generalist’ journals, right? Right? Well, here’s the table above, this time with articles 1-2 collapsed and articles 3-6 collapsed, with just the ‘generalist’ journals listed, sorted by the number of times articles 1 + 2 were cited.

| Journal | Cites to 1 + 2 | Cites to 3-6 |
|---|---|---|
| Phil Studies | 10 | 104 |
| PPR | 5 | 41 |
| J Phil | 5 | 14 |
| CJP | 5 | 10 |
| Nous | 2 | 23 |
| Mind | 2 | 17 |
| AJP | 1 | 29 |
| Analysis | 1 | 20 |
| Phil Review | 1 | 15 |
| EJP | 1 | 14 |
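For anyone who wants to reproduce the collapsed table, here is a small sketch of the arithmetic. The per-article counts are copied from the first table. Breaking ties by the 3-6 column is my assumption; it happens to match the row order shown above.

```python
# Per-article citation counts for the 'generalist' journals,
# copied from the first table (articles 1-6, in order).
cites = {
    "Phil Studies": [3, 7, 15, 19, 51, 19],
    "PPR": [1, 4, 5, 10, 21, 5],
    "J Phil": [4, 1, 10, 0, 3, 1],
    "CJP": [5, 0, 3, 0, 3, 4],
    "Nous": [1, 1, 7, 3, 6, 7],
    "Mind": [2, 0, 0, 7, 5, 5],
    "AJP": [0, 1, 8, 4, 11, 6],
    "Analysis": [1, 0, 3, 2, 5, 10],
    "Phil Review": [1, 0, 6, 1, 6, 2],
    "EJP": [1, 0, 0, 8, 5, 1],
}


def collapse(per_article):
    """Return (cites to articles 1 + 2, cites to articles 3-6)."""
    return sum(per_article[:2]), sum(per_article[2:])


collapsed = {journal: collapse(row) for journal, row in cites.items()}

# Sort by cites to 1 + 2, largest first; ties broken by cites to 3-6
# (the tie-break rule is an assumption, not stated in the post).
ranked = sorted(collapsed.items(), key=lambda item: (-item[1][0], -item[1][1]))

for journal, (first_two, last_four) in ranked:
    print(f"{journal:12} {first_two:3} {last_four:4}")
```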

It’s only six articles, and I guess anything can happen in a small sample, but it was enough to suggest to me a hypothesis:

There is no such thing as a generalist philosophy journal.

How could we test this? Here was one way that I thought of. Philosophy has journals that are recognised as specialist journals. Some of them, like Ethics and Philosophy of Science, are roughly as high status as the generalist journals. We could measure how often articles in each journal cite other journals. If there was a generalist journal, it should routinely cite the high status specialist journals, and it should be routinely cited in both generalist and specialist journals.

So to that end I decided, again using Web of Science, to make a giant database of which journals cited which other journals. To avoid the problem that Web of Science can be a bit erratic in when it updates, I decided to focus on citations where both the cited article and the citing article were published between 1976 and 2015. And I focussed just on journals that Web of Science had indexed throughout that range, or (if they started after 1976) which Web of Science indexed from their foundation.

I chose 30 journals that were a mix of generalist journals and specialist journals. In part the choice was influenced by what Web of Science had available. And in part it was based on a deliberate over-sampling of specialist journals, because what I really cared about is the interaction between generalist and specialist journals. Here is the list of 30 I ended up with.

| Abbreviation | Journal |
|---|---|
| BJA | British Journal of Aesthetics |
| JAAC | Journal of Aesthetics and Art Criticism |
| JHP | Journal of the History of Philosophy |
| PHR | Phronesis |
| KS | Kant Studien |
| JPP | Journal of Political Philosophy |
| PPA | Philosophy and Public Affairs |
| ETH | Ethics |
| BP | Biology and Philosophy |
| PSCI | Philosophy of Science |
| BJPS | British Journal for the Philosophy of Science |
| SYN | Synthese |
| EP | Economics and Philosophy |
| RSL | Review of Symbolic Logic |
| JPL | Journal of Philosophical Logic |
| RM | Review of Metaphysics |
| EPI | Episteme |
| LP | Linguistics and Philosophy |
| ML | Mind and Language |
| Mind | Mind |
| AN | Analysis |
| PQ | Philosophical Quarterly |
| AJP | Australasian Journal of Philosophy |
| PS | Philosophical Studies |
| PPR | Philosophy and Phenomenological Research |
| Nous | Nous |
| PR | Philosophical Review |
| JP | The Journal of Philosophy |
| PPQ | Pacific Philosophical Quarterly |
| SJP | Southern Journal of Philosophy |
| APQ | American Philosophical Quarterly |
| CJP | Canadian Journal of Philosophy |

In part because it was easier to collect the data this way, and in part because I think it is a more meaningful measure, I didn’t count cites of more than one article from a journal in a given year, in a particular citing article. So if an article cited both my Intrinsic Properties and Combinatorial Principles, and David Lewis’s Redefining ‘Intrinsic’, both of which are PPR 2001, that would count as citing PPR once. I think that’s the right way to measure things; otherwise we’ll end up treating engagement with a symposium as a bigger deal than it really is.
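To make the counting rule concrete, here is a minimal sketch of how it could be applied, together with the 1976-2015 window described earlier. The record layout, the field names and the sample data are all hypothetical; the post does not say how the Web of Science export was actually processed.

```python
from collections import Counter
from typing import NamedTuple


class Citation(NamedTuple):
    """One reference in one citing article (hypothetical record layout)."""
    citing_article: str  # made-up article identifier
    citing_journal: str
    citing_year: int
    cited_journal: str
    cited_year: int


FIRST_YEAR, LAST_YEAR = 1976, 2015


def in_window(c):
    """Both the citing and the cited article must fall in 1976-2015."""
    return (FIRST_YEAR <= c.citing_year <= LAST_YEAR
            and FIRST_YEAR <= c.cited_year <= LAST_YEAR)


def journal_level_counts(citations):
    """Count each (citing article, cited journal, cited year) only once."""
    seen = set()
    counts = Counter()
    for c in citations:
        if not in_window(c):
            continue
        key = (c.citing_article, c.cited_journal, c.cited_year)
        if key in seen:
            continue  # second PPR 2001 paper cited by the same article
        seen.add(key)
        counts[(c.citing_journal, c.cited_journal)] += 1
    return counts


sample = [
    Citation("a1", "PS", 2005, "PPR", 2001),  # first PPR 2001 paper
    Citation("a1", "PS", 2005, "PPR", 2001),  # second PPR 2001 paper, not recounted
    Citation("a1", "PS", 2005, "PPR", 1998),  # different year, counted separately
    Citation("a2", "AN", 2016, "PPR", 2001),  # citing article outside the window
    Citation("a3", "AN", 2010, "JP", 1970),   # cited article outside the window
]

counts = journal_level_counts(sample)
print(counts)
```

In this toy sample, article a1 contributes two PS-to-PPR citations (one for 2001, one for 1998) rather than three, and the two out-of-window records are dropped.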

And, as mentioned above, I separated out the Nous citations from Philosophical Issues and Philosophical Perspectives.

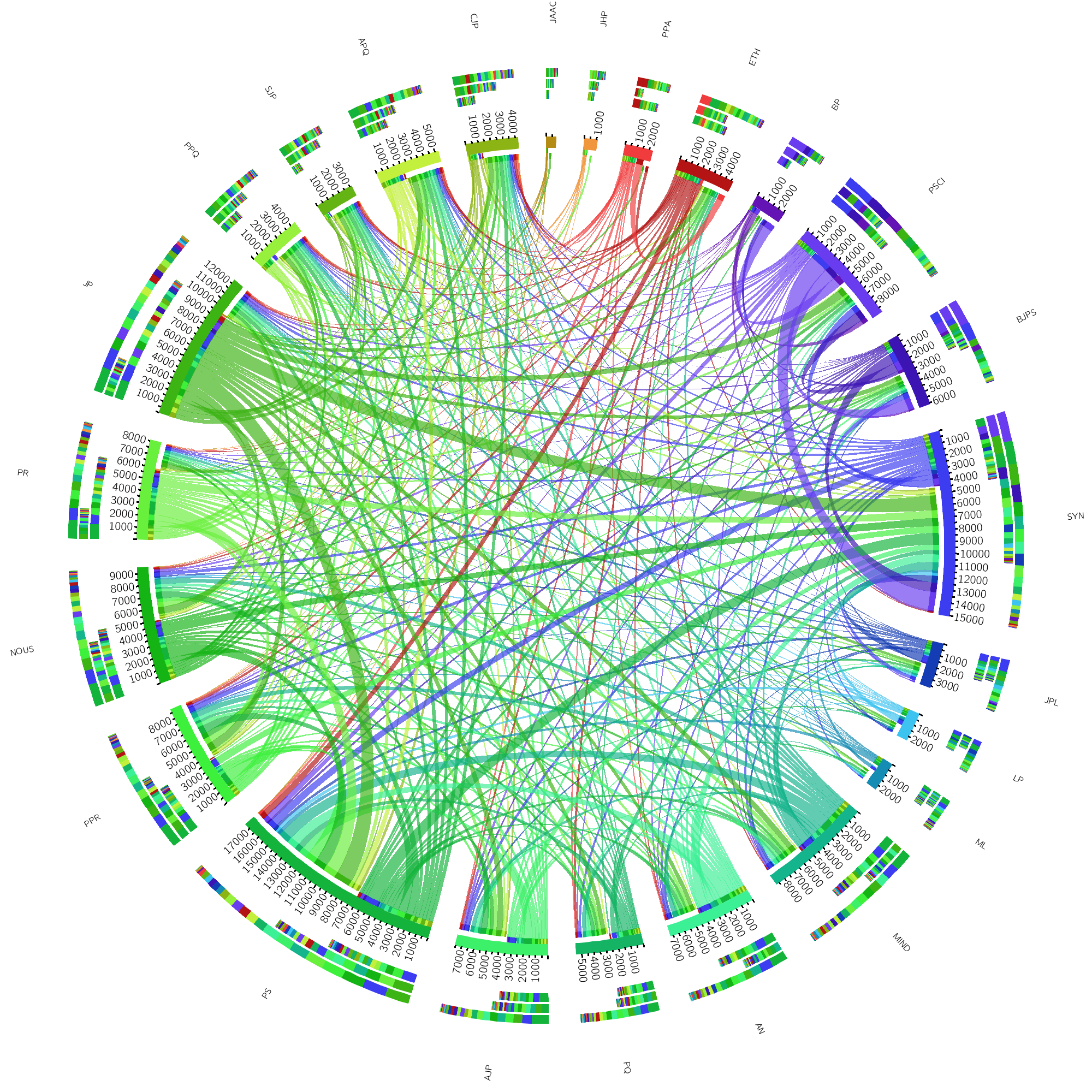

I fed this data into the (amazing) Circos Table Viewer, and here’s the first of the outputs. (Click on any image to get a higher res version.)

Between each (distinct) pair of journals, there are two lines, showing how often they cite each other. The lines go from the outer circle to the inner circle, and represent how many times the journal on the outer circle is cited by the journal on the inner circle. So you can see at a glance, for instance, that among this group, the Journal of Philosophy is cited many more times than it cites other journals, and the Canadian Journal of Philosophy cites other journals (in this group) much more often than it is cited by them.
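The "cited more than it cites" comparison can be read straight off a table of directed counts. Here is a sketch with made-up numbers; the journal abbreviations are from the list above, but the counts are purely illustrative and are not the real data.

```python
from collections import defaultdict

# links[(citing, cited)] = number of citations.
# These counts are invented for illustration only.
links = {
    ("CJP", "JP"): 40,
    ("JP", "CJP"): 5,
    ("PS", "JP"): 60,
    ("JP", "PS"): 20,
    ("CJP", "PS"): 30,
    ("PS", "CJP"): 10,
}


def totals(links):
    """Return (times each journal cites others, times each journal is cited)."""
    cites_out = defaultdict(int)
    cited_in = defaultdict(int)
    for (citing, cited), n in links.items():
        cites_out[citing] += n
        cited_in[cited] += n
    return dict(cites_out), dict(cited_in)


cites_out, cited_in = totals(links)

for journal in sorted(cited_in):
    net = cited_in[journal] - cites_out[journal]
    print(f"{journal}: cites {cites_out[journal]}, cited {cited_in[journal]}, net {net:+d}")
```

A positive net figure corresponds to a journal that is cited more than it cites within the group; a negative one corresponds to the reverse.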

The graph is a bit of a mess, so I tidied it up in two ways. First, I removed 8 journals that weren’t adding a lot to the graph, because they had very similar patterns to other journals that I included. (The 8 are BJA, PHR, KS, JPP, EP, RSL, RM, and EPI.) We’ll come back to those 8 in a bit, but for now I want to focus on the 22 remaining. Second, I used Circos’s feature that lets you cut out the thinnest 50% of the lines, so we can focus a bit more on what’s remaining. Here’s the result:

And we see a large scale version of what the six articles above suggested. With perhaps the exception of Philosophical Studies, there are very few journals that have strong connections to both the leading political philosophy journal, Philosophy and Public Affairs, and the leading philosophy of science journals, Philosophy of Science and BJPS. What really surprised me was how weak the links to Ethics are. I’m not surprised that some generalist journals don’t publish much aesthetics, feminist philosophy or history of philosophy: I knew those were gaps in coverage. I was surprised at how little philosophy of science, political philosophy, and even ethics, there seems to be in some leading journals.
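For readers wondering what cutting the thinnest 50% of the lines amounts to, here is one plausible reading as a sketch: rank the directed links by weight and drop the bottom half. I am not claiming this is exactly how Circos implements its filter, and the counts below are invented for illustration.

```python
# links[(citing, cited)] = number of citations (made-up numbers).
links = {
    ("CJP", "JP"): 40,
    ("JP", "CJP"): 5,
    ("PS", "JP"): 60,
    ("JP", "PS"): 20,
    ("CJP", "PS"): 30,
    ("PS", "CJP"): 10,
}


def drop_thinnest(links, fraction=0.5):
    """Drop the given fraction of links, starting with the smallest weights.

    This is an assumed reading of the filter, not the tool's documented rule.
    """
    ordered = sorted(links.items(), key=lambda item: item[1])
    n_drop = int(len(ordered) * fraction)
    return dict(ordered[n_drop:])


kept = drop_thinnest(links)

for (citing, cited), n in sorted(kept.items(), key=lambda item: -item[1]):
    print(f"{citing} cites {cited}: {n}")
```

One consequence of filtering this way is that a pair of journals can keep a line in one direction and lose it in the other, which is worth bearing in mind when reading the tidied graph.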

I’ll write more about this in future posts, including deeper dives into which journals do and don’t interact with which specialist journals, and some important caveats to the no generalist journals conclusion. (Spoiler alert: Past performance is not necessarily a guide to future results.)

And I’ll do a long look at how the journals have changed over time. The four takeaways will be:

- The Journal of Philosophy was unbelievably influential in the 1980s, but is now merely one leading journal among many.

- Stewart Cohen has done an incredible job with Philosophical Studies. (This is a bit of a recurring theme actually.)

- David Lewis gets cited a lot. It ends up being important to remember when the Lewis articles appear when getting a sense of the impact of various journals across time.

- The interactions between generalist and specialist journals don’t change a lot over time, but they do change a bit, and some of the differences are worth pausing over.

Thanks to Justin for posting this. I’m very interested to see what kind of responses the readers here have to it.

Thanks too to the good folks at Circos, especially Martin Krzywinski, for making the software (and the website) that makes these graphs possible. For more information on that software, see

Krzywinski, M. et al. Circos: an Information Aesthetic for Comparative Genomics. Genome Res (2009) 19:1639-1645

I have a million caveats I’d probably add to the post, and which I will add in future posts at TAR. But they can be summed up fairly easily: the main thesis here is a historical generic. It’s historical, so it really isn’t making a claim about how things are right now. New journals are born, and old journals change, and it’s dangerous to forecast that they will be now just like they were in the past. And it’s generic, so it allows for exceptions.

And, of course, I’ve just put some data forward here. There could be plenty of data that points in the opposite direction. And if there is, I’d love to see it. I’ve been investigating at a fairly coarse grained level; at the journal level not the paper level. It would be really interesting to see studies (perhaps using PhilPapers data?) that were more fine-grained than mine.

I’m not sure I’m fully understanding the chord diagrams. Does this take into account disparity in number of articles published between journals? For example, Phil Studies publishes a lot more than Phil Review; is there any adjustment for that?

I think the conclusion that there are no generalist journals is really important to grapple with in terms of how the field evaluates research outputs though.

No it doesn’t take that disparity into account. I want to write something about that in a follow up.

There are two big challenges here.

One is that the various databases tend to include book reviews alongside regular articles, and I couldn’t figure out a way to automatically separate them. (And I couldn’t imagine sorting the lists for hundreds of journal-years by hand.) I thought that I could perhaps sort the articles by page length, assuming I could automatically get the page length for various articles. But Phil Review publishes discussion notes that are shorter than some book reviews. So I don’t know what to do.

The other is whether discussion notes, and contributions to book symposia, should count as articles. They get cited, and in many ways they look like articles. But they get cited less than regular articles (even accounting for length), and they cite many fewer other articles than regular articles. So maybe we should exclude them. But then you get ‘discussions’ like Jack Spencer’s “Disagreement and Attitudinal Relativism”, which is 28 pages long and has dozens of citations, and it seems absurd to not count it as an article.

So I don’t know how to ideally take publishing disparity into account, and even if I had an idea about how to do so in theory, I don’t know how to do it in practice. I’m a bit stuck.

Wow, fascinating work here Brian, thanks.

However, I’m not that surprised by what you find. The top ‘generalist’ journals have for a long time been the only very high-status places (and remain the highest status venues) to publish M&E/LEMM. So they predictably get the lion’s share of submissions in those areas, which in turn probably serves to crowd out a lot of non-LEMM work… whereas most work in ethics and political (etc.) can be distributed among the ‘generalist’ journals plus Ethics and PPA, and might even be sent to these latter places first. Over time, we’d get the patterns you find, right?

In other words, the top ‘generalist’ journals have been a bit like de facto specialist LEMM journals, which also occasionally publish some other stuff. (That’s changing, of course: e.g., in M&E, Oxford Studies in Metaphysics, or Oxford Studies in Epistemology, or Episteme, etc. are becoming very strong specialist venues.)

The high status of specialist journals in a field does seem to be correlated with the under-representation of that field in generalist journals. It’s really hard to separate cause and effect here, but there is a correlation. Indeed, I think the rise in impact of Mind & Language over recent times is happening alongside there being less philosophy of mind work in the generalist journals. I don’t know whether the existence of Episteme, and the Oxford Studies yearlies, will have the same impact in those fields.

Mind and Language shouldn’t be having this effect on generalist journals, given that it is more a philosophy of cognitive science than a philosophy of mind journal.

This is a great idea for a study–thank you for sharing. I find the graphics beautiful but difficult to read, in part because I am not sure that I understand all of the visual elements. What do the colors represent, for example? My guess is that each citing journal gets a color and citing journals are coded as something like green for generalist, red for ethics, and blue for science. Is that right? And what are the outer bars, beyond the inner and outer circles? Is the point of these to capture the number of citations by each journal in a single dimension? What determines the order of the colors in those bars? It might be good to add a key to the graphics to improve readability…I also think the full name of the journal in at least some cases would be helpful.

Good questions!

Each cited journal gets a colour, so the big green bars coming out from JP are citations of the Journal of Philosophy.

The colour coding is rather arbitrary. To the extent there is a pattern, the idea is greens roughly for generalist journals, going through blue for journals like Mind & Language, and Linguistics & Philosophy, through to purple for philosophy of science, then reds and oranges for ethics, political, history and aesthetics.

The three outer bars are (working from outside in)

1) All connections

2) Times the journal cites other journals

3) Times the journal is cited by other journals

So 1 is 2+3. Within each bar, the ordering is by size of the connection; that is, the largest numbers are first. So you can sort of see, just by looking at how far down the line the purples and reds are, how little citation there is of the really specialist journals.

The various greens are not easy to distinguish for most people (and I imagine impossible for several people), and that’s a real downside of the graph. I couldn’t figure out a way to have 22 things on the graph, and have the various categories of journals have very different colours, and have all the journals have clearly distinct colours. I’m sure someone with better design sense than I could do much better, but this is already a big improvement over my first n tries.

And yeah, I probably use abbreviations much too much, and should spell things out more.

Very interesting analysis! I wonder if social network analysis of Stanford Encyclopedia citations would reveal the same thing — that is, tight co-citation of topically related entries and not nearly as much citation of the same sources among topically different entries? Alternatively, maybe when you go back far enough in time you get a lot of co-citation of the classics, e.g. Rawls, Kripke, Kuhn…. (Now that I try to think of 20th century names, I’m guessing not.)

When Kieran Healy’s network analysis of The Big Four Journals was making the social media rounds, I argued (in a much less sophisticated, and more narrow way) that those 4 journals are not generalist:

https://obscureandconfused.blogspot.com/2013/06/what-is-generalist-journal.html

The short version: in Healy’s dataset of 526 most-cited articles from The Big Four over the last two decades, only 1.7%-2.3% are in philosophy of science and mathematics.

FWIW, I cited Stanley and Szabo in an Ethics article published in 2010, though the above data give them 0 cites in Ethics.

That is very odd. I looked back, and it looks like Web of Science completely failed to index the April 2010 issue of Ethics – all of the articles in it are listed as having 0 citations in them. I hope there aren’t too many other bugs like this. And I’m sorry I hadn’t already noticed it; something that glaring should have popped out of the data at me.

Thanks for letting me know about it – I’ll have to look for more errors like that in the databases.

What really surprises me is that Google Scholar doesn’t have this reference either. I think something went funny with that whole issue of Ethics – hopefully the record can get corrected shortly.

That is really odd! Google Scholar did find my article from that issue when it shows me my papers and how many cites they have.

Web of Science is like that too. It shows the citations your paper has, but not what it cites. I wrote to them pointing out the bug, but haven’t heard back from them. I hope it gets fixed.