UK’s Research Excellence Framework (REF) & Philosophy

A few days ago, the results of the UK government’s 2014 Research Excellence Framework (REF) exercise were released. I suspect many non-UK readers are unfamiliar with the REF, so first some basics:

The REF is used by the government to assess the quality of research in UK higher education institutions. It replaced the earlier Research Assessment Exercise (RAE). According to the REF website:

- The four higher education funding bodies will use the assessment outcomes to inform the selective allocation of their grant for research to the institutions which they fund, with effect from 2015-16.

- The assessment provides accountability for public investment in research and produces evidence of the benefits of this investment.

- The assessment outcomes provide benchmarking information and establish reputational yardsticks, for use within the higher education (HE) sector and for public information.

There are three elements that are assessed:

1. Outputs: publications. For each person submitted, a department must (normally) submit 4 publications. Departments can choose not to submit people, and this time around many did so, which is every bit as unpleasant as one might imagine, for everyone. Departments can also submit anyone on a contract of as little as 20% and get full credit for their outputs. Some did a lot of this.

2. Impact: non-academic impact of research done in the last 15 years.

3. Research environment: the “vitality and sustainability” of the particular departments or other units.

All elements (right down to individual publications) are rated from 1* to 4*, with 4* the best. (Thanks to Jenny Saul for this simple explanation.)

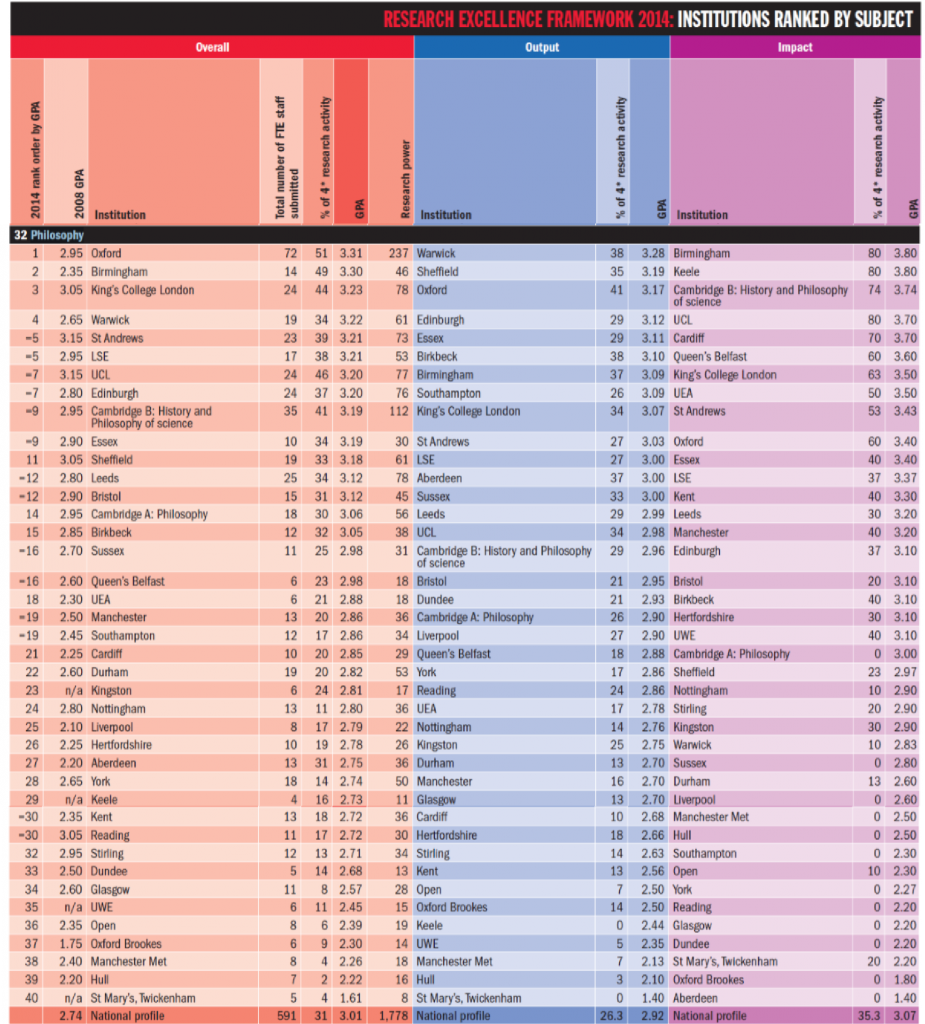

The following table (from pages 34-35 of a PDF available at the Times Higher Education Supplement) gives three rankings: overall average, outputs only, and impact only.

There are also other ways of ranking.

Michael Otsuka wrote in to recommend a different formula, one which does not give large departments a “gratuitous advantage simply for being large.” He suggests one “that just takes the percentage of a Department’s overall quality profile ranked 4*, multiplies that by 3, and adds that to the percentage of that Department’s overall quality profile ranked 3*. This reflects the government’s current formula of funding just 3* and 4* research, with 4* research funded at 3 times 3* research. But it treats all quality profiles of Departments as equal, however many people were submitted.”
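Otsuka’s formula is simple enough to state in a few lines of Python. The sketch below assumes a quality profile is given as percentages; the two departments and their figures are invented for illustration. `volume_weighted_score` is a hypothetical contrast (the same weighting scaled by the number of staff submitted), not an official REF or funding-council calculation.

```python
from dataclasses import dataclass

@dataclass
class QualityProfile:
    """Part of a department's overall quality profile (hypothetical data)."""
    name: str
    pct_4star: float      # % of the overall profile rated 4*
    pct_3star: float      # % of the overall profile rated 3*
    fte_submitted: float  # staff submitted, full-time equivalent

def otsuka_score(p: QualityProfile) -> float:
    # 4* counts three times as much as 3*; 2* and below count for nothing.
    # Headcount plays no role, so size alone earns no advantage.
    return 3 * p.pct_4star + p.pct_3star

def volume_weighted_score(p: QualityProfile) -> float:
    # Hypothetical contrast: the same weighting scaled by staff submitted,
    # so larger submissions score higher.
    return otsuka_score(p) * p.fte_submitted

departments = [
    QualityProfile("Dept A (large)", 40.0, 45.0, 60.0),
    QualityProfile("Dept B (small)", 50.0, 40.0, 12.0),
]

for d in sorted(departments, key=otsuka_score, reverse=True):
    print(f"{d.name}: Otsuka score {otsuka_score(d):.0f}, "
          f"volume-weighted {volume_weighted_score(d):.0f}")
# Dept B (small): Otsuka score 190, volume-weighted 2280
# Dept A (large): Otsuka score 165, volume-weighted 9900
```

On these made-up numbers the small department comes first on Otsuka’s measure but a distant second once size is factored back in, which is the distortion his formula is meant to remove.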

The ranking Otsuka’s suggestion generates is:

1. University of Oxford

2. University of Birmingham

3. King’s College London

4. University College London

5. University of Cambridge HPS

6. University of St Andrews

7. London School of Economics and Political Science

8. University of Edinburgh

=8. University of Warwick

9. University of Essex

10. University of Sheffield

11. University of Leeds

12. University of Bristol

13. University of Cambridge Phil

14. Birkbeck College

(Raw data is available here.)

Funding decisions by the UK government will be based on the REF, but my understanding is that it is not yet clear which particular ranking will be used to allocate funds. Discussion of any aspect of the REF and its use is welcome. (Posted by request.)

One issue I have heard raised is that only some departments included all of their faculty in their submissions, with the choice in many cases depending on research quality. If that is true, then a department that judged 72 of its faculty members to have high-quality work would seem to be much better off than one that judged only 10 to have high-quality work. Put another way, dividing by the number of faculty might unfairly advantage departments that submitted only a select subset of their faculty. But perhaps I am misunderstanding Michael’s method.

The problem with Michael’s metric is that it rewards some departments’ tactic of returning only their ‘best’ staff and leaving out those perceived to have weaker outputs. I wouldn’t want any assessment to reward size for its own sake, but unless there is some indication of the proportion of staff returned, the metric will be misleading.
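To make the worry concrete, here is a simplified sketch that applies Otsuka’s 3 × 4* + 3* weighting to a hypothetical department’s outputs alone (ignoring impact and environment), once with all five staff submitted and once with only the three strongest. The staff, their output ratings, and the helper names are invented; real REF results are never broken down to individuals.

```python
from collections import Counter

def profile(outputs):
    """Percentage of outputs at each star level (outputs-only simplification)."""
    counts = Counter(outputs)
    n = len(outputs)
    return {star: 100 * counts[star] / n for star in (4, 3, 2, 1)}

def otsuka_from_outputs(outputs):
    """Otsuka's weighting, 3 x %4* + %3*, applied to an outputs-only profile."""
    p = profile(outputs)
    return 3 * p[4] + p[3]

# Hypothetical department: five staff, four rated outputs each.
staff = {
    "A": [4, 4, 3, 3],
    "B": [4, 3, 3, 3],
    "C": [3, 3, 3, 2],
    "D": [3, 2, 2, 2],
    "E": [2, 2, 2, 1],
}

all_outputs = [s for outs in staff.values() for s in outs]
best_three = [s for name in ("A", "B", "C") for s in staff[name]]

print(f"All five submitted: {otsuka_from_outputs(all_outputs):.1f}")
print(f"Only A, B, C submitted: {otsuka_from_outputs(best_three):.1f}")
```

Leaving out D and E lifts the score from 90.0 to about 141.7 without anyone’s work improving, which is why commenters ask for some indication of the proportion of staff returned.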

Another complication is that ‘units of assessment’ don’t map perfectly onto departments. At UCL, for example, we returned five philosophers of science from the Science and Technology Studies department as part of the Philosophy submission, as well as one medical ethicist from the medical school. The full submissions are scheduled to be published in January, so it will then be possible to look at details like this, but their effect on the rankings will remain a matter of speculation, since individual scores are never published.

I have also been told that the allocation of research funds to Scottish universities goes through another mechanism, and so all that is at stake for them is league table position, which in some, but by no means all, cases has led to a more tactical approach to submission.

In response to Carolyn Dicey Jennings and Stuart Elden: from figures I have seen, it is clear that some departments have left out a proportion of their staff compared with the official ‘HESA’ data on staff numbers. But these figures are notoriously inaccurate. On the figures I have, Oxford returned 110% of its staff and Aberdeen 73%, with all the major departments somewhere in between. That is surprising, since in some cases it looks as though more staff than this have been left out. Those interested will be able to check in more detail in January.

As far as I can tell, there is practically no difference between the ranking Otsuka generates and the original one. There are several problems with his simple methodology. One is that large, rich departments can afford to hire ‘stars’ on 20% contracts just to enter them into the REF and thereby boost their performance. Another is that large, rich departments can cherry-pick the staff they enter: since they have many members, they can select just those who produce the highest-quality research. All in all, the REF, instead of measuring real performance, turns out to be the perfect device for maintaining the status quo; it does not really reward the small departments that often worked much harder just to maintain their position in the ranking.

Forgot to add: members of small departments typically carry heavier teaching and admin loads than those in large departments, which can keep their top researchers relatively free for research. This is another consideration that is not, and probably cannot be, taken into account in rankings like the REF or Otsuka’s.

Attila: Justin quotes some remarks I made on the other blog, where I was actually comparing the ranking I suggested with a ranking other than the THES ranking, which, I agree, is similar to mine. Stuart, I also noted in my remarks on the other blog that all rankings are distorted because they don’t reflect the ratio of actual to eligible submissions. The perils of being quoted out of context.

Apologies to Carolyn Dicey Jennings for not noticing that she also raises the issue of submission rates. I say more about this topic (in particular, about decisions to exclude faculty members from the REF so as not to drive down numbers on some of the more prominent rankings) in this public Facebook post: https://www.facebook.com/mike.otsuka.9/posts/312009802332619

A ranking of departments according to ‘intensity-weighted GPA’ has now been published. This takes into account the percentage of eligible staff actually submitted for assessment, thus addressing the concern Carolyn Dicey Jennings expressed above. Oxford comes first on this ranking, but as Jo Wolff indicates, the figure for eligible staff on which it is based MUST be wrong, since Oxford is listed as having submitted 72 people with only 65 eligible. Is anyone able to share the correct figure for eligible staff at Oxford? The same goes for Cambridge History and Philosophy of Science (35 submitted, 34 eligible) and Cambridge Philosophy (18 submitted, 17 eligible). I can confirm that the figure for UCL (24 eligible, 24 submitted) is correct. On this ranking, as it stands, UCL and Cambridge History and Philosophy of Science are joint second.
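A minimal sketch of how an intensity-weighted GPA might be computed, assuming (in line with the description above) that it is the usual GPA of the quality profile, (4 × %4* + 3 × %3* + 2 × %2* + 1 × %1*) / 100, multiplied by the proportion of eligible staff submitted. The submitted and eligible headcounts are the ones quoted above; the quality profile used for the GPA is hypothetical, as are the function names. A simple sanity check flags any intensity above 100%, which is how the suspect Oxford and Cambridge figures show up.

```python
def gpa(p4, p3, p2, p1):
    """GPA of a quality profile given as percentages (unclassified scores 0)."""
    return (4 * p4 + 3 * p3 + 2 * p2 + 1 * p1) / 100

def intensity(submitted, eligible):
    """Proportion of eligible staff actually submitted."""
    return submitted / eligible

def intensity_weighted_gpa(profile_gpa, submitted, eligible):
    # Assumed definition: GPA scaled by the submission rate.
    return profile_gpa * intensity(submitted, eligible)

# Submitted/eligible headcounts as reported in the post.
reported = {
    "Oxford": (72, 65),
    "Cambridge HPS": (35, 34),
    "Cambridge Phil": (18, 17),
    "UCL": (24, 24),
}

for name, (sub, elig) in reported.items():
    rate = intensity(sub, elig)
    flag = "  <- more submitted than eligible; data suspect" if rate > 1 else ""
    print(f"{name}: intensity {rate:.2f}{flag}")

# Hypothetical profile: 40% 4*, 45% 3*, 13% 2*, 2% 1*.
g = gpa(40, 45, 13, 2)
print(f"GPA {g:.2f}; all 24 of 24 submitted -> "
      f"{intensity_weighted_gpa(g, 24, 24):.2f}; "
      f"18 of 24 submitted -> {intensity_weighted_gpa(g, 18, 24):.2f}")
```

Under this assumed definition, a department that submits three quarters of its eligible staff sees its GPA scaled by 0.75, so leaving people out no longer pays off automatically; by the same token, an eligible-staff figure that is too low inflates the result.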